Automating your tests is almost always worthwhile: software ships faster, and tests run more often, which makes bugs easier to catch. However, you'll likely run into problems along the way:

- automation is a task that takes a lot of time and effort

- manual testers might not trust automation

Without specialized tools, automating a manual test case means first copy-pasting all the metadata and scenarios into Jira and your IDE; only then do you write the code. And you'll need to do it repeatedly because automation is not a one-time event.

Ongoing conversion

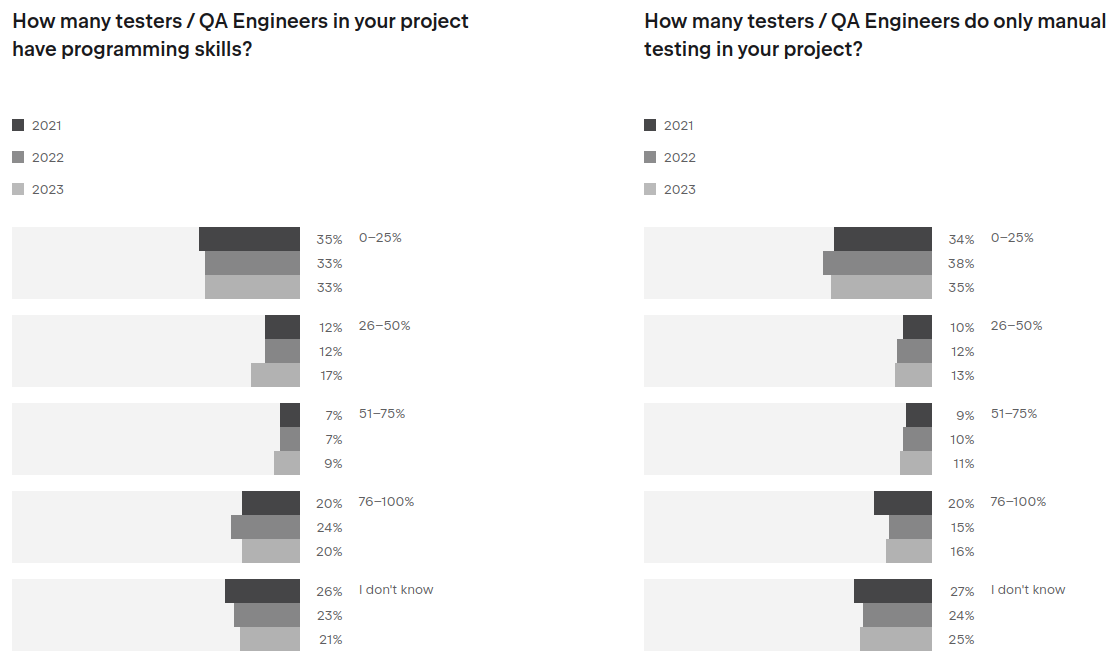

Automating existing manual test cases isn't a one-time issue for a company. JetBrains' research shows that manual testing isn't going anywhere:

And there is a good reason for that. Automation engineers are usually strongest on the code side of things: knowing the tools, the tech stack, and the patterns, and writing readable, performant code. Manual testers, on the other hand, tend to have better knowledge of test design, test theory, and the actual system they're testing.

Manual testers are the first to dig into a new feature when it's out. Doing the first few rounds of testing manually takes less time than writing automated tests. So, converting manual test cases will always be part of the workflow, and it will always be important to cut down its time and ensure the expertise of manual testers makes it into your code. Manual testers have to be able to trust automation.

Automation in a bunker

Manual testers and automation engineers often work as if the other team isn't there. When that happens, automation delivers nowhere near its full value to the team.

The reasons are usually poor communication channels and a lack of trust. On their own, a manual tester often can't read an automated test and doesn't know how to run it or analyze the results. An automation engineer, in turn, may misinterpret test cases and not fully understand how the system under test works. They also may not know the testing priorities, so they end up picking test cases to automate more or less at random.

It's not like either of them is bad at what they are doing; they are both great in their respective fields. What's needed is some common ground for them to talk to each other properly.

Common ground for manual and automated testing

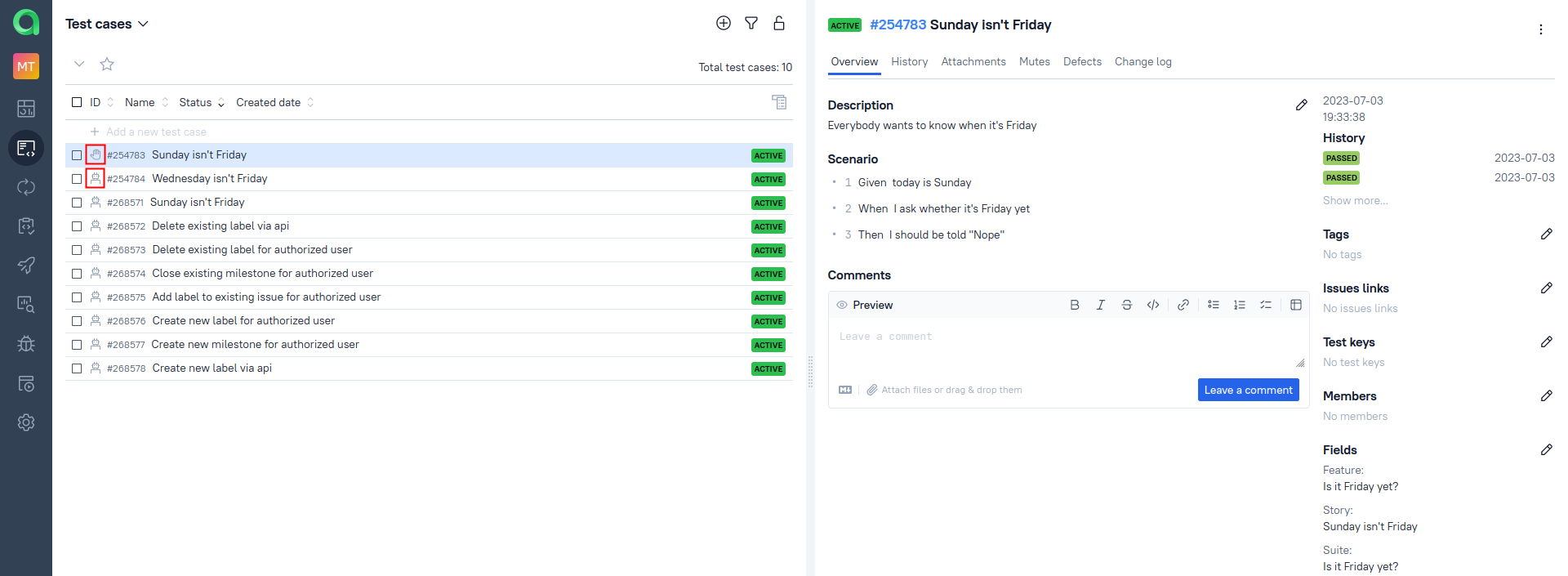

One way to solve that problem is to store manual and automated tests together in a single space, as is done in Allure Testops. Here, both types of tests look like traditional manual test cases; you can tell the two apart by the small icon next to their name:

This is the common ground I've been talking about, and it's the starting point for automation. Here are the basic steps:

- As a manual tester, you write a test case in Testops and mark that it needs automation.

- Then, with just a few clicks, an SDET converts this into boilerplate code for their IDE. They don't have to worry about writing meaningful titles, step names, feature names, tags, or anything else. They just write code.

- Then, the SDET uploads the code, and you can compare the steps from the original manual test case with steps from the new automated test to ensure its fidelity.

Let's go over this process in detail.

The IDE plugins for Allure Testops

The functionality I'm talking about is provided by the IDE plugins for Allure Testops. They can do plenty of stuff, not just what we've talked about here; for a thorough guide on all the features and on installing the plugins, you can visit our documentation. Here, let's do a quick step-by-step with PyCharm.

Let's say a manual tester has already created a test case and marked that it needs automation. You copy the ID of that test case.

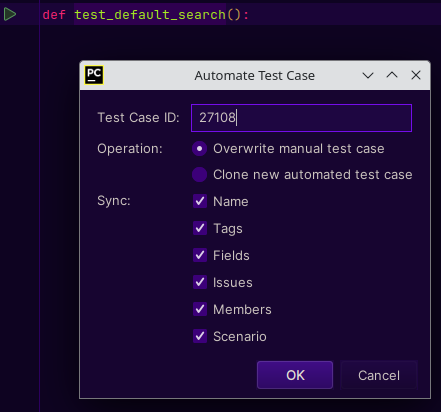

In the IDE, write the function signature for your test as you normally would, for example `def test_default_search():`. Then right-click it and select "Allure Testops: Import test case". In the dialog that appears, fill in the test case ID and any other required fields:

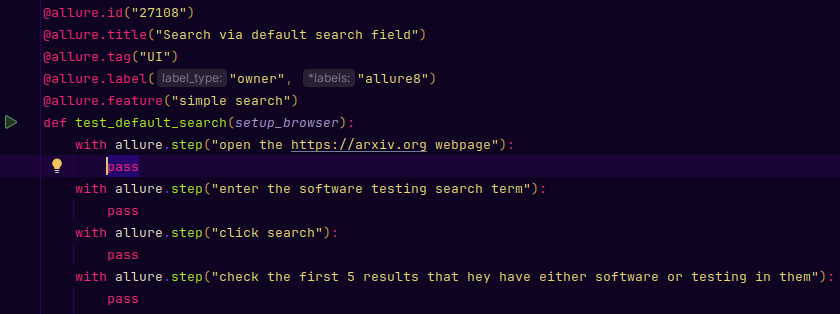

Once you've hit "OK", the IDE will create annotations for your test case based on the manual test:

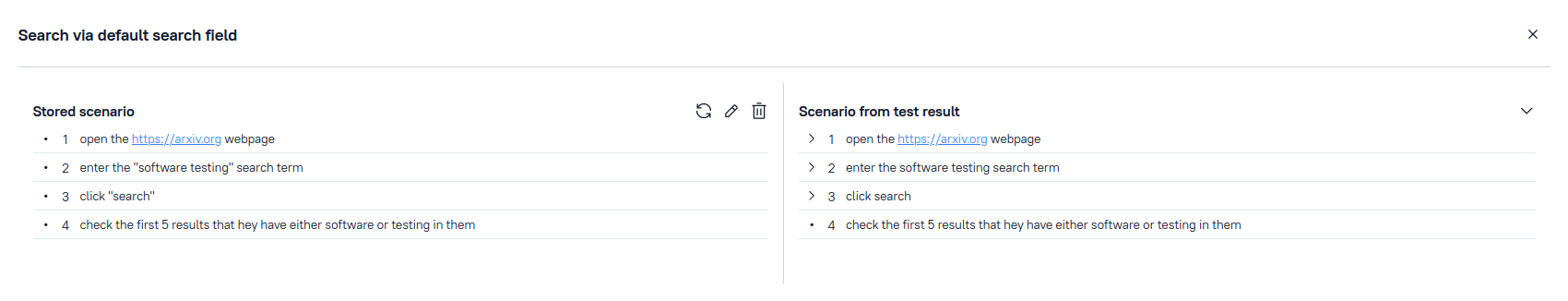

Finally, once you've run your new tests and the results have been uploaded to Testops, the manual test case will be automatically updated with the information from your code (that's called Smart Test Cases). Now, the manual tester can compare the steps from the old test case with what was uploaded.

Once they are satisfied, the manual scenario can be deleted, making the test fully automated.
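Testops shows this comparison in its own interface, but the underlying check is easy to picture. The sketch below is only an illustration of the idea, not how Testops does it: `diff_steps` is a hypothetical helper, the step lists are made-up sample data, and the comparison uses Python's standard `difflib` module.

```python
import difflib


def diff_steps(manual_steps, automated_steps):
    """Return unified-diff lines between manual and automated step names."""
    def normalize(steps):
        # Ignore case and extra whitespace so only real differences show up.
        return [" ".join(step.split()).lower() for step in steps]

    return list(difflib.unified_diff(
        normalize(manual_steps),
        normalize(automated_steps),
        fromfile="manual",
        tofile="automated",
        lineterm="",
    ))


# Made-up sample data: the automated test is missing the last manual step.
manual = ["Open the main page", "Search for a term", "Check the results"]
automated = ["Open the main page", "Search for a term"]

for line in diff_steps(manual, automated):
    print(line)
```

An empty diff means the automated test covers the same steps as the manual scenario; any line starting with `-` is a manual step that has no counterpart in the automated test yet.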

Conclusion

Think of Allure Testops as an icebreaker. Not in the sense that it cruises the Arctic (not that we know of, at least). Rather, it helps break down silence and indifference between parts of the team.

Importing test case metadata speeds up the work of SDETs and lets them concentrate on what they do best: writing code. A test scenario based on that code can then be compared with the old test scenario, showing SDETs and manual testers that they are working on the same thing.