The DORA metrics are pretty much an iceberg, with the five indicators sticking out above the surface and plenty of research hidden beneath the waves. With the amount of work that has been put into that program, the whole thing can seem fairly opaque when you start working with the metrics. Let's try to peek under the surface and see what's going on down there.

After our last post about metrics, we thought it might be interesting to look at how metrics are used at different organizational levels. And if we start from the top, DORA is one of the more popular projects today. Here, we’ll share some ideas we’ve had on how to use the DORA metrics, but first, there are some questions we’ve been asking ourselves about the research and its methodology. We’d like to share those questions with you, starting with:

Question: what is DORA?

DevOps Research and Assessment is a company founded in 2015. Since then, they have been publishing State of DevOps reports, in which they analyze development trends in the software industry.

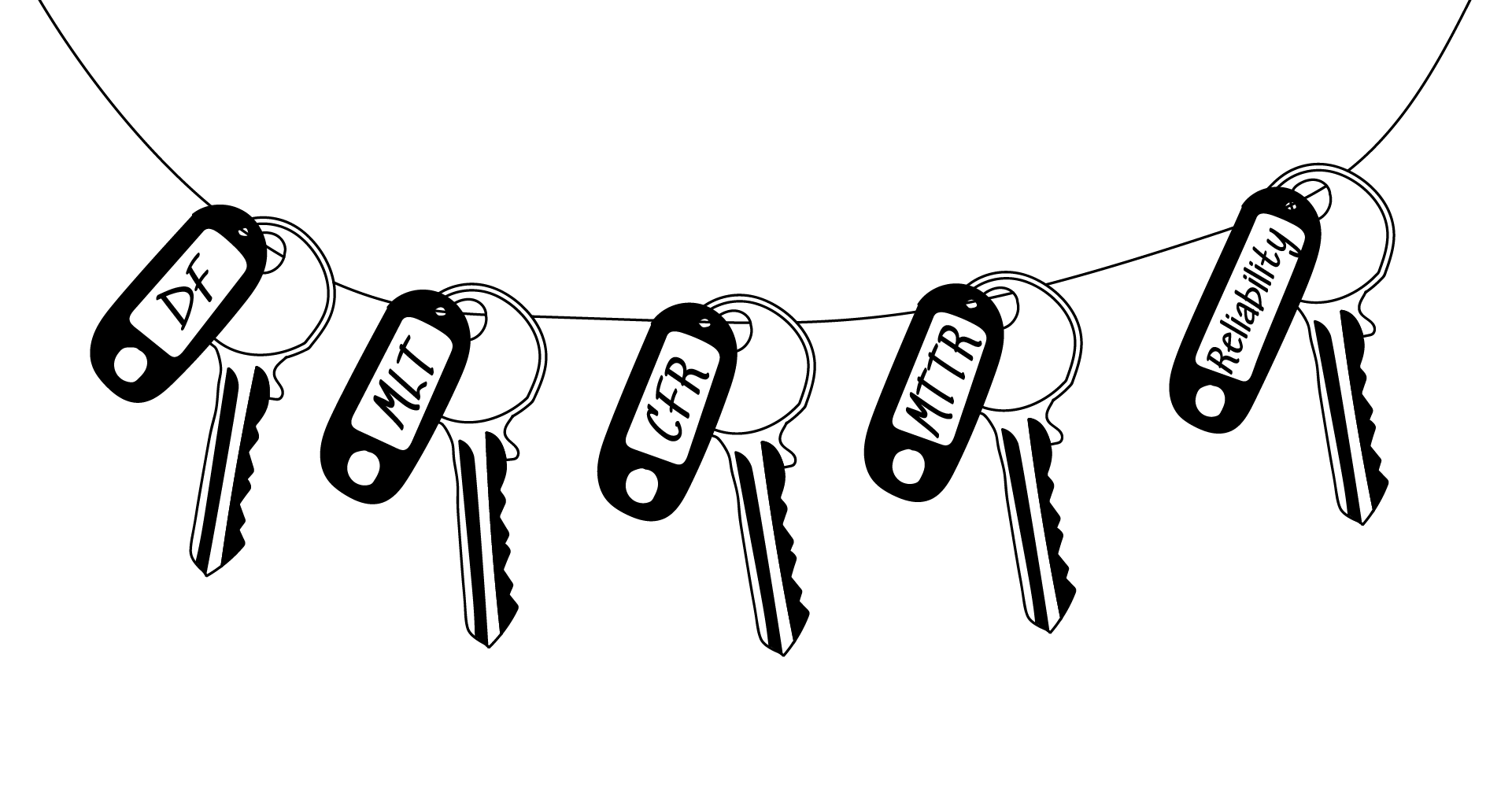

In 2018, the people behind that research published a book, Accelerate, where they identified four key metrics that have the strongest influence on business performance. A fifth indicator, reliability, was added to the list later:

- Deployment Frequency (DF). How often your team deploys to production.

- Mean Lead Time for Changes (MLT). How long it takes for a commit to get to production. Together with DF, this is a measure of velocity.

- Change Failure Rate (CFR). The number of times your users were negatively affected by changes, divided by the number of changes.

- Mean Time to Restore (MTTR). How quickly service was restored after each failure. Both this and CFR are measures of stability.

- Reliability. The degree to which a team can keep promises and assertions about the software they operate. This is the most recent addition to the list.
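To make the definitions of the first four metrics concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record, its field names, and the choice to count restore time from the moment of deployment are all our assumptions for illustration; real tools pull this data from CI/CD and incident systems and may define the boundaries differently. Reliability is left out, since it has no single formula.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record layout)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it negatively affect users?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def four_key_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute DF, MLT, CFR and MTTR for one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    total = len(deployments)
    hour = timedelta(hours=1)

    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    # Assumption: a failure starts at the moment of the failed deployment.
    restore_times = [
        d.restored_at - d.deployed_at
        for d in deployments
        if d.failed and d.restored_at is not None
    ]
    failures = sum(1 for d in deployments if d.failed)

    return {
        "deployments_per_day": total / period_days,
        "mean_lead_time_hours": sum(lead_times, timedelta()) / total / hour,
        "change_failure_rate": failures / total,
        "mean_time_to_restore_hours": (
            sum(restore_times, timedelta()) / len(restore_times) / hour
            if restore_times
            else 0.0
        ),
    }


# Made-up sample data: four deployments in one week, one of which failed.
t0 = datetime(2023, 1, 2, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=8), failed=True,
               restored_at=t0 + timedelta(hours=10)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=6)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
]
metrics = four_key_metrics(sample, period_days=7)
print(metrics)
```

With this sample, the mean lead time comes out at six hours, one change in four fails, and the single failure takes two hours to restore.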

The DORA team has conducted a truly impressive amount of work. They’ve done solid academic research, and in the reports, they always honestly present all results, even when those seem to counter their hypotheses. Ironically, the sheer volume of that work and of the data processed might present a limitation. When the DORA team applies their metrics, they back them up with detailed knowledge; that knowledge is absent when someone else uses them. And this is not a hypothetical situation: by now, these metrics are so popular that tools have been written specifically to measure them - like fourkeys or Sleuth. More general tools like GitLab or Codefresh can also track them out of the box.

The questions we’re about to ask might be construed as criticism - this is not the case. We’re just trying to show that DORA is a complex tool, which should be, as they say, handled with care.

Question: do the key metrics work everywhere?

The main selling point of the four key metrics is their universal importance. DORA found them to be significant for all types of enterprises, regardless of whether we're talking about a Silicon Valley startup, a multinational corporation, or a government. This would imply that those metrics could work as an industry standard. And in a way, this is how they are presented: the companies in DORA surveys are grouped into clusters (usually four), from low to elite, and the values for the elite cluster look like something everyone should emulate.

But in reality, all of this is more descriptive than prescriptive. The promoted value for, say, Mean Lead Time for Changes is simply the value observed in the companies that were grouped into the elite cluster, and that value can change from year to year: in 2019 it was less than one day, in 2018 and 2021 less than one hour, and in 2022 between one day and one week. By the way, that last figure is due to the fact that there was no "elite" cluster at all that year: the data was better described by three clusters. So, if we stop at this point and don't look further than the key metrics, we just get the message - here's a picture of what an elite DevOps team looks like, let's be more like them, everybody.

In the end, we're coming back to the simple truth that correlation does not imply causation. If the industry leaders that have embraced DevOps all display these stats, does it mean that by gaining those stats you will also become a leader? Chasing the numbers without proper understanding or regard for context might result in wasted effort. How much will it cost you to drive each of those metrics to the elite level - and keep them there indefinitely? What will the return on that investment be? To answer those questions, you're going to need to dig deeper - and the same goes for the next question on our list.

Question: we know how fast we are going - but in what direction?

Not only will you need something lower-level to complement the DORA metrics, you’ll also need something higher-level. As we’ve said before, a good metric should somehow be tied to user happiness. The problem is that the DORA metrics tell you nothing about business context - whether or not you’re responding to any kind of real demand out there. Using just DORA to set OKRs will paint a very incomplete picture of how well the business is performing. You’ll know how fast you’re going, but you might be driving in the opposite direction from where you need to be, and the DORA metrics won’t alert you to that.

Question: what is reliability?

This is what we kept asking ourselves while researching the fifth DORA metric. If you’ve read the 2021 and 2022 reports, you'll know that it was inspired by Google's Site Reliability Engineering (SRE), but you'll still be none the wiser as to what specific metrics it is based on, how exactly it is calculated, or how you might go about measuring your own reliability. The reports don't give any values for it, it does not appear in the nice tables where they compare clusters, and the Quick Check offered by DORA doesn't mention reliability at all. The last State of DevOps report states that investment in SRE yields improvements to reliability “only once a threshold of adoption has been reached” - but doesn’t tell us what that threshold is. This leaves a company in the dark as to whether they’ll gain anything by investing in SRE.

This is not to be taken as criticism of SRE - it's just that the way it's presented in the reports is opaque, and if you want to make any meaningful decisions in your team, you'll need to drill down to more actionable metrics.

Idea: check the DORA metrics against each other

The great thing about the key DORA metrics is how they can serve as checks for each other; each of them taken on its own would lie by omission. A team of very careful developers who are diligent with their unit tests and who are supported by a qualified QA team could have the same Deployment Frequency and Lead Time for Changes as a team that does no testing whatsoever. Obviously, the products delivered by those teams would be very different. So, we need a measure of how much user experience is affected by change.

Time to Restore tells us something about it - but on its own, it is useless, like measuring distance instead of speed. Spending two hours restoring a failure that happens once a month and spending two hours on one that happens once a day are two completely different things. Change Failure Rate to the rescue - it tells us how often changes fail, and together with Deployment Frequency, how often failures happen.

Another problem with MTTR: you could have a low value that is achieved by fixing every disaster with an emergency hack. Or you could have a high Deployment Frequency which allows you to roll out stable fixes in a quick and reliable manner. This is an extremely important advantage of a high DF: being able to respond to situations in the field in a non-emergency manner. Again, the metrics serve as checks for each other.
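The cross-check between MTTR, CFR, and DF can be put into numbers. The sketch below is our own back-of-the-envelope arithmetic, not a formula from the DORA reports: it assumes that every failed change causes one outage of average length, and all the figures are made up. It shows two teams with an identical MTTR of two hours, one failing roughly once a month and the other roughly once a day.

```python
def failures_per_month(deploys_per_month: float, change_failure_rate: float) -> float:
    """Expected number of failed changes per month (DF x CFR)."""
    return deploys_per_month * change_failure_rate


def downtime_hours_per_month(
    deploys_per_month: float, change_failure_rate: float, mttr_hours: float
) -> float:
    """Expected downtime per month, assuming one average-length outage per failure."""
    return failures_per_month(deploys_per_month, change_failure_rate) * mttr_hours


# Hypothetical teams: same MTTR and CFR, very different deployment frequency.
MTTR_HOURS = 2.0
team_a = downtime_hours_per_month(deploys_per_month=20, change_failure_rate=0.05,
                                  mttr_hours=MTTR_HOURS)
team_b = downtime_hours_per_month(deploys_per_month=600, change_failure_rate=0.05,
                                  mttr_hours=MTTR_HOURS)

print(f"Team A: about {team_a:.0f} hours of downtime per month")
print(f"Team B: about {team_b:.0f} hours of downtime per month")
```

Looking at MTTR alone, the two teams are indistinguishable; only the combination of the three values shows that the second team's users see about thirty times more downtime.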

Further, if we’re trying to gauge the damage from failures, we need to know how much every minute of downtime costs us. Figuring it out will require additional research, but it will put the figures into proper context and will finally allow us to make a decision based on the numbers. So, for the DORA metrics to be used, they need to be judged against each other and against additional technical, product, and marketing metrics.
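As a rough illustration of putting the figures into context, the sketch below turns downtime into money and estimates how long an improvement would take to pay for itself. The cost per minute, the size of the investment, and the assumed halving of MTTR are all hypothetical numbers; in practice, they would come from the additional research mentioned above.

```python
def monthly_downtime_cost(
    failures_per_month: float, mttr_minutes: float, cost_per_minute: float
) -> float:
    """Expected monthly cost of downtime: failures x minutes to restore x cost per minute."""
    return failures_per_month * mttr_minutes * cost_per_minute


def payback_months(investment: float, monthly_saving: float) -> float:
    """Months until an investment is repaid by the monthly saving it produces."""
    if monthly_saving <= 0:
        return float("inf")  # the investment never pays for itself
    return investment / monthly_saving


# Hypothetical figures: 30 failures a month, 50 currency units per minute of downtime.
current = monthly_downtime_cost(failures_per_month=30, mttr_minutes=120, cost_per_minute=50.0)
improved = monthly_downtime_cost(failures_per_month=30, mttr_minutes=60, cost_per_minute=50.0)
months = payback_months(investment=270_000, monthly_saving=current - improved)

print(f"Current cost: {current:.0f} per month, improved: {improved:.0f} per month")
print(f"Payback period: {months:.1f} months")
```

If the payback period turns out to be longer than the product's planning horizon, a non-elite MTTR may be the more sensible choice.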

Idea: know the limits of applicability

Taking this point further, there are situations where the DORA metrics don’t necessarily indicate success or failure. The most obvious example here is that your deployment frequency depends not only on your internal performance but also on your client’s situation. If they are only accepting changes once per quarter, there isn’t much you can do about that.

The last State of DevOps report recommends using cloud computing for improving organizational performance (which includes deployment frequency). This makes sense, of course - but clouds are not always an option, and this should be considered when judging your DF. If we take Qameta Software as an example, Allure TestOps has a cloud-based version, where updating is a relatively easy affair. However, if you want to update an on-premises version, you’ll need to work with the admins and it will take a while. Moreover, some clients simply decide that they want to stay with an older version of TestOps for fear of backward compatibility problems. Wrike has about 70k tests which are launched several times a day with TestOps. Any disruption to that process will have an extremely high cost, so they’ve made the decision not to update. There are other applications for which update frequency also isn’t as high a priority - like offline computer games.

All in all, there are situations where chasing the elite status measured by DORA might do more harm than good. This doesn’t mean that the metrics themselves become useless, just that they have to be used with care.

Idea: use the DORA metrics as an alarm bell

The DORA metrics are lagging indicators, not leading ones. This means that they don’t react to change immediately, and only show the general direction of where things are going. In order to be useful, they have to be backed by other, more sensitive and local indicators.

If we take a look at some real examples, we’ll see that the four key metrics are often used as an alarm bell. At first, people making decisions get dissatisfied with the values they're seeing. Maybe they want to get into a higher DORA cluster, or maybe one particular metric used to show better values in the past. So they start digging into the problem and drilling down: they either talk to people in their teams or look up lower-level metrics, such as throughput, flow efficiency, work in progress, number of merges to trunk per developer per day, bugs found pre-prod vs in prod, etc. This helps identify real issues and bottlenecks.
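An alarm bell of this kind can be very simple. The sketch below is one possible way to do it, not something prescribed by DORA: it compares the latest value of a metric with the mean of its recent history and rings when the value has worsened by more than a relative tolerance. The function name, the 20% tolerance, and the sample values are our assumptions.

```python
from statistics import mean


def alarm(
    history: list[float],
    latest: float,
    tolerance: float = 0.2,
    higher_is_better: bool = True,
) -> bool:
    """Return True when `latest` is worse than the historical mean by more than `tolerance`."""
    if not history:
        raise ValueError("need at least one historical value")
    baseline = mean(history)
    if baseline == 0:
        # No relative change can be computed; any move in the bad direction rings the bell.
        return latest < 0 if higher_is_better else latest > 0
    change = (latest - baseline) / abs(baseline)
    return change < -tolerance if higher_is_better else change > tolerance


# Hypothetical weekly values.
deploys_per_week = [10, 12, 11, 11]
failure_rates = [0.05, 0.04, 0.06, 0.05]

deploy_alarm = alarm(deploys_per_week, latest=7)                          # DF: higher is better
cfr_alarm = alarm(failure_rates, latest=0.055, higher_is_better=False)    # CFR: lower is better

print("Deployment Frequency alarm:", deploy_alarm)
print("Change Failure Rate alarm:", cfr_alarm)
```

Here the drop in deployments rings the bell, while the small rise in failure rate stays within tolerance. The alarm only says that something changed; finding out why still takes the drill-down described above.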

And if you want to know what actions you can take to address your issues, DORA offers a list of DevOps capabilities, which range from techniques like version control and CI to cultural aspects like improving job satisfaction.

Conclusion

Of course, there is a reason why some stuff like reliability might be opaque and why some technical details might be black-boxed (or gray-boxed) in the DORA metrics. It seems like they were created for a fairly high organizational level. At some point, the authors state explicitly that the DORA assessment tool was designed to target business leaders and executives. This, combined with its academic background, makes it an impressive and complex tool.

In order to use that tool properly, we, as always, have to be aware of its limitations. We have to know that in some situations, a non-elite value for the key metrics might be perfectly acceptable, and chasing elite status might cost more than that chase will yield. Business context and customer needs have to be held above these metrics. The metrics have to be checked against each other and against more local and leading indicators. If all of this is kept in mind, the DORA metrics can provide a useful measure of your operational performance.

We'll be back with more metrics

We plan to continue this series of articles about metrics in QA. The previous one outlined a series of requirements for a good metric, and now we want to cover specific examples of metrics on different organizational levels. After DORA, we'll probably want to go for something lower-level, so stay tuned!