Modern technology requires people to work together closely and communicate. For that, they need something to talk about, a shared context. But if everyone is a specialist in just their own field, they won't know what the other person is talking about. Today, we'll try to solve this problem!

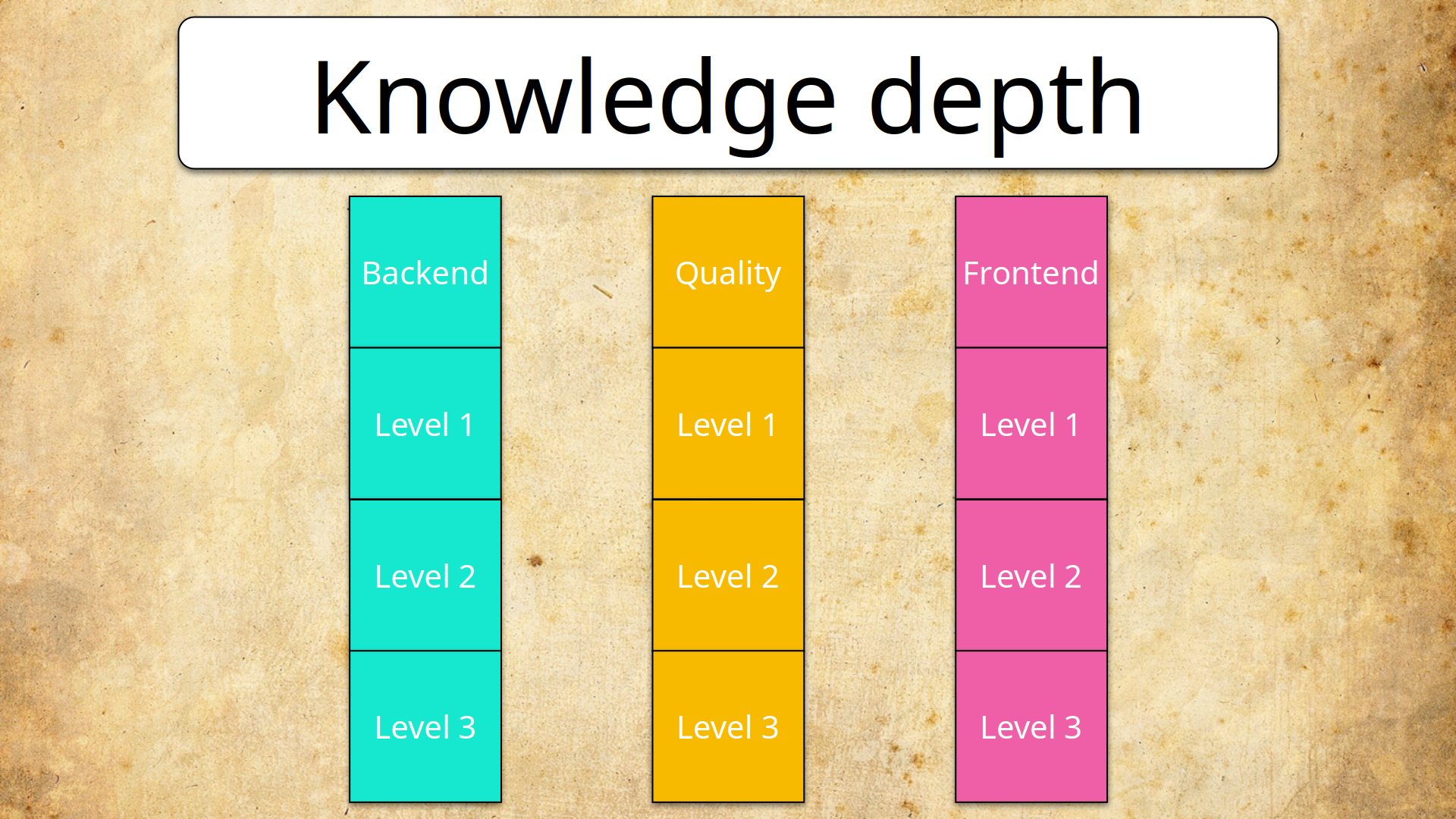

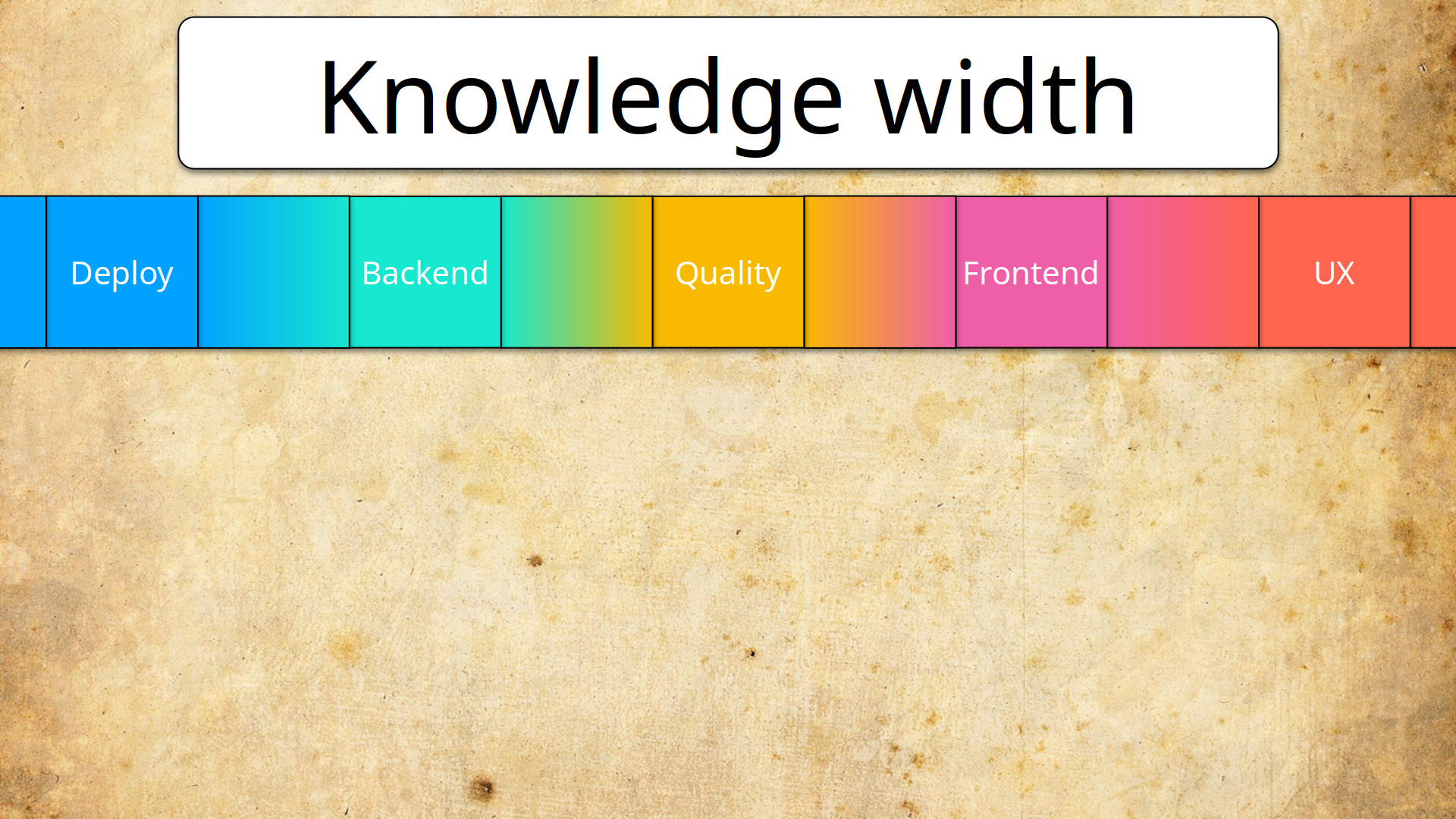

But first, let's talk about T-shaped specialists. What does the term mean? The idea is that as a professional, you can either go deep or you can go wide.

Growing as a professional

I-shaped and dash-shaped growth

Say you're a junior working on the backend, and you can do some simple stuff like writing controllers or saving JSON data to a database. As you accumulate experience and learn in your field, your knowledge profile will deepen, and you will learn more about architecture, different design principles, databases, scaling, etc. We can dub this person a "scientist", someone who digs deep into their field, an I-shaped specialist.

Another direction for your professional growth is understanding more about the fields of the other people on your team. If you are a backender, you'll probably have a frontend developer and a tester beside you. You could learn basic stuff about forms and requests or how to write simple tests. Here, you go for width, not depth; you become a "sage", a dash-shaped specialist.

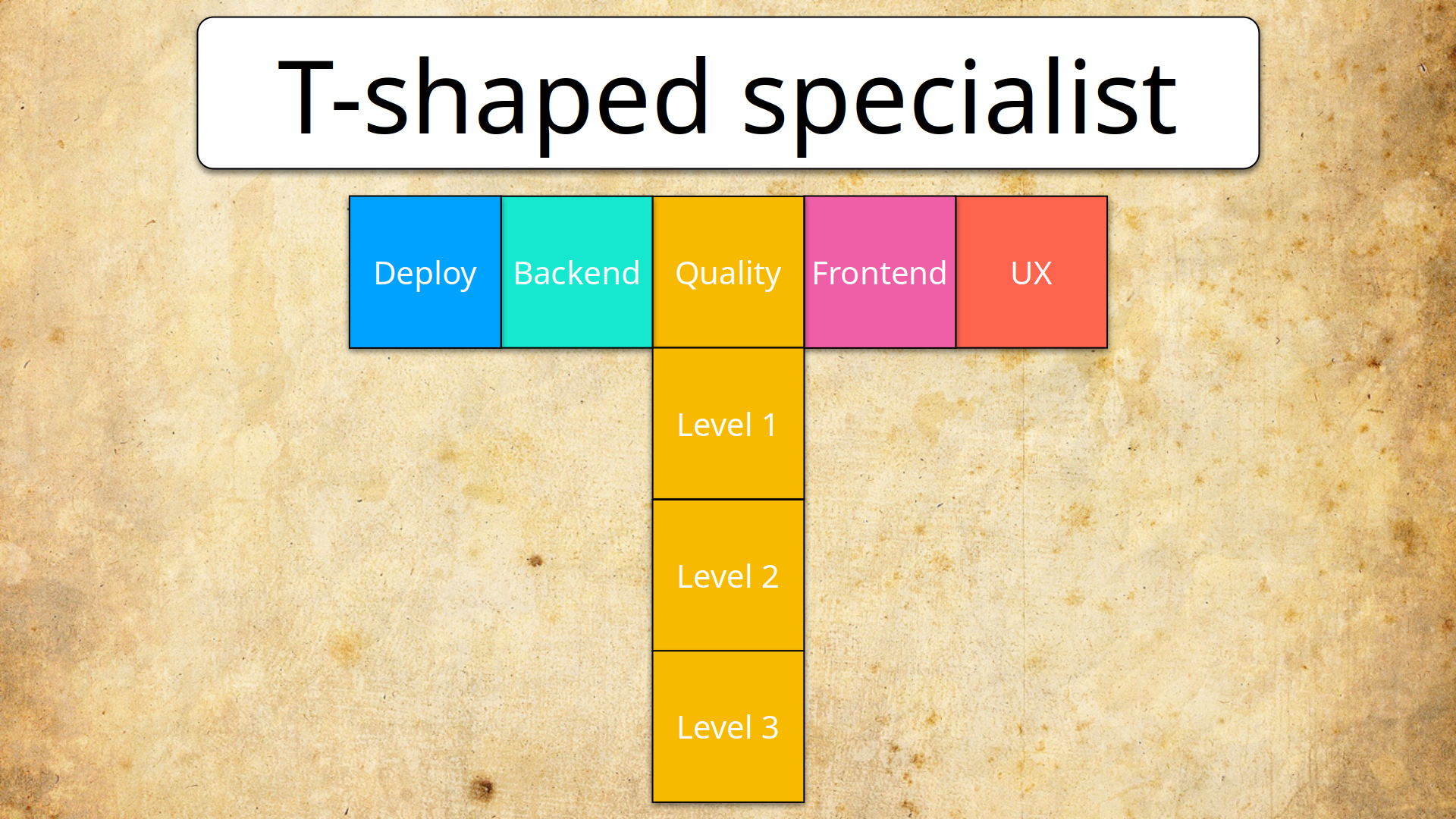

T-shaped growth

Finally, there's the combination of the two directions:

A functioning enterprise will require people to develop in both directions. Understanding SOLID principles is essential for a developer, but so is writing unit tests, and the two are related. Going both deep and wide means you'll be T-shaped, which is valuable today.

Going wide helps with communication and with understanding the general goals of the enterprise - which is why it is vital for business owners. However, they aren't the only ones who need it.

If people from different teams have trouble talking to each other, the cost of communicating will be extremely high, especially when communicating goals. Everyone will hear things their own way and have a different definition of done.

You can counter this problem by spelling everything out in detail, but then tests requiring 30 minutes of work end up with a specification that takes testers 3 hours to go through. Of course, the cost of not communicating goals at all would be even higher.

Silos

This is the problem of silos. People work either in the context of business needs or within the narrower focus of their own fields; the distribution of effort is different in each case (you can see it in the metrics they use).

Of course, if each team is holed up in its own domain, all the communication will go through the manager, who will be constantly swamped.

The three amigos approach, which brings the business, development, and testing perspectives together, tries to solve the silo problem by simply getting people to bloody talk to each other once in a while. And it works: there is research showing that transdisciplinary teams have an advantage when tackling "wicked problems" - problems that lack precise definitions, stopping rules, or opportunities for trial and error.

Building communication APIs

So, how do you get there? It helps to work on your communication APIs - your shared boundaries with other specialists.

There are many ways to share your expertise: meetings, code reviews, or just having a beer with colleagues. However, one of the most effective ways is a report.

If we go with the metaphor, what does an API do? It tells a person from the outside what a complicated system will spit out from its insides. But that is exactly what a report does!

A report is a shared context for the inside and the outside. It condenses knowledge so that people with different backgrounds can talk about it. It's a universal tool for creating common ground.

So what, you just build a report, and that's it, the communication problem is solved?

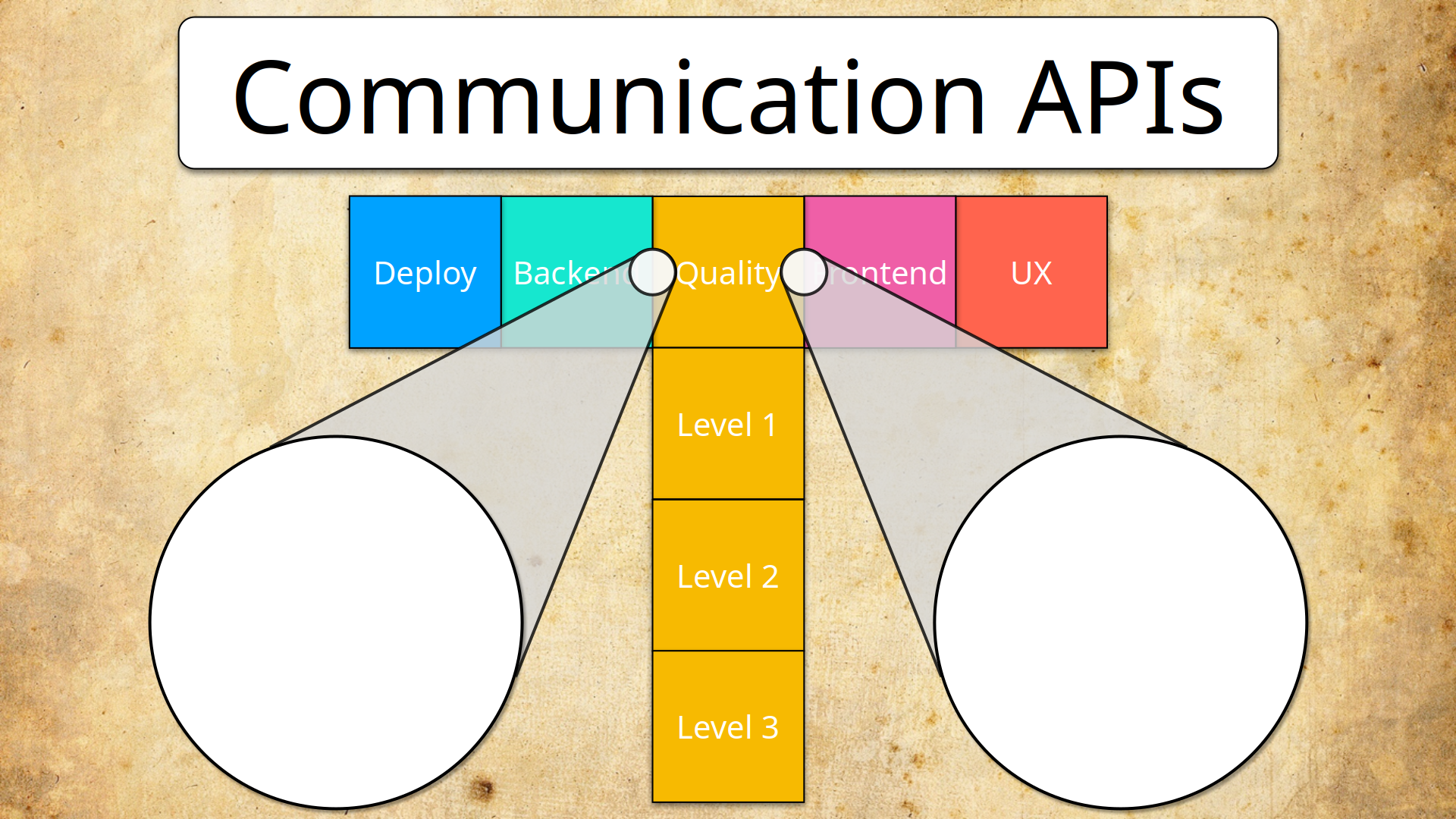

Actually - yes, it is. Let's have a look at a few reports, shall we? In most IT companies, there is a frontend team, a backend team, and a QA team. So, we'll look at reports that can be created at the interfaces between those teams.

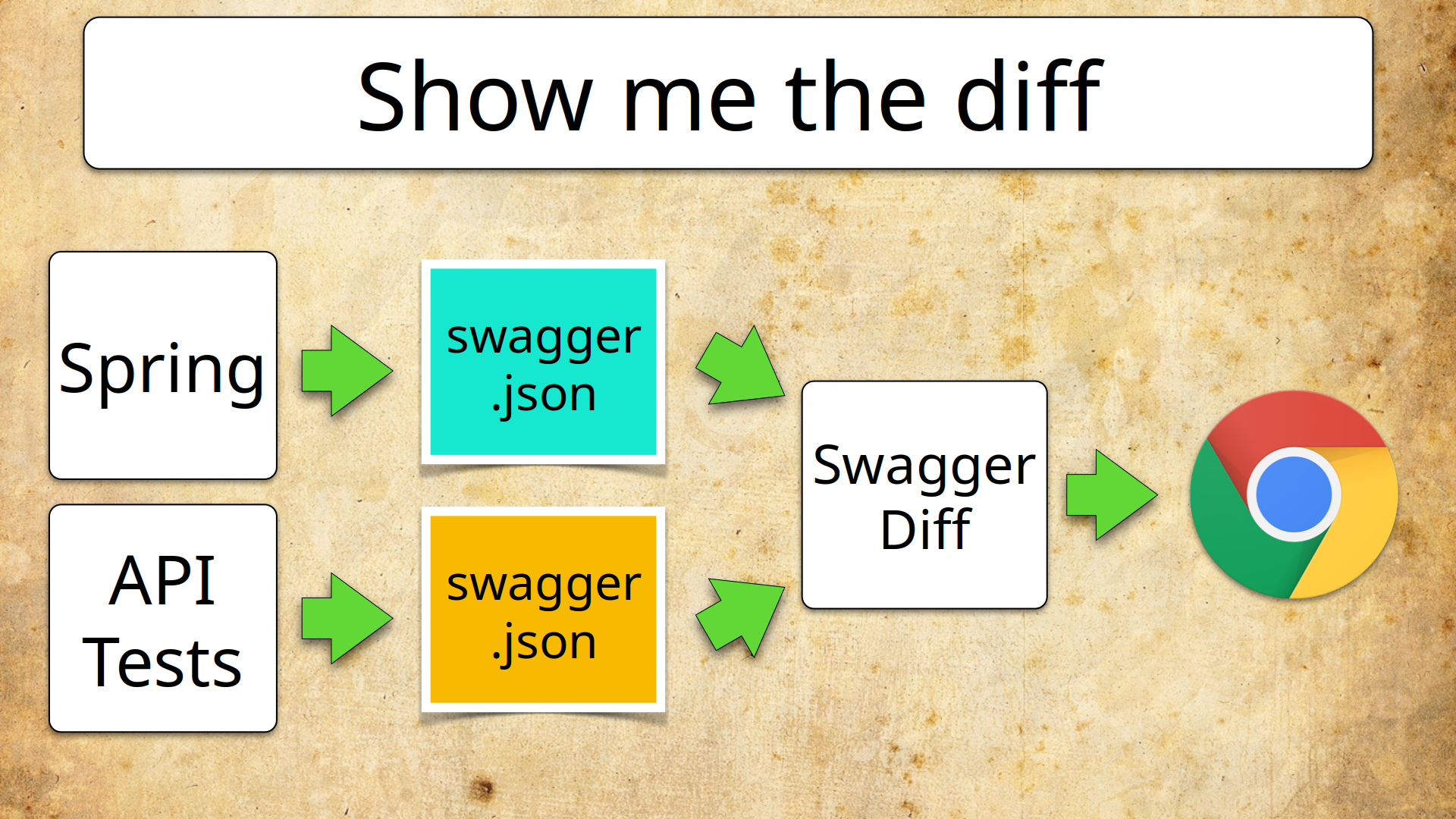

Quality <=> Backend: Swagger diff

What kind of shared context is there for QA and the Backend? Well, there are the APIs: devs write them, and QA tests them. Here, we can use Swagger.

With language-independent documentation for APIs, Swagger provides the kind of shared context we're talking about. I've created a report builder that compares two Swagger specifications, one for the tests and one for the API.

This report tells you your test coverage - and provides common ground for testers and backend developers.

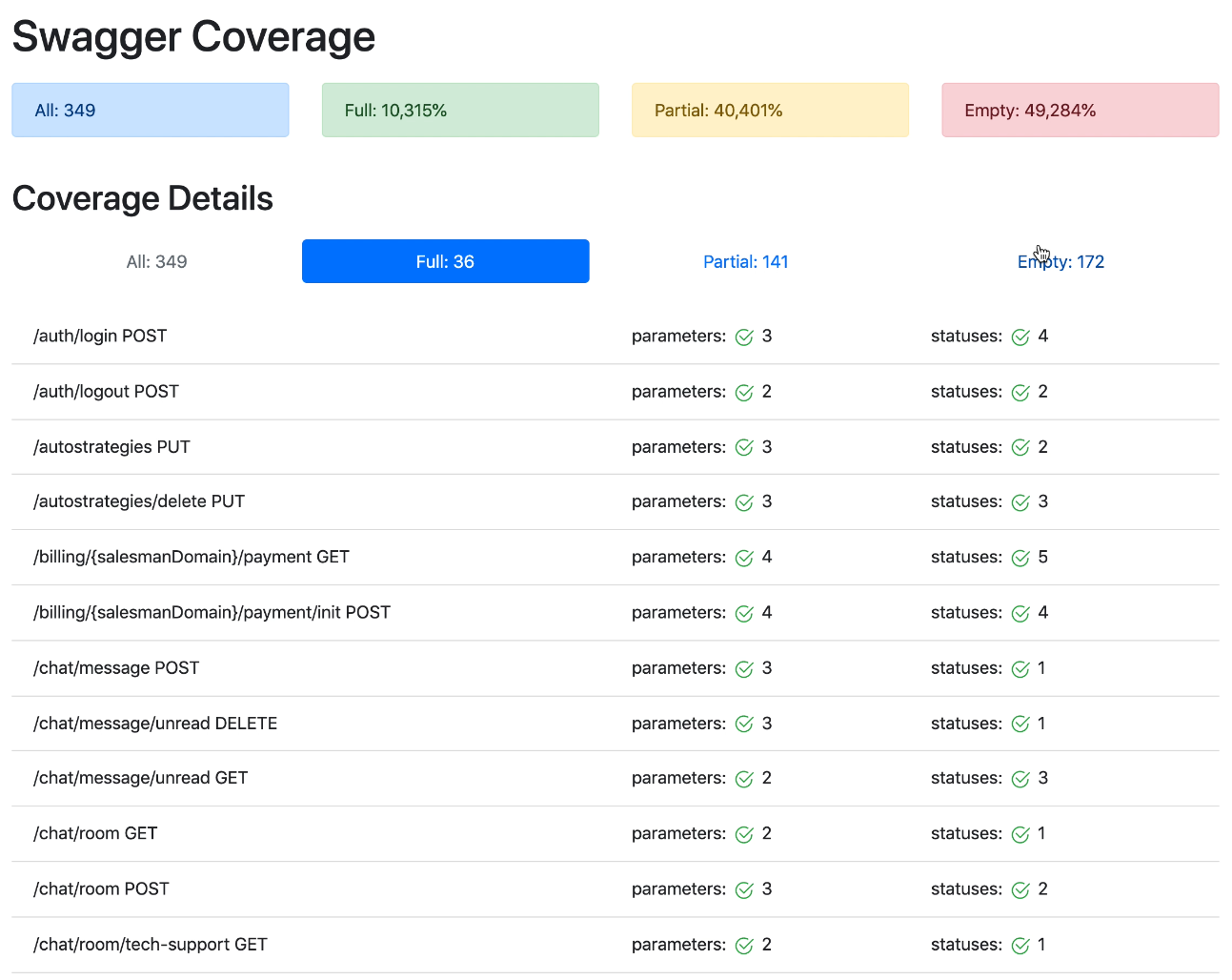

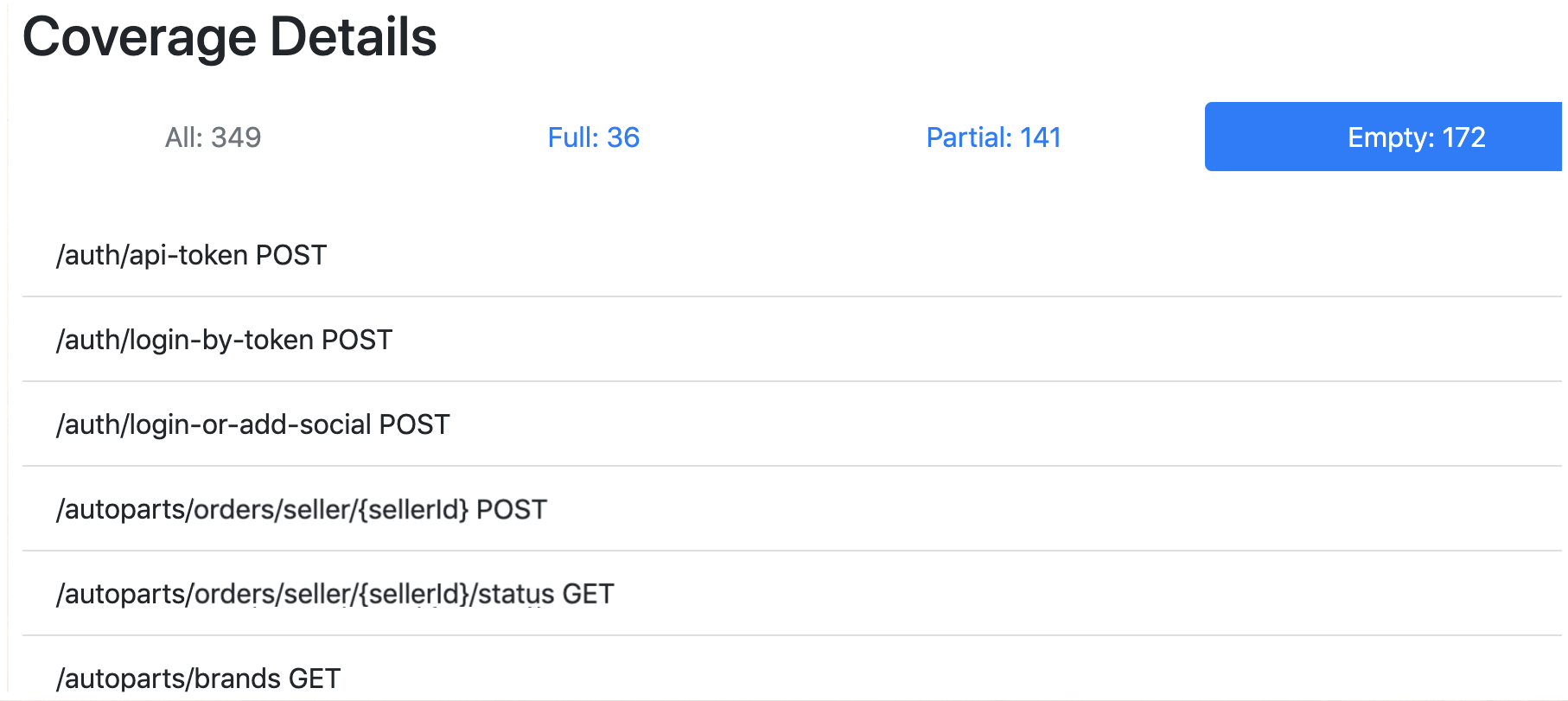

Here's how the report looks:

The green ticks mean that the respective API calls have been covered with tests. Everything that hasn't been covered is listed under "Empty":

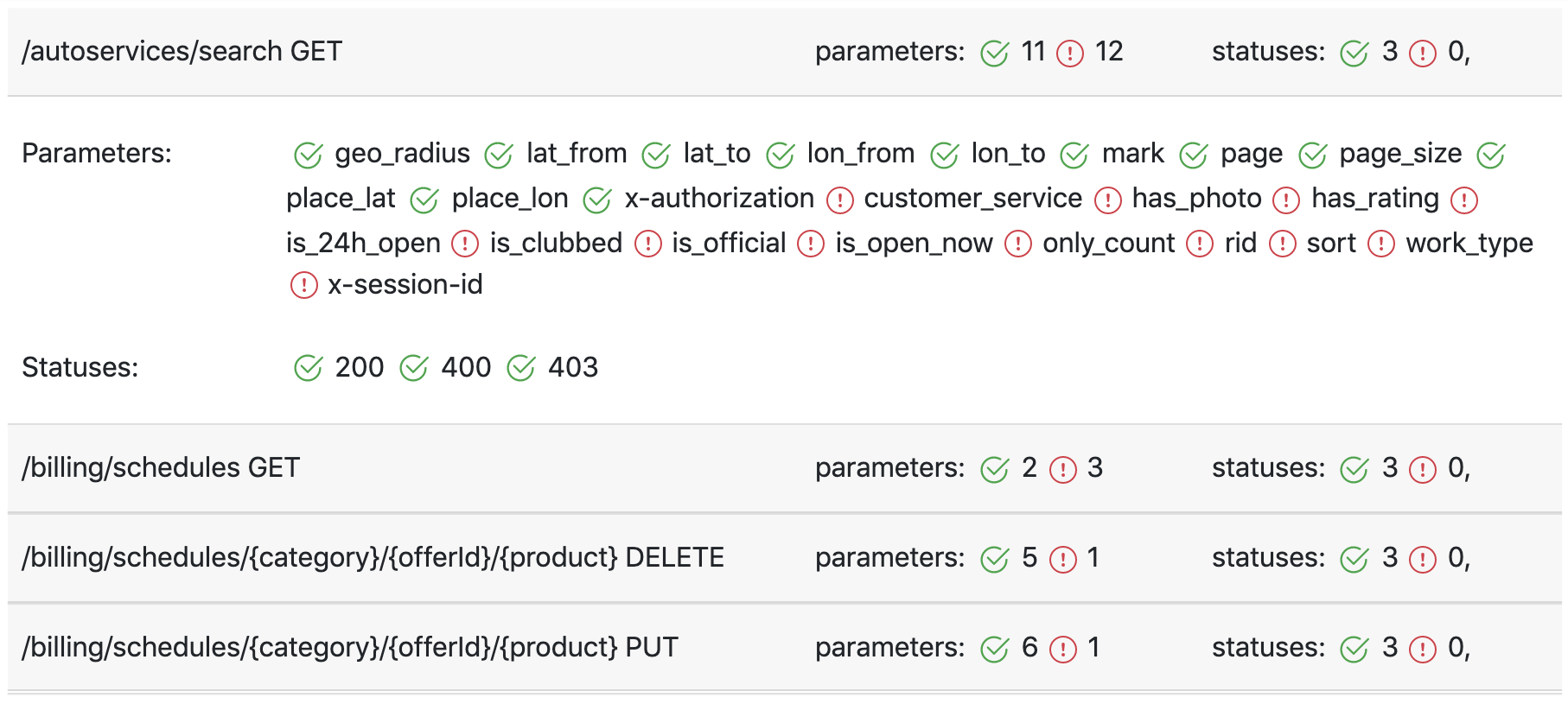

Finally, under "Partial", you can dive into a particular call and see what parameters and status codes haven't been covered with tests:

Why is such a report useful? Well, the dev knows what functionality is critical, and the tester knows what functionality is covered. With this report:

- the developer can check if some serious risks haven't been covered;

- the tester can track new features that need tests;

- both are prompted into conversations with one another.
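
The comparison behind such a report can be sketched roughly as follows. This is a minimal illustration, not the actual report builder: it assumes both documents have already been loaded as dictionaries with the usual `paths -> method -> responses` layout, and the function names `status_codes` and `coverage_report` are made up for this example. It only looks at status codes and ignores parameters.

```python
from typing import Dict, List, Set, Tuple

Call = Tuple[str, str]  # (path, HTTP method)


def status_codes(spec: dict) -> Dict[Call, Set[str]]:
    """Flatten a spec into {(path, method): {status codes}}.

    Assumes every key under a path is an HTTP method (a simplification).
    """
    result: Dict[Call, Set[str]] = {}
    for path, methods in spec.get("paths", {}).items():
        for method, operation in methods.items():
            result[(path, method.lower())] = set(operation.get("responses", {}))
    return result


def coverage_report(api_spec: dict, test_spec: dict) -> dict:
    """Sort every declared API call into full, partial, or empty coverage."""
    declared = status_codes(api_spec)
    exercised = status_codes(test_spec)
    full: List[Call] = []
    partial: Dict[Call, List[str]] = {}
    empty: List[Call] = []
    for call in sorted(declared):
        if call not in exercised:
            empty.append(call)  # no test touches this call at all
            continue
        missing = declared[call] - exercised[call]
        if missing:
            partial[call] = sorted(missing)  # status codes never seen in tests
        else:
            full.append(call)
    return {"full": full, "partial": partial, "empty": empty}


# Hypothetical specs: what the API declares vs. what the tests exercised
api = {"paths": {
    "/users": {"get": {"responses": {"200": {}, "404": {}}},
               "post": {"responses": {"201": {}}}},
    "/orders": {"get": {"responses": {"200": {}}}},
}}
tests = {"paths": {
    "/users": {"get": {"responses": {"200": {}}}},
    "/orders": {"get": {"responses": {"200": {}}}},
}}

report = coverage_report(api, tests)
print(report)
```

With this sample data, `GET /orders` lands under full coverage, `GET /users` is partial because the 404 response is never exercised, and `POST /users` is empty.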

Frontend <=> Quality: Webdriver Coverage

What is the shared context for frontend devs and QA? It's - you guessed it - the frontend. We could build a report that looks at the elements on a page and counts how many times each one was picked by a selector in an automated test. That would show us the test coverage of our page.

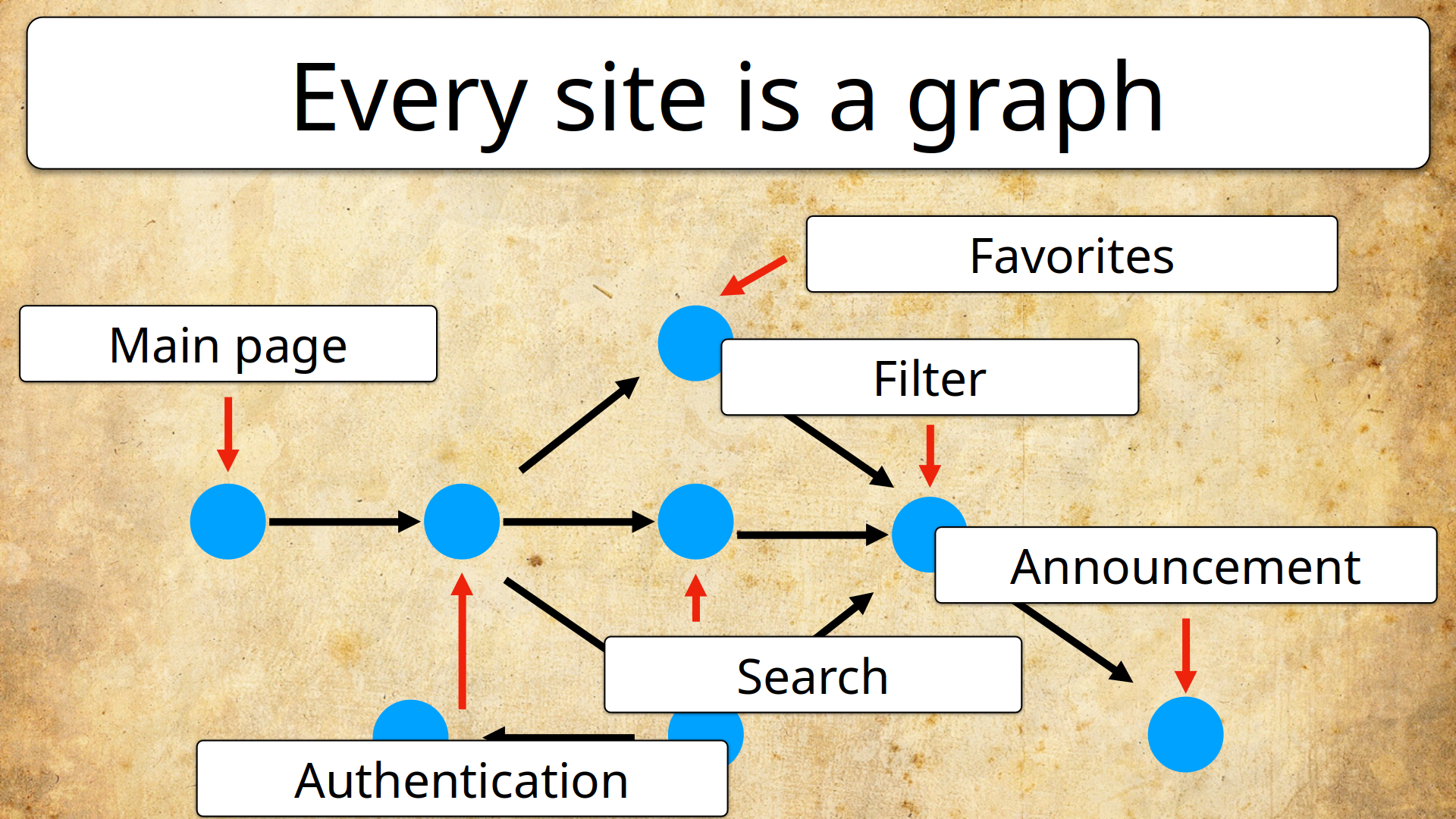

The idea behind it is that every site can be represented as a graph:

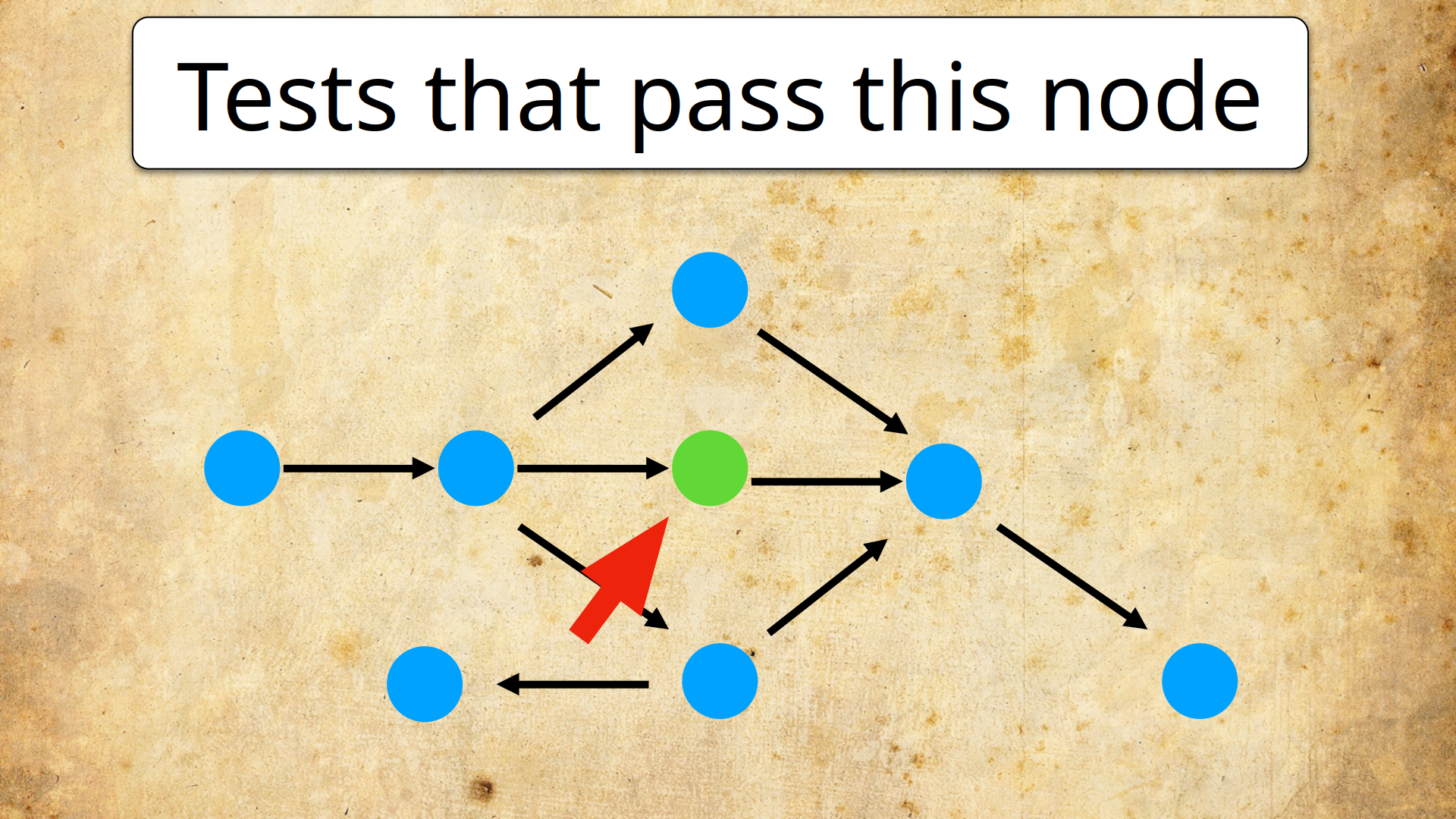

Both users and tests start at the entrance, and then go through nodes of that graph. And it can help a lot to know how much stuff will break if we change a particular node.

Unfortunately, this report depends on how well your tests are written. If all the selectors are based on the IDs of elements, you'll get the full picture. But if the selectors don't use unique identifiers, the coverage data is going to be off.
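
Here is a minimal sketch of the counting step, assuming the selectors used by each test have already been collected somehow (for example, by logging element lookups). The data and helper names are hypothetical, and treating only plain `#id` CSS selectors as unique is a deliberate simplification.

```python
from collections import Counter
from typing import Dict, List


def selector_usage(selectors_by_test: Dict[str, List[str]]) -> Counter:
    """Count how many tests use each selector (each test counts once)."""
    usage: Counter = Counter()
    for selectors in selectors_by_test.values():
        usage.update(set(selectors))
    return usage


def is_id_selector(selector: str) -> bool:
    """Treat a plain CSS '#id' selector as a unique identifier (simplified)."""
    return selector.startswith("#") and " " not in selector


# Hypothetical selectors collected from two automated tests
selectors_by_test = {
    "test_login": ["#login-button", "#username"],
    "test_logout": ["#login-button", "div.menu > a"],
}

usage = selector_usage(selectors_by_test)

# Selectors that are not ID-based make the coverage numbers less reliable
unreliable = sorted(s for s in usage if not is_id_selector(s))
print(usage["#login-button"], unreliable)
```

Listing the non-ID selectors next to the counts shows at a glance how much of the coverage data can be trusted.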

So, imagine a frontend developer wants to refactor the page. They're about to rewrite a button, and they see in their report that 87 tests use that button. Okay, that's definitely cause to stop and think; maybe even go and warn QA that there is a truckload of work coming their way. So, like I said - reports encourage communication!

Frontend <=> Backend: Allure Report

Another great base for reports is an automated test. For the three amigos concept, automated acceptance tests provide a common expression of what we want from the system. But test reports also create shared contexts for different developers.

Today, a multilanguage tech stack is very common - for instance, Go, Python, and Java on the backend, with a frontend written in JavaScript. In this situation, it can be tough to figure out the causes of failures - you need to dig into different reports for different languages, and it all becomes a tangled mess in your head.

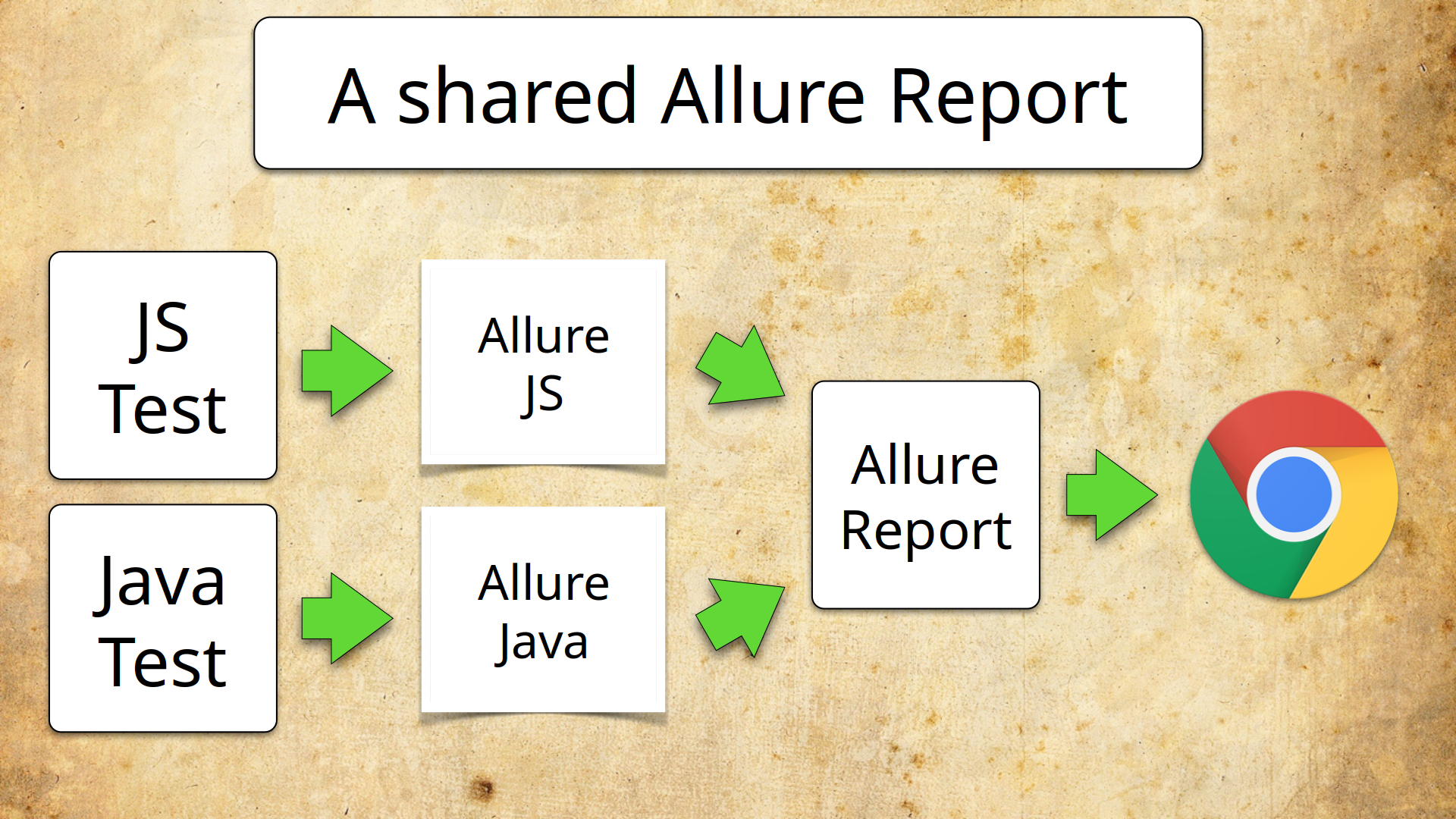

Here's where Allure Report shines because it integrates with pretty much anything you can think of. Tests from every framework in every language all get transformed into the same data format. This base is used to display your test results in various neat ways. This means we can build an Allure report based on two languages:

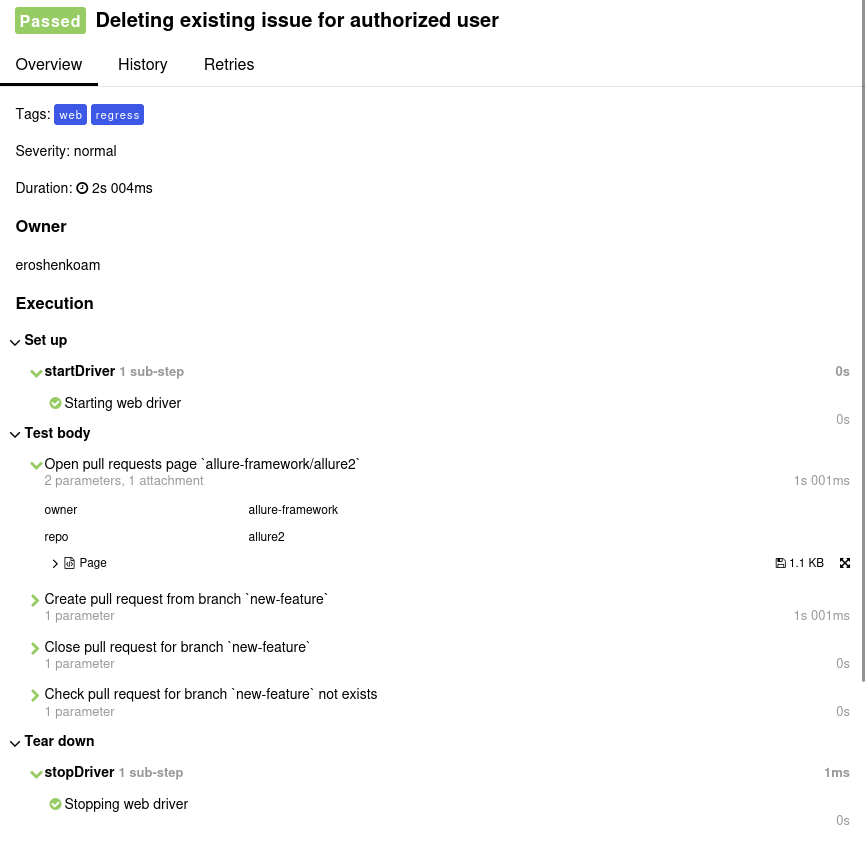

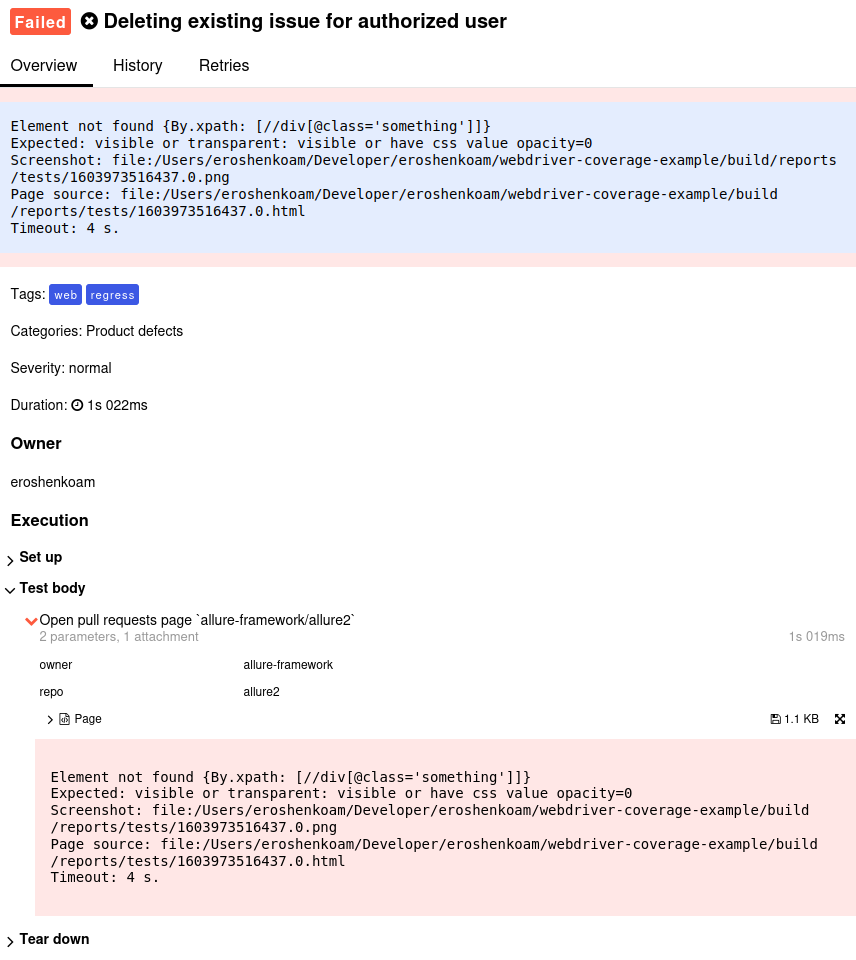

Every test gets a representation in plain natural language, which doesn't depend on the framework:

This has everything you need to analyze the cause of failure - steps you can read without knowing the programming language, screenshots, and the HTML of the page under test. Failed tests also include stack traces:

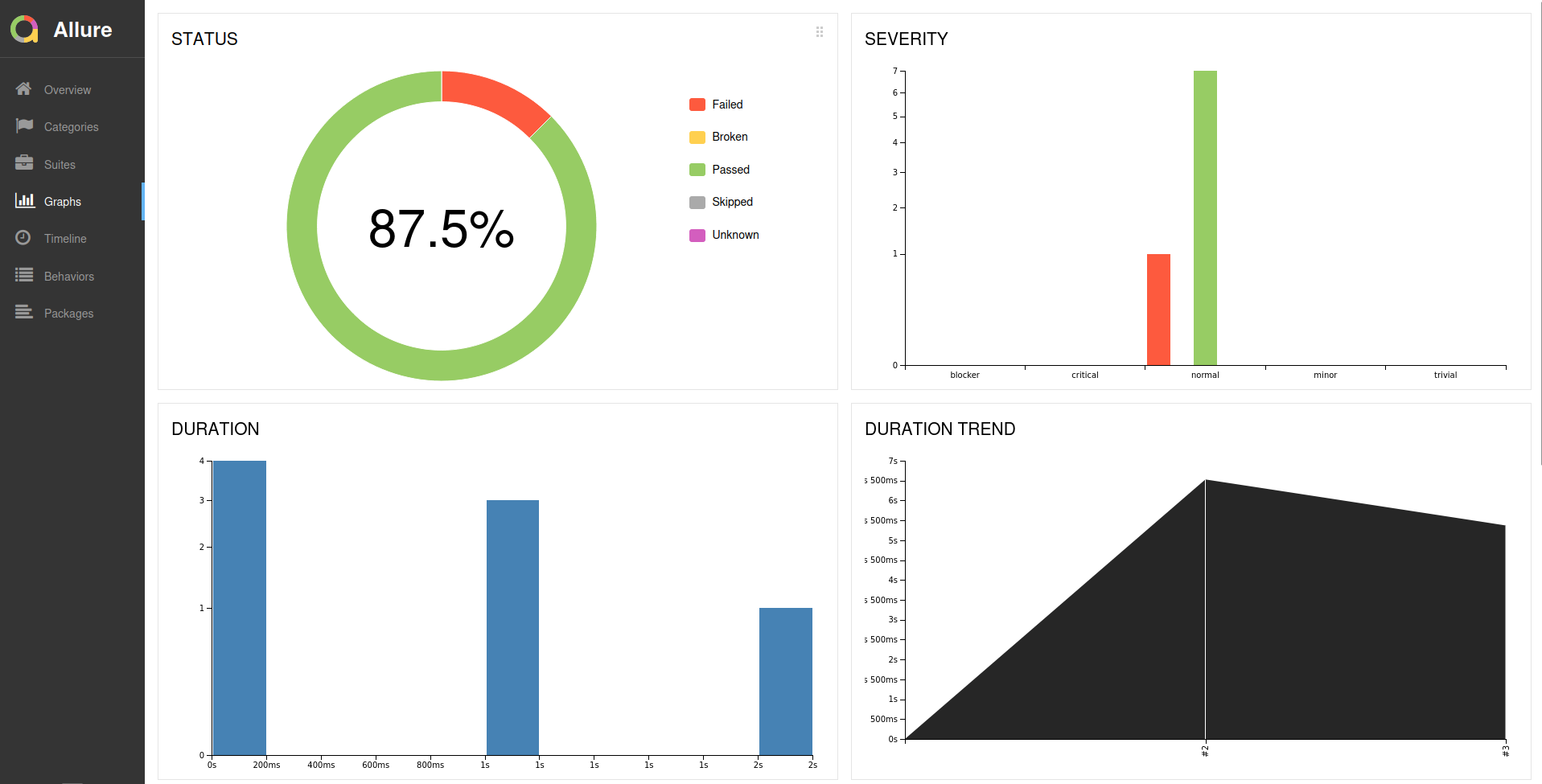

And there are also graphs based on aggregated data from your test results:

This allows people with different tools to see the same picture and understand each other.
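
To make the "same data format" idea concrete, here is a toy sketch of normalizing results from two different test runners into one record type. It does not use Allure's actual schema or API: the `UnifiedResult` class, both input formats, and the converter functions are invented purely for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class UnifiedResult:
    """One shared shape for a test result, whatever framework produced it."""
    name: str
    status: str  # "passed" or "failed"
    language: str
    trace: Optional[str] = None


def from_python_record(record: dict) -> UnifiedResult:
    # Hypothetical Python-side format: {"nodeid", "outcome", "longrepr"}
    return UnifiedResult(record["nodeid"], record["outcome"], "python",
                         record.get("longrepr"))


def from_js_record(record: dict) -> UnifiedResult:
    # Hypothetical JavaScript-side format: {"title", "state", "err"}
    return UnifiedResult(record["title"], record["state"], "javascript",
                         record.get("err"))


# Made-up results from a backend run and a frontend run
python_run = [{"nodeid": "test_create_user", "outcome": "passed"}]
js_run = [{"title": "shows signup form", "state": "failed",
           "err": "Error: button not found"}]

results = ([from_python_record(r) for r in python_run]
           + [from_js_record(r) for r in js_run])

# Once everything has the same shape, one report can cover both languages
summary = Counter(r.status for r in results)
failed = [r for r in results if r.status == "failed"]
print(summary, failed)
```

After normalization, aggregated counts and failure details come from one list, regardless of which language each test was written in.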

Conclusion

If you want effective lines of communication across professional boundaries, you need common ground, something for people to talk about. Reports are great for this - they condense complex information so that anyone can read and understand it. And as people strengthen the horizontal part of their T shape, the costs of communication fall, and organizational goals become much clearer for everybody.