SuperJob UI regression and acceptance testing automation. Tools and Solutions

Ruslan Akhmetzianov

Oct 06 2021

Anton Shkredov, QA Lead at SuperJob, an online recruitment platform, tells the story of how the team went from manual-only testing to full automation of UI testing. Dive in to learn how manual tests held up fast-paced pipelines, how the automation process started, what tools the SuperJob QA team used, and what pitfalls they faced. This is a translated post from the SuperJob tech blog.

Stage 1: How it started

The SuperJob website has more than 12 million monthly users, the database contains more than 40 million resumes, and every year there are 150,000 new employers. SuperJob is a data-intensive service. The testing team ensures service quality on all SuperJob platforms (Web, Mobile Web, iOS, Android), but this post is devoted to the Web.

Several years ago, SuperJob UI testing was manual only. Releases went out once a week, following a manual acceptance run. Could the team imagine that one day they wouldn’t need to check releases manually?

Each week, a tester on duty was appointed and made responsible for the release. The dev team deployed the release to staging so that the tester could execute the acceptance run in TestRail. It usually took two to three days to give the go-ahead, and all the features also had to be tested manually before they reached staging.

The team decided that the release cycle had to be shortened, which meant minimizing the volume of routine tasks. That meant accepting the need for test automation, and this is where the path from manual to automated testing started.

Stage 2: Acceptance run automation and daily releases

The first test automation tools trialed were WebDriver, the best-known tool on the market at the time, and Codeception, chosen to keep the automation and backend tech stacks in sync. The reason for that was simple: the testing team had no experience in automation, so they needed the help of the developers' team to build the testing architecture or code a complex function.

It took about six months to build the first solution after choosing the programming language and tools:

- one of the testers took external PHP programming courses

- the team automated acceptance testing

- the pace for daily releases was achieved

- manual testing of the sprint tasks was moved to the release branch, leaving only the new features for the manual team

A single-threaded test run of 150 tests took 30 minutes. After manually checking the results, the QA engineer gave the go-ahead for the release.

Stage 2 pitfalls: Manual testing leftovers and high pace demotivation

At this point, some issues still needed solving. The fully automated acceptance run covered only the business-critical features, leaving the rest of the website to manual testing.

Manual checks were time-consuming and incompatible with fast feedback, and sometimes the testers found bugs almost at the time of release. If the bug was critical, the release could be postponed or even canceled. All this increased the level of stress in the team.

Though the amount of routine work went down, manual testers were unhappy due to the additional tension caused by the increased release frequency. Previously, a tester could take time going through tasks, but with daily releases the checks became hurried.

Consequently, given the business requirements, the remaining manual checks, and the monotony of the work, the decision was made to go for full-cycle test automation, which takes us to the current, ongoing automation stage.

Stage 3: Regression testing automation, new tooling, and results

Regression testing called for new tools. The team settled on Playwright + CodeceptJS + Allure TestOps for three reasons:

- The Codeception framework development stagnated as its maintainer switched to CodeceptJS development

- The explosive growth of JavaScript and JS testing tools

- Puppeteer was adopted first, but Playwright turned out to be more appropriate

Some historical background: Google's Puppeteer drives the browser through DevTools. The tool helped us a lot with complex automation tasks, e.g. checking the requests a page sends to analytics services. With Puppeteer, this could be done with a single method inside the automated test class, while WebDriver required non-trivial sorting through HAR logs after the test suite had run. Later, the Puppeteer team moved to Microsoft and continued developing the tool as Playwright, making it cross-browser and more universal.
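To make the analytics check more concrete, here is a minimal sketch of collecting matching requests through Playwright's `request` page event. It is not SuperJob's actual code: the helper name `trackRequests` and the `/collect` URL fragment are assumptions made for the example. The helper only needs an object with Playwright's `page.on` / `page.off` event API.

```javascript
// Hypothetical helper: records every outgoing request whose URL contains
// `urlPart`. `page` is expected to be a Playwright Page.
function trackRequests(page, urlPart) {
  const matched = [];
  const onRequest = (request) => {
    // request.url() and request.method() are part of Playwright's Request API.
    if (request.url().includes(urlPart)) {
      matched.push({ method: request.method(), url: request.url() });
    }
  };
  page.on("request", onRequest);
  return {
    matched,
    // Unsubscribe once the interesting part of the test is over.
    stop: () => page.off("request", onRequest),
  };
}

// Possible usage inside a CodeceptJS scenario (the "/collect" fragment is assumed):
//   await I.usePlaywrightTo("track analytics requests", async ({ page }) => {
//     const tracker = trackRequests(page, "/collect");
//     // ...perform the actions that should trigger analytics...
//     tracker.stop();
//   });
```

When a test only needs to wait for a single request, Playwright's `page.waitForRequest` may be a simpler option than collecting requests by hand.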

With this toolchain, regression testing became fully automated: all 2,500 manual tests in TestRail were automated. The suite takes 30 minutes in 15 parallel threads, which makes two releases a day possible.
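As a back-of-the-envelope check of these figures, the sketch below works out what they imply per thread and per test. It assumes the tests are spread evenly across threads, which real suites rarely achieve, and the function name is made up for the illustration.

```javascript
// Rough estimate derived from the numbers in the post; assumes an even split
// of tests across threads and ignores setup and teardown overhead.
function estimateRun(totalTests, threads, wallClockMinutes) {
  const testsPerThread = Math.ceil(totalTests / threads);
  // Total machine time divided by the number of tests.
  const secondsPerTest = (wallClockMinutes * 60 * threads) / totalTests;
  // How long the same suite would take in a single thread.
  const sequentialHours = (wallClockMinutes * threads) / 60;
  return { testsPerThread, secondsPerTest, sequentialHours };
}

const estimate = estimateRun(2500, 15, 30);
console.log(estimate);
```

Under that assumption, each thread handles about 167 tests at roughly 10.8 seconds per test, and a single-threaded run would take about 7.5 hours. CodeceptJS ships a `run-workers` command for parallel runs; the post does not say which mechanism SuperJob uses.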

Take a look at a test case example:

```javascript
const I = actor();

Feature("Candidate Auth").tag("@auth");

let user;

Before(async () => {
  user = await I.getUser(); // Create candidate via test API
});

// header, mainPage and authPage are page objects injected by CodeceptJS
Scenario("Authorize candidate on main page", async ({ header, mainPage, authPage }) => {
  I.amOnPage(mainPage.url);
  I.click(header.button.login);
  authPage.fillLoginFormAndSubmit(user.email, user.password);
  await I.seeLoggedUserInterface();
}).tag("@acceptance");
```
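The `authPage` object used in the scenario is a page object. The post does not show it, so the following is only a sketch of what such an object might look like. The selectors and the factory function `createAuthPage` are assumptions; in a real CodeceptJS project, `I` would normally be obtained with `inject()` rather than passed in as an argument.

```javascript
// Hypothetical page object for the login form. `I` is the CodeceptJS actor;
// it is passed in explicitly so the sketch has no framework dependency.
function createAuthPage(I) {
  return {
    // Assumed selectors; the real markup is not shown in the post.
    fields: {
      email: "input[name=login]",
      password: "input[name=password]",
    },
    submitButton: "button[type=submit]",

    // Fills both fields and submits the form using standard CodeceptJS steps.
    fillLoginFormAndSubmit(email, password) {
      I.fillField(this.fields.email, email);
      I.fillField(this.fields.password, password);
      I.click(this.submitButton);
    },
  };
}
```

Keeping selectors in one place like this means a layout change is fixed once in the page object instead of in every test that logs in.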

Releases no longer require any manual checks. The only things tested manually are new features (new interfaces or business logic) that have yet to be automated. Everything else, including bug fixes and minor enhancements, is tested automatically in a TeamCity pipeline.

Now, with two releases and automation, the process looks like this:

- The first release comes at noon with a feature freeze at 8 AM

- The second release comes at 4:30 PM with a feature freeze at 2 PM

- For each release, a dev branch with all the code changes is automatically generated

- Within 40 minutes, the test run result is posted to a Slack channel shared by the developer and the tester on duty

- If any tests fail, the tester analyzes the reasons for that

- In case of a bug, the tester transfers the task to the developer

- If the test case is obsolete, the tester updates it

Twice a day, between the releases, the testing team runs the regression test suite to ensure that the dev environment works fine and won't bring any errors before the noon release.

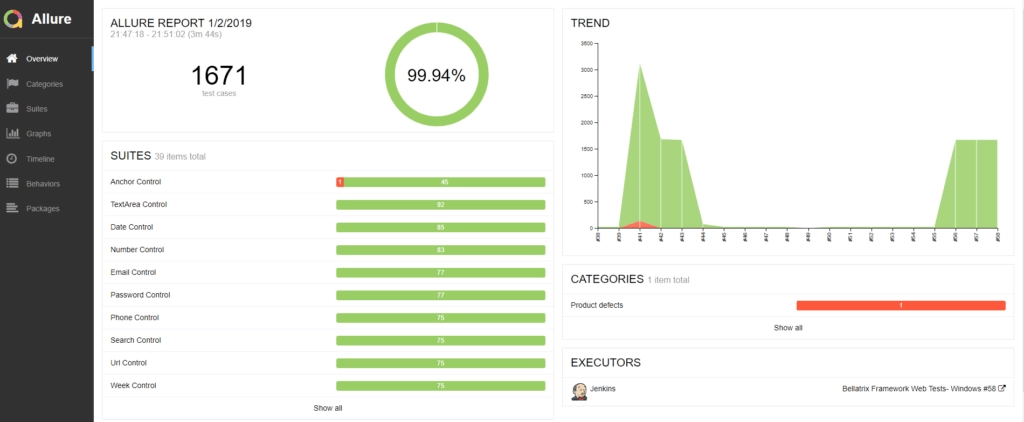

An Allure Report result example

New tools benefits

Playwright

All the tools we adopted brought some expected and unexpected benefits! Using Playwright instead of WebDriver increased test execution speed by 20% without any internal optimizations. Take a look at this awesome article that compares Puppeteer, Selenium, and Playwright.

Another killer feature of Playwright is cross-browser testing. Puppeteer was not a cross-browser tool and configuring WebDriver tests for various browsers was painful and constantly unstable. With Playwright, a Chrome test easily works for Firefox and Safari.
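In CodeceptJS, the browser engine is a setting of the Playwright helper, so the same tests can be pointed at another engine by changing one value. The sketch below shows one way such a configuration could be generated; it is an assumption, not SuperJob's actual setup, and the base URL and function name are placeholders. Note that Playwright names its engines `chromium`, `firefox`, and `webkit` (the engine behind Safari).

```javascript
// Engine names accepted by Playwright.
const SUPPORTED_BROWSERS = ["chromium", "firefox", "webkit"];

// Hypothetical helper that builds the `helpers` section of a CodeceptJS config.
function playwrightHelperConfig(browser) {
  if (!SUPPORTED_BROWSERS.includes(browser)) {
    throw new Error(`Unsupported browser: ${browser}`);
  }
  return {
    Playwright: {
      browser,                     // which engine runs the tests
      url: "https://example.test", // placeholder base URL
      show: false,                 // run headless
    },
  };
}

// Possible use in codecept.conf.js:
//   exports.config = {
//     tests: "./tests/*_test.js",
//     helpers: playwrightHelperConfig(process.env.BROWSER || "chromium"),
//   };
```

With a setup like this, a Safari-specific build only needs to set the environment variable to `webkit`; the test code itself stays the same.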

Screenshot testing became the icing on the cake. Playwright can take both full-page screenshots and screenshots of specific elements to be used for reference. Allure TestOps in turn has a convenient reference-result comparison interface.

An example of a failed screenshot test

Automated screenshot checks allow us to automatically catch layout bugs. The whole release is tested via automated tests, and screenshot testing works as another layer of quality checking.
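For illustration, the two kinds of screenshots mentioned above map onto Playwright's `page.screenshot` and `locator.screenshot` calls. The sketch below only captures the images; comparing them against references is a separate step. The function name, file names, and directory layout are assumptions.

```javascript
// `page` is a Playwright Page. Captures a full-page screenshot and a
// screenshot of a single element, and returns the paths that were written.
async function captureScreenshots(page, elementSelector, dir) {
  const fullPagePath = `${dir}/full-page.png`;
  const elementPath = `${dir}/element.png`;

  // fullPage: true captures the whole scrollable page, not just the viewport.
  await page.screenshot({ path: fullPagePath, fullPage: true });

  // A locator screenshot is clipped to the matched element.
  await page.locator(elementSelector).screenshot({ path: elementPath });

  return [fullPagePath, elementPath];
}
```

Element-level screenshots tend to be less fragile than full-page ones, because unrelated changes elsewhere on the page do not affect them.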

Of course, this approach requires extra work whenever the interface changes. For such cases, we maintain a special TeamCity build that updates all the reference screenshots. We run the build on a branch that requires a screenshot update, and after the test suite is executed successfully, the new screenshots are committed to the branch.

Allure TestOps

Allure TestOps also turned out to be a beneficial tool to adopt.

First of all, it gathered all our builds in one place, which became necessary once automation led to maintaining multiple builds: acceptance builds, regression testing builds, Safari-specific builds, etc.

Second, Allure TestOps replaced TestRail. We needed to gather both manual and automated tests in a single window to manage results, quality metrics, and documentation.

Third, the Allure Live Documentation feature automatically updates the documentation of every modified automated test after each successful TeamCity test run. Now, if a tester on duty fixes an automated test that failed due to business logic changes, the documentation gets updated automatically. The feature provides real-time documentation updates with zero effort and time overhead.

The failed screenshot test comparison from the original post

Internal Training

Implementing automated testing opened new opportunities for our engineers' training. At the second stage, where acceptance testing was automated, just one automation engineer was needed, but further scaling and automation required more engineers. Many team members were also enthusiastic about growing as automation QAs.

Consequently, an internal three-month course for manual testers was developed. The course introduces testers to automated tests development patterns, JS development basics, and all the necessary tools. The structure of the SuperJob course is as follows:

- Tooling review: CodeceptJS, Playwright

- Tooling setup and configuration: CodeceptJS, Playwright

- Test Case development and running

- Failed tests analysis and debugging

- CI test runs automation via TeamCity

- Test run reporting with Allure

- Automation testing design patterns

In the end, each tester has their own GitHub automation repo.

Benefits and next steps

Fast feedback loop: the regression testing duration went from 3 days to 30 minutes!

Coverage: no tester can execute 2,500 tests in half an hour, and with an automated approach the number of tests will keep growing.

Time-to-market: the release frequency went from once a week to twice a day, so features reach users sooner.

Engineering expertise: each tester at SuperJob has the opportunity to grow towards automation QA. The opportunity itself is a good motivator for the enthusiasts in our QA team, and we can promote rapidly growing engineers to a new grade.