Shared context vs shielded context: testers and developers writing tests together

Natalia Poliakova, Mikhail Lankin

Nov 24 2024

How do you get testers and developers to cooperate on tests?

If developers help out with tests at all - that's already a good start. But even then, there are differences in approach.

It is often said that developers have a creative mindset, while testers have a destructive one. A tester is trained to think as a picky and inquisitive user; their view of the system is broader. A developer's expertise is in architecture; their view is deeper.

When writing tests, this translates into another difference: developers want to get the code running and get a green light. On the other hand, testers are consumers of tests, so they want quite a few things beyond that: tests that are easy to use and understand.

However, this does not mean we fight like cats and dogs. Writing tests helps developers write code that is modular, maintainable, and easier to understand. This is why devs and QA want fundamentally the same thing - but with different flavors.

In this article, we would like to explore this difference and share our experience of overcoming it. First, we will elaborate on the difference between a tester's and a developer's approach. Then, we will show that these differences are not insurmountable.

Different approaches to testing

Test code is different from production code in several ways:

- Using tests means reading them (if they fail, and you need to dig into the cause of failure). That puts additional emphasis on readability.

- Test code is not checked by tests, so it needs to be simple.

- People who read tests might not have as much coding experience as developers, which makes readability and simplicity even more important.

Developers might not be used to this environment. Let us illustrate this with an example.

Suppose we have a JavaScript function that checks if an element is visible or not:

function checkElement(visible = true) {

// if the element should be visible, pass nothing

// if the element should be invisible, pass false

}

Calling this function in a test looks like this: checkElement(). A developer might not see an issue with this, but a tester would ask - how do you know what we are checking for? It's not apparent unless you peek inside the function.

A much better name would be "isVisible". Also, it's best to remove the default value. If we did that, the function call would look like this: isVisible(true). When you read it in a test, you know immediately that this check passes if the element is visible.
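Here is a minimal sketch of what the renamed check could look like. The `saveButton` object is a hypothetical stand-in for a page element (a real test would query the page or a browser driver instead), and the error messages are our own illustration:

```javascript
// Hypothetical stand-in for a page element; not part of any real framework.
const saveButton = { hidden: false };

// No default value: every call site has to state the expected visibility.
function isVisible(expected) {
  if (typeof expected !== 'boolean') {
    throw new TypeError('isVisible expects an explicit true or false');
  }
  const actual = !saveButton.hidden;
  if (actual !== expected) {
    throw new Error(
      `Expected element visibility to be ${expected}, but it was ${actual}`
    );
  }
}

isVisible(true); // passes, because the element is visible
```

Calling isVisible() with no argument now fails loudly instead of silently assuming "visible", so the expectation always appears in the test itself.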

How do you make sure that everyone follows requirements such as this one?

- You can nag people - in a respectful manner - until everyone follows the rules. Unfortunately, this is sometimes the only way.

- Other times, you can shield developers from unnecessary context, just as they shield you when they hide the complexity of an application behind a simple and testable interface.

- This often comes in the form of automating the rules you want everyone to follow.

Let us unpack the last two points with examples.

Shielding from context

Test design

End-to-end test design is not an easy task. Developers are the ones best positioned to design (and write) unit tests; however, with frontend tests, test design becomes more difficult. Rather than emulating the system's interaction with itself, frontend tests emulate its interaction with a user.

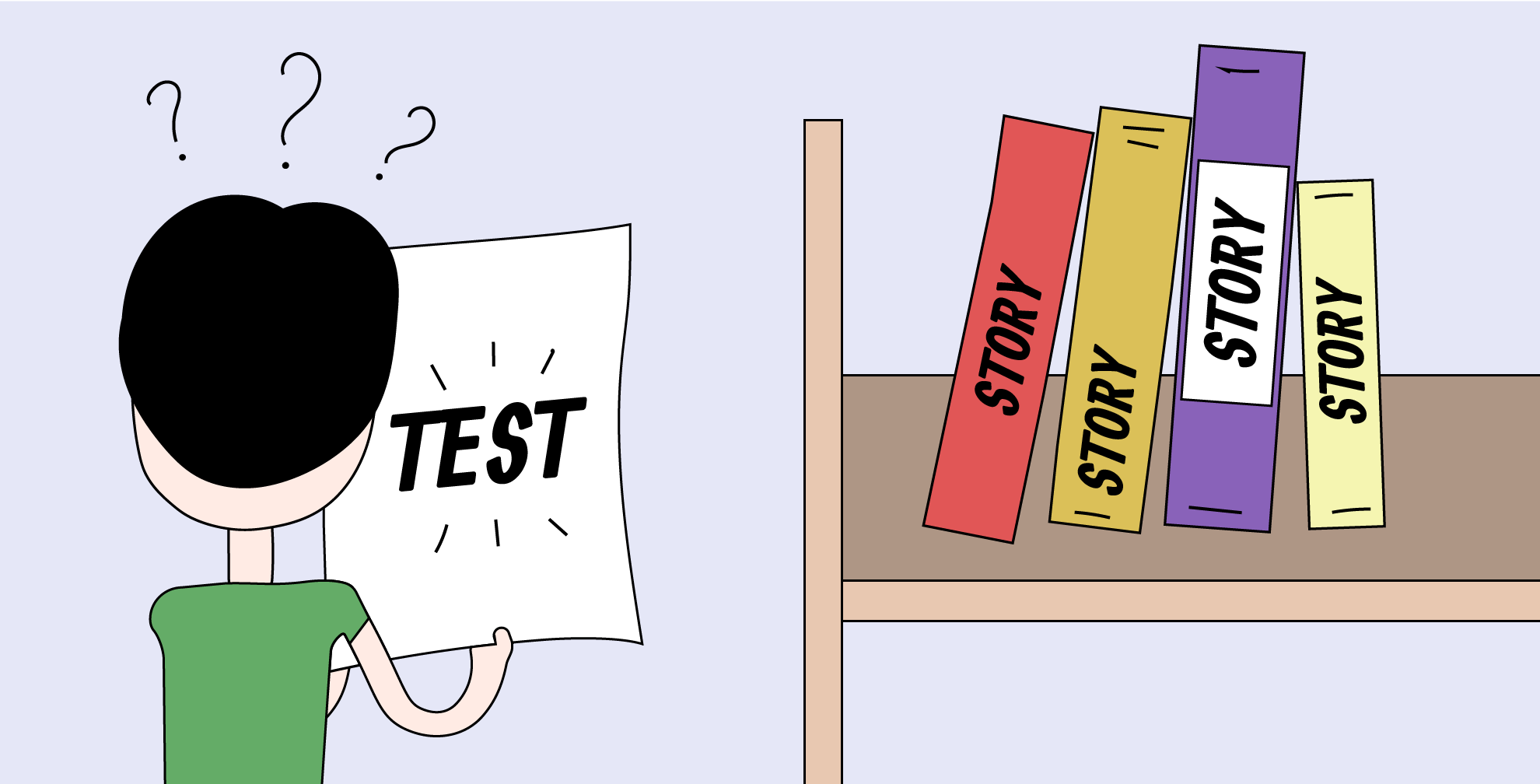

In our experience, it is very stressful for developers to write UI tests from scratch - it's always hard to figure out what you should test for. Because of this, the process is usually split into two parts: testers write the test documentation, which is then used as a basis for automated tests. This way, developers bring their code expertise to the table but are shielded from user-related context.

Features and stories

We've noticed that developers among us tend to have trouble assigning tests to features and stories. This isn't a big surprise: unless you're working on a very small project, different features have different developers, and it's hard for everyone to keep track of the entire thing.

To solve this problem, we've simply written a fixture that assigns features to tests based on which folder the test resides in; the subfolder then determines the story, etc. So when you're doing code review, you don't have to nag people about filling in the metadata, you just say: could you please move the test to folder "xyz"? So far, everyone seems happy with this arrangement.
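The core of such a fixture can be sketched roughly as follows. This is not our actual fixture: the `tests/<feature>/<story>/` layout, the `TESTS_ROOT` constant, and the `labelsFromPath` name are assumptions made for illustration, and paths are assumed to use forward slashes.

```javascript
// Hypothetical layout: tests/<feature>/<story>/<file>.spec.js
const TESTS_ROOT = 'tests';

// Derives feature and story labels from the location of a test file.
function labelsFromPath(testFilePath) {
  const segments = testFilePath.split('/').filter(Boolean);
  const rootIndex = segments.indexOf(TESTS_ROOT);
  if (rootIndex === -1) {
    throw new Error(`${testFilePath} is not inside "${TESTS_ROOT}/"`);
  }
  // Folders between the root and the file name; the file name itself is dropped.
  const folders = segments.slice(rootIndex + 1, -1);
  const [feature, story] = folders;
  if (!feature) {
    throw new Error(`${testFilePath} must live in a feature folder`);
  }
  // A test placed directly in a feature folder has no story.
  return { feature, story: story ?? null };
}

console.log(labelsFromPath('tests/checkout/payment/card.spec.js'));
// { feature: 'checkout', story: 'payment' }
```

The returned labels would then be handed to whatever reporting API the project uses, so moving a file is all it takes to change its metadata.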

Data-testid

Another metadata field we've had trouble with is data-testid, a unique identifier that allows you to quickly and reliably find a component when testing - as long as these IDs are standardized.

We have devised a specific format for data-testid, and we've been trying to get people to use that format for a year and a half now - without success. The poorly-written IDs are usually discovered in code review when everything else already works. At that stage, correcting IDs feels like a formality, especially when plenty of other tasks need a developer's attention.

And it's not like developers object to the practice on principle - quite the contrary. They keep asking how to write these IDs properly. Both sides are working on the same thing and want it done right - it's just that there are hiccups in the process.

Well, it turns out someone made a linter for data-testids - which means we're not the only ones suffering from this. It ensures that data-testid values all match a provided regex.
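The linter's own configuration is not reproduced here, but the check it performs can be sketched in a few lines. The kebab-case pattern below is a made-up format (not the one we actually use), and `findInvalidTestIds` is a hypothetical helper, not part of the linter:

```javascript
// Hypothetical format: lowercase kebab-case, e.g. "save-button".
const TEST_ID_FORMAT = /^[a-z][a-z0-9]*(-[a-z0-9]+)*$/;

// Returns every data-testid value in the markup that does not match the format.
function findInvalidTestIds(markup, format) {
  const attribute = /data-testid="([^"]*)"/g;
  const invalid = [];
  for (const match of markup.matchAll(attribute)) {
    if (!format.test(match[1])) {
      invalid.push(match[1]);
    }
  }
  return invalid;
}

const markup = `
  <button data-testid="save-button">Save</button>
  <button data-testid="CancelBtn">Cancel</button>
`;

console.log(findInvalidTestIds(markup, TEST_ID_FORMAT)); // [ 'CancelBtn' ]
```

A real linter works on the parsed source rather than on raw strings, but the principle is the same: the format lives in one regex, and every ID is checked against it automatically.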

We've added this linter for all commits and pull requests, and it fixed the problem. This is not surprising: our devs have also set up linters for testers to check the order of imports, indentation, and such, and we know this is a great practice.

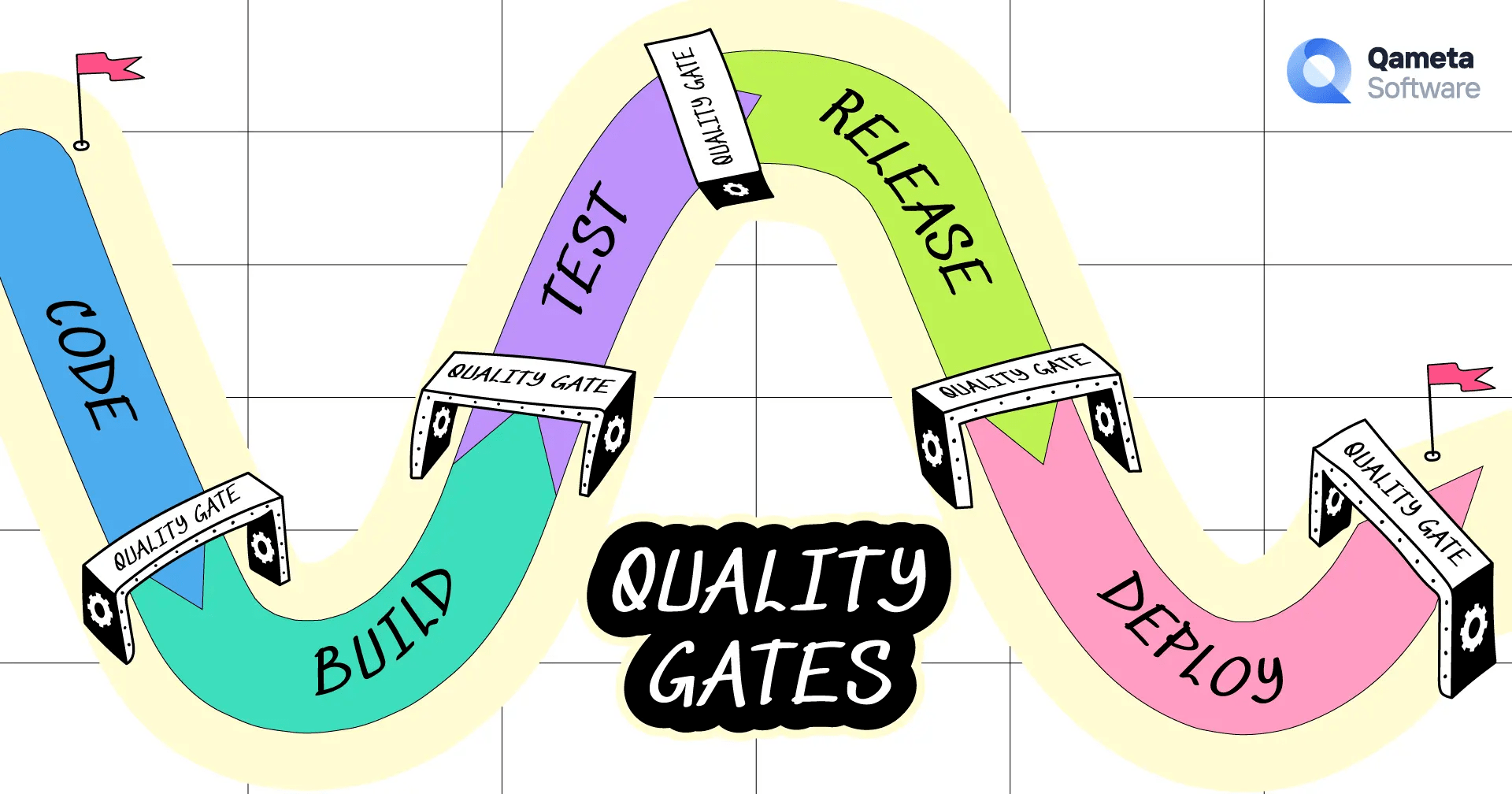

Still, the result is very telling. When you've already written all the code and have delivered your tests, fixing something like IDs seems like a formality. But when you have an automatic rule that tells you how to write the IDs from the start, it becomes a natural part of the process. Shift-left in action!

Being a full-stack tester helps

To make all these solutions work, testers have to be able to work with code and infrastructure.

Writing automated tests together requires both developers and testers to step outside their more "traditional" responsibilities. A developer is forced to assume the point of view of a user and look at their code "from the outside". A tester is forced to write code.

And this is a good thing. We've talked about the advantages for developers elsewhere; for testers, having more technical knowledge of the system they're working on allows them to use their superpowers more fully.

This is something we've seen first-hand when working on Allure TestOps. You might have an excellent technical implementation of certain functionality; a tester will take a look at it and tell you that:

- this is very costly to write

- this will only confuse the user

- there is a much easier way to achieve what the user wants

Having a full-stack tester check the plans of analysts and developers can save you a lot of time and effort.

Conclusion

Working together on tests requires testers and developers to put in effort and adapt. The differences in expertise can be used to shield each other from unnecessary details.

Testers are the most active consumers of tests, so naturally, they tend to want more from them. Automating rules and requirements is a great way to make them work.

Testers will be able to apply their skills most efficiently if they have full-stack expertise.