Allure TestOps is a big tool that does a lot to help QA and Dev teams with testing. Sometimes it takes a lot of words and screenshots to show how it all works! We thank the author of the original story, Sergey Potanin, QA Automation team lead at Wrike, and the Wrike team for letting us publish this awesome story on our blog.

What is Allure?

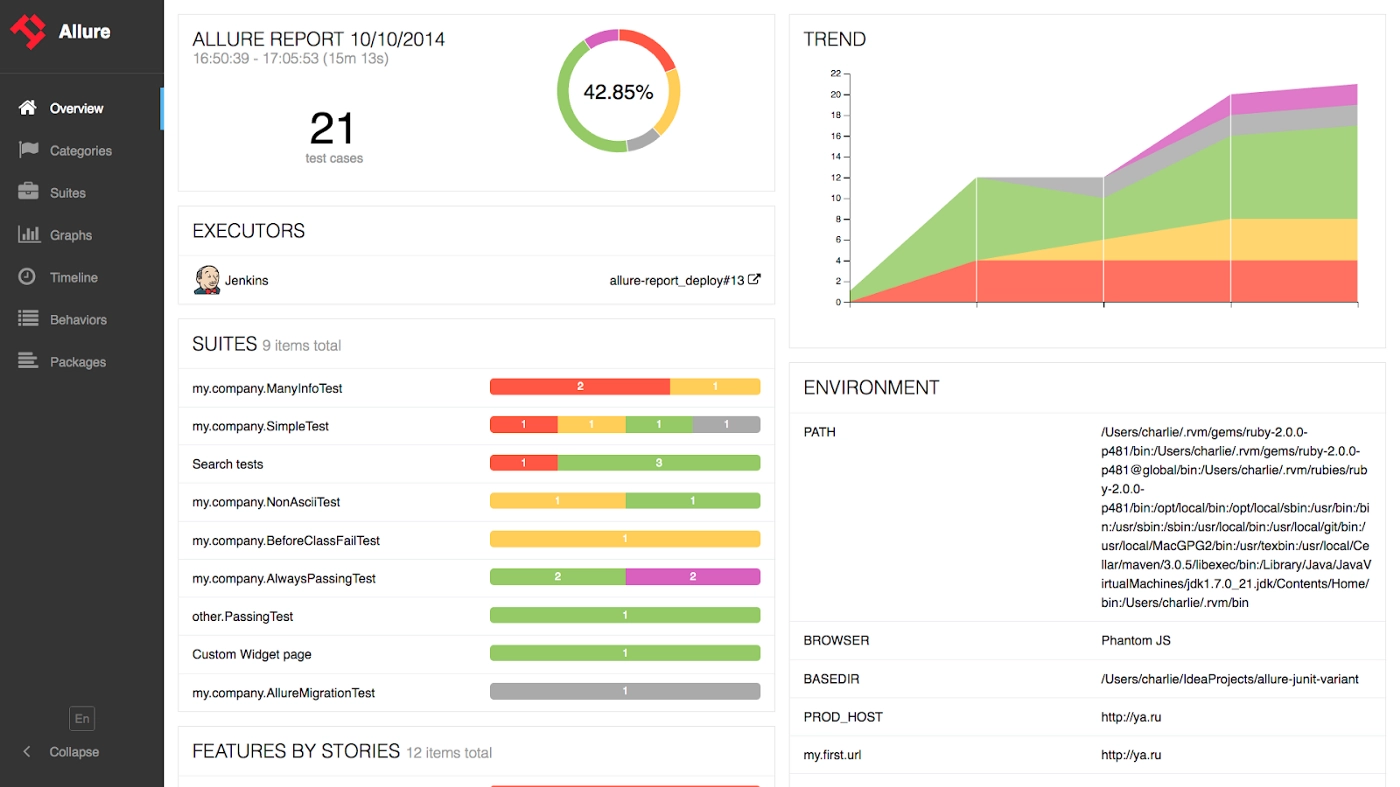

If you’re not familiar with Allure Report, it’s a test reporting tool with many cool features, such as statistics and analytics views, a timeline, grouping of test results by various attributes, and more.

Allure Report main screen

Most Allure users probably work with Allure Report. At Wrike, we also have a deep integration with Allure Server (Allure EE, now mostly known as Allure TestOps).

What problems we wanted to solve with Allure TestOps

Why did we decide to use Allure TestOps? First, writing and maintaining detailed test documentation takes a lot of resources, and it was difficult for us to set aside enough time for it. Today we have about 30,000 Selenium tests; back then we had a bit under 10,000, which is still a lot.

Also, before we adopted Allure, our test documentation was poorly structured. For each test, a QA engineer wrote a short checklist in a Wrike task (we use Wrike as our task tracker). Those checklists were then expanded into detailed test cases in a TMS, a spreadsheet, or another Wrike task.

After that, the detailed test cases had to be automated. Whenever production logic changed, both the autotest and the test case had to be updated, which, again, was extremely time-consuming and felt like duplicate work. In practice, we usually updated only the automated tests and got by with outdated documentation.

Once the tests are automated, we run them in CI (at Wrike we use TeamCity), and each TeamCity build has an Allure report attached to it. Allure reports are great, but for technical reasons we have many TeamCity builds that can run Selenium tests, so the reports weren’t aggregated in one place. That made some tests hard to analyze.

The next problem was test stability. Like many other automation engineers, we suffered from flaky tests. But not every failed test is flaky: some fail because of bugs in the testing environment, or simply because the test needs to be updated. Running such tests not only cost our reviewers time to analyze the failures, but also prolonged the runtime of the test build. It would be better not to run these tests at all and to fix them when the time is right.

And when that time eventually came, we faced another issue: finding test owners. How do we know who’s responsible for a test?

Here are a couple of bad pieces of advice on that:

- Check the Javadoc author. This information loses its relevance over time: the person who wrote the test may no longer know about it, because they moved to a different team or department and are now responsible for another feature.

- Use the commit history. This option is even worse, because someone may have just refactored the code, which makes the latest commit in the file theirs.

Neither option works well in most cases.

How Allure helps us solve the issues

Yes, we solved all the issues mentioned above with Allure’s help! To understand how it works, let’s look into the code. At Wrike we use Java to write automated tests, so the code examples will also be provided in Java.

Here’s an example of one of our simplest automated tests:

```java
// Imports omitted. WrikeClient, the *Steps page objects, @TestCaseId and @TeamExample are Wrike's own code.
@StandardSeleniumExtensions
@GuiceModules(WebTestsModule.class)
@Epic("Workspace")
@Feature("Task list")
@Story("Task creation")
@TeamExample
public class CreateTaskExampleTest {

    @Inject private WrikeClient wrikeClient;
    @Inject private LoginPageSteps loginPageSteps;
    @Inject private SpaceTreeSteps spaceTreeSteps;
    @Inject private TaskListSteps taskListSteps;

    @Test
    @TestCaseId(104082)
    public void testCreateTask() {
        User user = wrikeClient.createAccount(ENTERPRISETRIAL);

        // Each call below is one step of the test case
        loginPageSteps
                .login(user);

        spaceTreeSteps
                .openSpace("Personal");

        taskListSteps
                .createTaskViaEnter("new task")
                .checkTask("new task");
    }
}
```

The test scenario is in the body of the test method, and each method call represents a step in the test case. The code is readable and the scenario is quite simple: create a user account, log in as that user, open the user’s personal space, create a new task in the task list, and check that the task was created and appears in the task list.

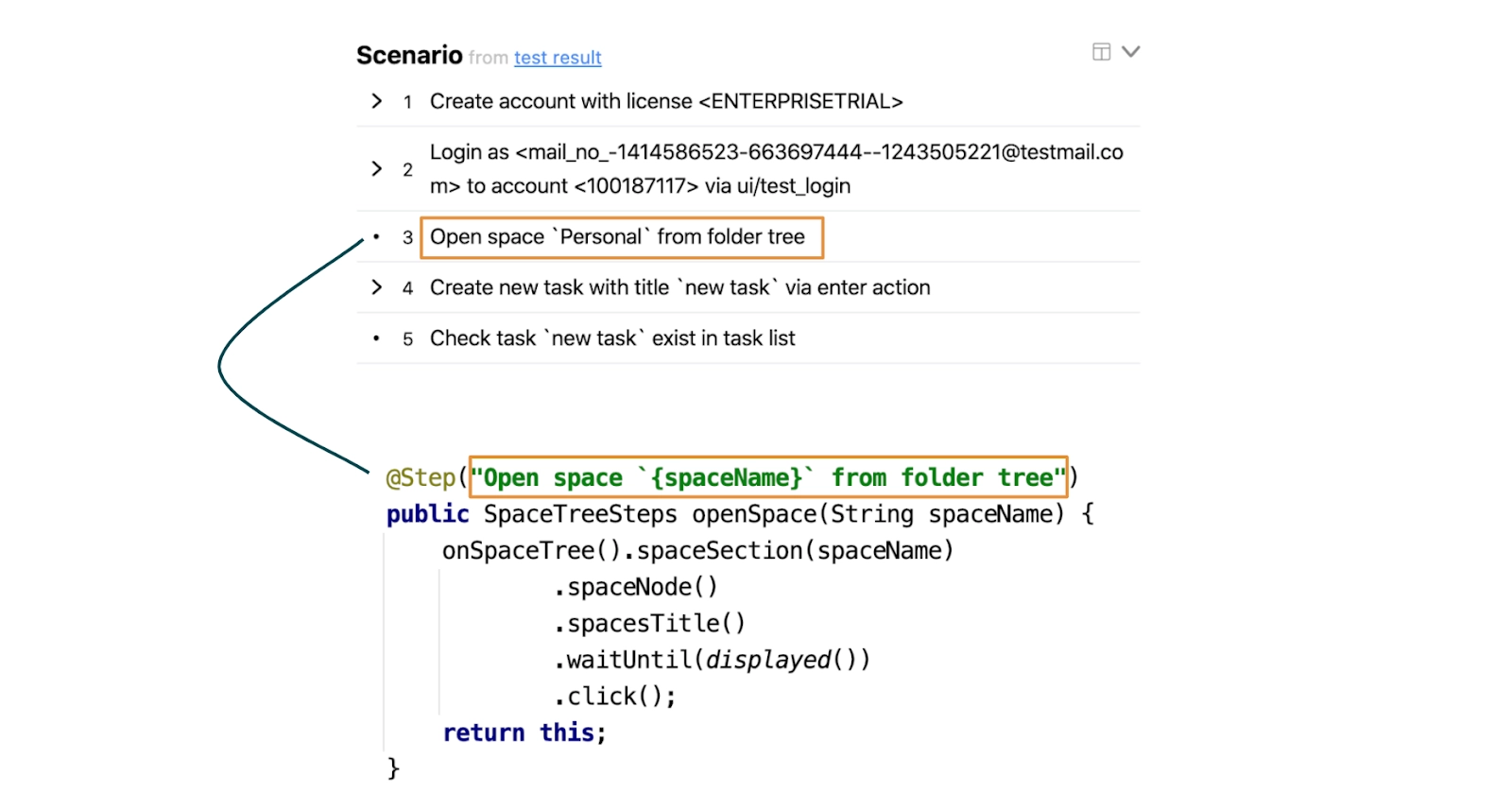

Let’s take a closer look at one of those methods, openSpace:

```java
...

@Step("Open space `{spaceName}` from folder tree")
public SpaceTreeSteps openSpace(String spaceName) {
    onSpaceTree().spaceSection(spaceName)
            .spaceNode()
            .spacesTitle()
            .waitUntil(displayed())
            .click();
    return this;
}

...
```

As with Allure Report, a method needs the @Step annotation to show up as a step in the report. The annotation also supports parameters: the {spaceName} placeholder in the step name is replaced with the actual space name passed to the method.

To find the test in Allure, we need its ID. At Wrike, we set it with our custom annotation, @TestCaseId:
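Allure fills in such placeholders by parameter name when the step runs. The class below is not Allure's code; it is a minimal, hypothetical sketch of that substitution (the names StepNames and render are made up) that shows what the report ends up displaying for a call like openSpace("Personal").

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified, hypothetical stand-in for Allure's step-name templating:
// each {name} placeholder is replaced with the value of the argument called "name".
final class StepNames {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{([^}]+)\\}");

    private StepNames() {}

    static String render(String template, Map<String, ?> args) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String key = m.group(1);
            // Unknown placeholders are kept as-is, so a typo stays visible in the step name.
            String value = args.containsKey(key) ? String.valueOf(args.get(key)) : m.group();
            // quoteReplacement stops '$' or '\' in the value from being treated as group references.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

For example, rendering the openSpace template with spaceName set to "Personal" gives "Open space `Personal` from folder tree", which is the kind of step title the report shows.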

```java
...
@Test
@TestCaseId(104082)
public void testCreateTask() {
...
```

This is a unique identifier for the test and will remain the same, even if the Java method is renamed in the future. Now we can find the test in Allure.
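The story doesn't show how @TestCaseId is defined. A minimal sketch, assuming a runtime-retained annotation with a numeric value (the definition, TestIds and ExampleTests are all hypothetical), shows why the ID survives renames: tooling reads it from the annotation, not from the method name.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.OptionalLong;

// Hypothetical definition: RUNTIME retention makes the ID readable via reflection.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface TestCaseId {
    long value();
}

final class TestIds {
    private TestIds() {}

    // Returns the stable test case ID, independent of the Java method's name.
    static OptionalLong of(Method testMethod) {
        TestCaseId id = testMethod.getAnnotation(TestCaseId.class);
        return id == null ? OptionalLong.empty() : OptionalLong.of(id.value());
    }
}

// Renaming testCreateTask would not change the ID that is reported.
class ExampleTests {
    @TestCaseId(104082)
    public void testCreateTask() {}

    public void helperWithoutId() {}
}
```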

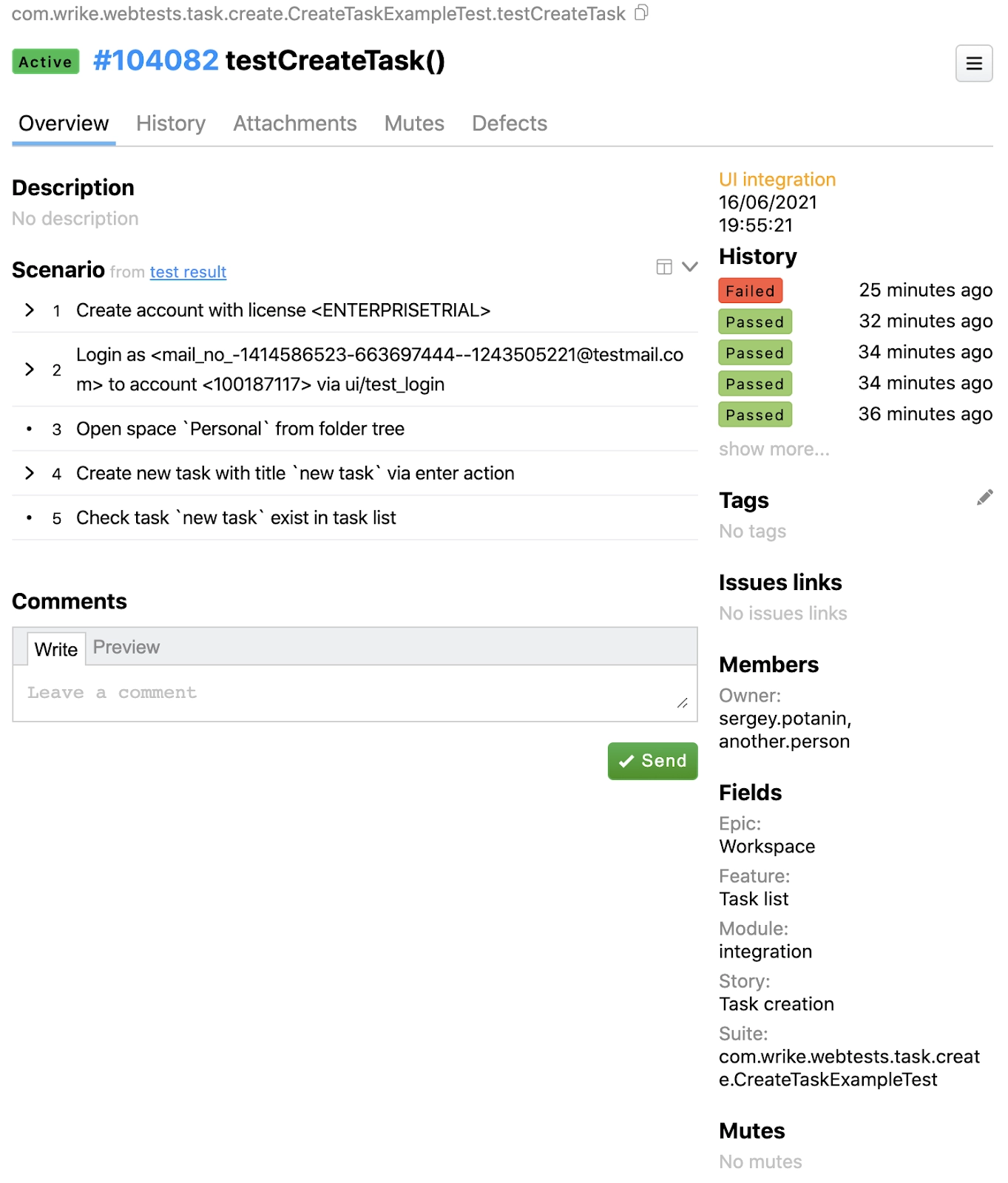

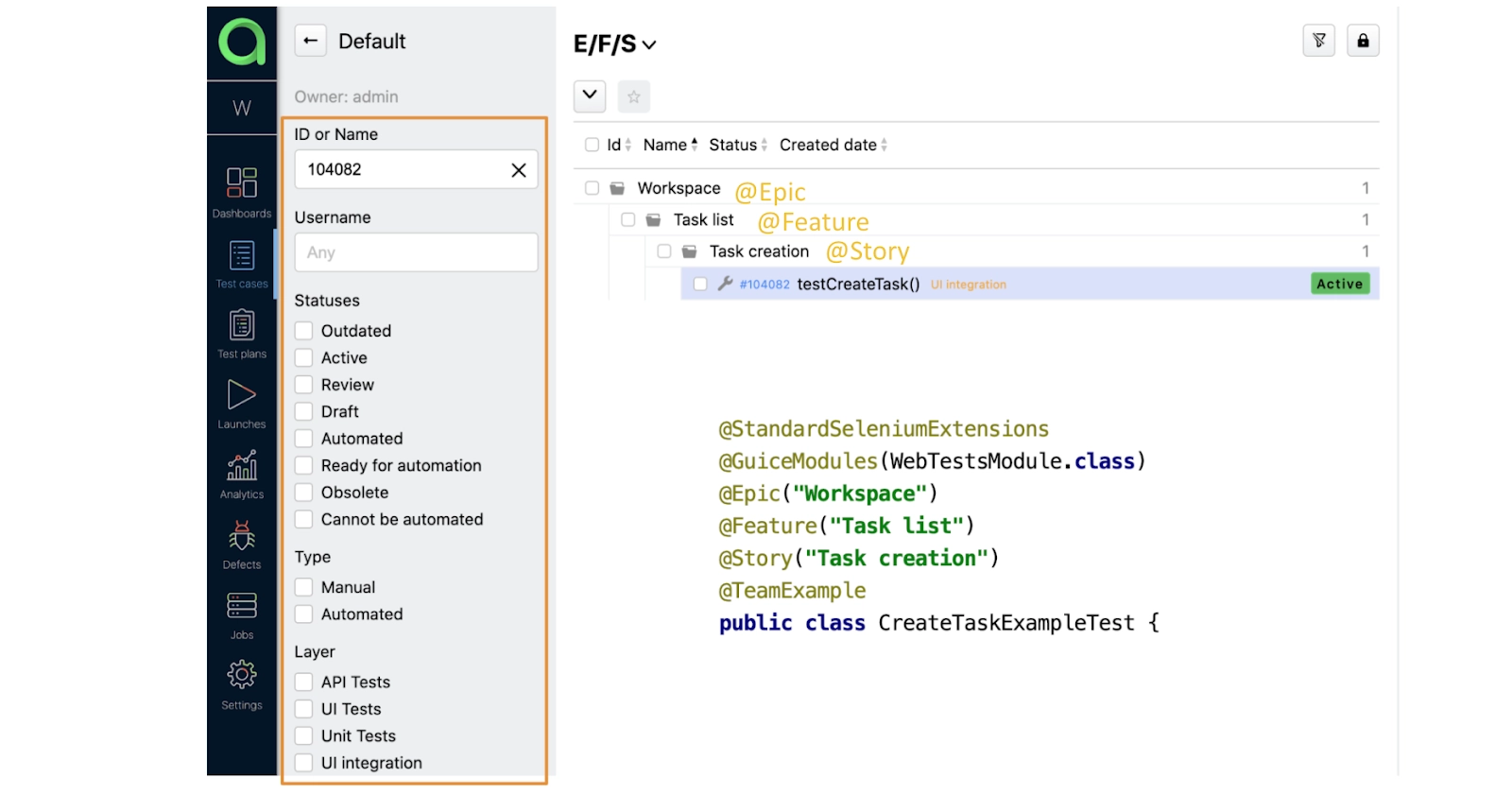

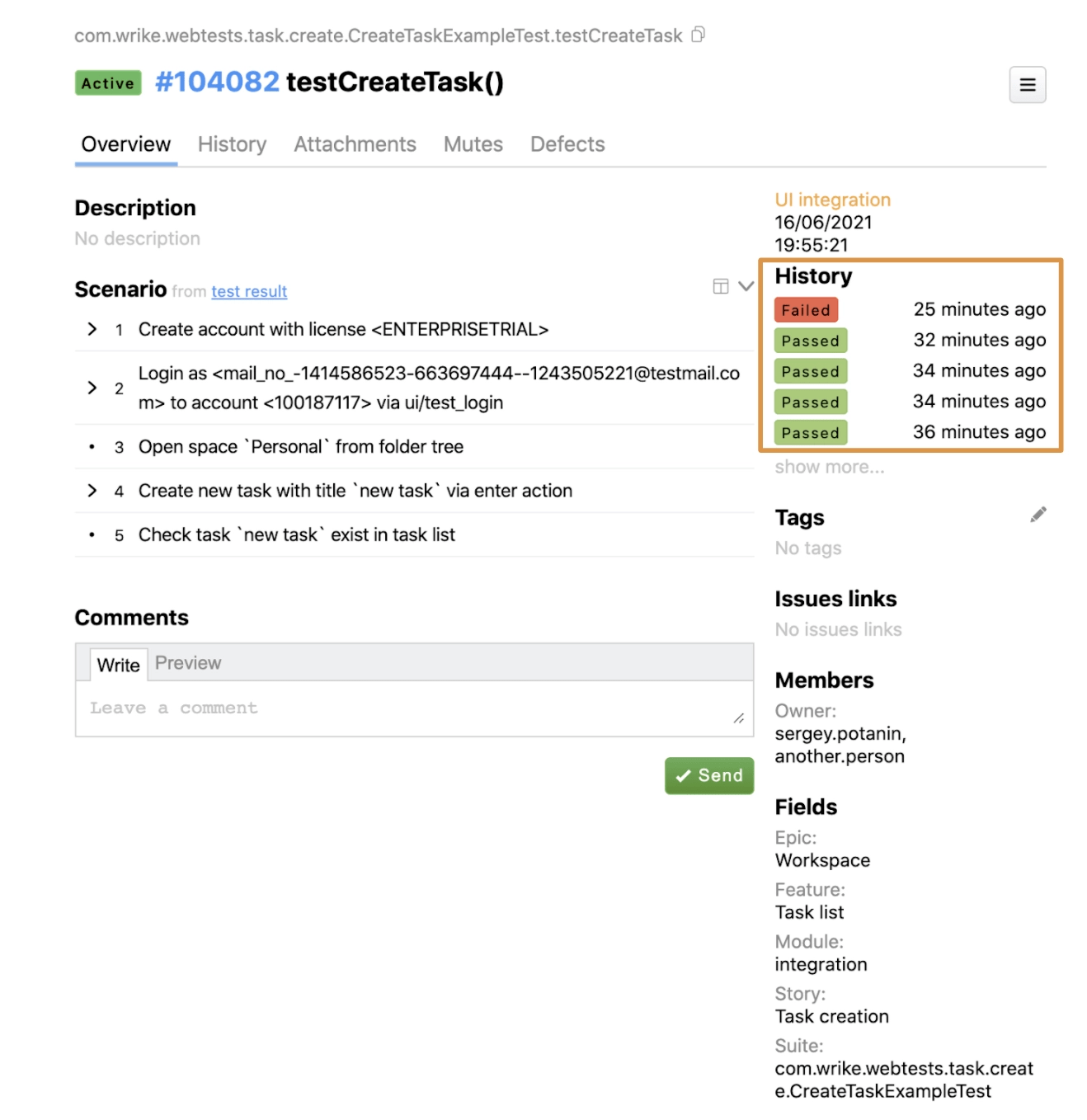

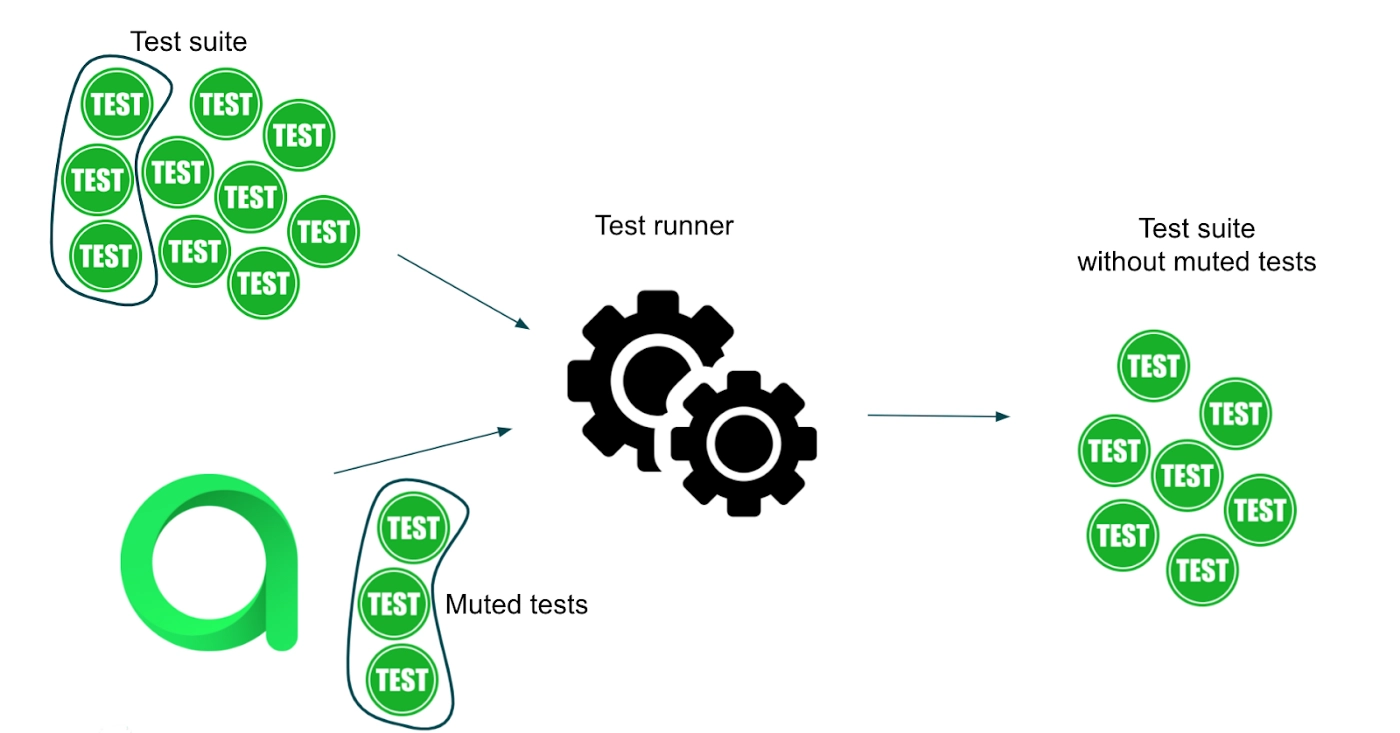

This is the main view of that test in Allure TestOps:

For now we’re interested in the scenario part. Here you can see how the same step looks in the report section and in the code:

Each method is now paired with a readable step description from its annotation. To get the documentation generated automatically, all we have to do is put a @Step annotation on each step method; this is done once, and the step can then be reused in many other tests. When the test logic changes and a test starts using other steps, the documentation is updated automatically along with the autotest. Isn’t that awesome?
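To make the "documentation from annotations" idea concrete, here is a minimal sketch that records a scenario from annotated step calls. It is not how Allure works internally (Allure Java intercepts @Step methods with AspectJ); it uses a JDK dynamic proxy instead, and StepDoc, TaskSteps and ScenarioRecorder are hypothetical names.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for @Step: only the description is kept.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface StepDoc {
    String value();
}

interface TaskSteps {
    @StepDoc("Create a task via Enter")
    void createTask(String name);

    @StepDoc("Check that the task is in the list")
    void checkTask(String name);
}

final class ScenarioRecorder {
    private final List<String> scenario = new ArrayList<>();

    // Wraps a step implementation so each annotated call is logged before it runs.
    @SuppressWarnings("unchecked")
    <T> T wrap(Class<T> stepsType, T target) {
        return (T) Proxy.newProxyInstance(
                stepsType.getClassLoader(),
                new Class<?>[] {stepsType},
                (proxy, method, args) -> {
                    StepDoc doc = method.getAnnotation(StepDoc.class);
                    if (doc != null) {
                        scenario.add(doc.value());
                    }
                    try {
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause(); // rethrow the step's own failure
                    }
                });
    }

    List<String> scenario() {
        return List.copyOf(scenario);
    }
}
```

The point of the design is the same as in the article: the description lives next to the step once, and every test that calls the step gets an up-to-date scenario for free.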

How to structure test scenarios

Now we want not only to have all the tests with up-to-date scenarios, but also to keep them structured.

Let’s get back to our automated test example and look at test class annotation markup:

```java
...
@Epic("Workspace")
@Feature("Task list")
@Story("Task creation")
@TeamExample
public class CreateTaskExampleTest {
...
```

The class annotations @Epic, @Feature, and @Story are the same as in Allure Report, but in the context of Allure TestOps they are even more useful.

This is a search view of Allure TestOps:

The left panel has a lot of attributes for filtering tests; in this example, we found our test by its ID. On the right is the search result, which shows not only the test but also the nested structure built from the Java annotations in the code.

This view also shows how many tests there are for any epic, feature, or story:

For example, we can find how many tests we have for the Task list feature. This shows us which parts of the application are covered better than others.
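Allure TestOps does this grouping for you. As an illustration of the same idea on exported data, here is a minimal sketch that counts tests per epic and feature; the TestLabels record and the Coverage class are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical flattened view of a test's @Epic/@Feature/@Story labels.
record TestLabels(long testCaseId, String epic, String feature, String story) {}

final class Coverage {
    private Coverage() {}

    // Counts tests per "Epic / Feature" pair; TreeMap keeps the result sorted by name.
    static Map<String, Long> testsPerFeature(List<TestLabels> tests) {
        return tests.stream()
                .collect(Collectors.groupingBy(
                        t -> t.epic() + " / " + t.feature(),
                        TreeMap::new,
                        Collectors.counting()));
    }
}
```

Features with noticeably low counts are the first candidates for new tests.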

What the simplified test automation process looks like

Now we can simplify our test automation process to this:

- Create a short checklist in Wrike.

- Write Selenium tests for those cases.

- Run the tests in TeamCity; the results are sent to Allure.

Even when the tests run in several different TeamCity builds, all the results are aggregated in one place: Allure TestOps.

What we can do to stabilize the tests

Now that everything is structured and up to date, let’s see what we can do to stabilize the tests. Like many other automation engineers, at Wrike we retry failed tests to give them a chance to pass. Allure helps us understand what exactly happens when we retry them.

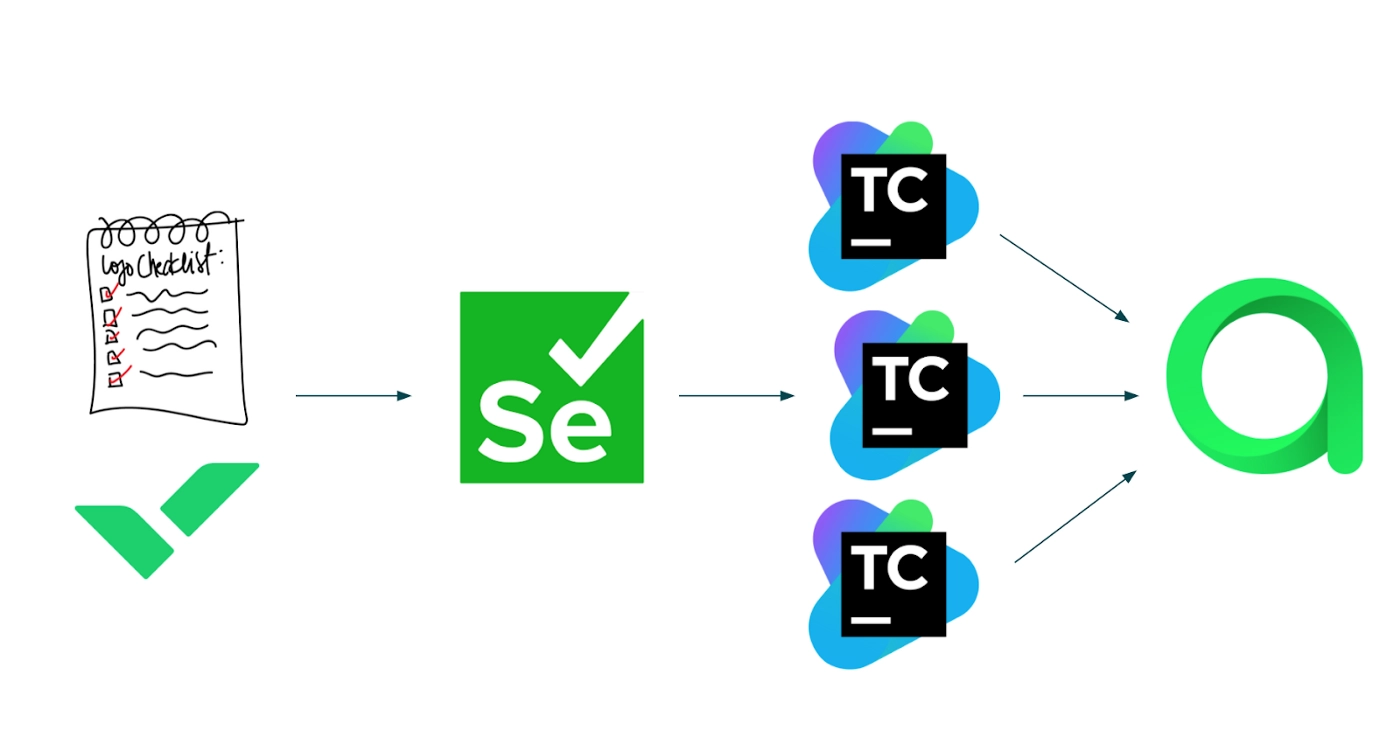

Here is an Allure timeline view of a small test suite from one of our real reports:

To increase test stability, we retry failed tests up to two times during the suite run. The picture shows how two particular tests were retried. On the first retry, one of them passed, but the other failed again. On the second retry, it failed for the third time, which means there must be a real problem with that test. So we only have to deal with one failed test instead of two, which is nice.

Now we can see that some tests are unstable or, as we call them, flaky. It would be useful to collect test stability statistics, analyze them, and identify the most unstable tests so we can fix them first. And Allure helps us here again.
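The retry rule above can be summarized in a small sketch: one initial run plus up to two retries, where a test that turns green only on a retry is flagged as flaky and a test that fails every attempt is treated as a real failure. Retrier, RetryResult and RetryOutcome are hypothetical names, not Wrike's runner or an Allure API.

```java
import java.util.function.BooleanSupplier;

enum RetryOutcome { PASSED, FLAKY, FAILED }

// Outcome of one test within a suite run, plus how many attempts it took.
record RetryResult(RetryOutcome outcome, int attempts) {}

final class Retrier {
    private Retrier() {}

    // Hypothetical retry helper: one initial run plus up to maxRetries retries.
    static RetryResult run(BooleanSupplier test, int maxRetries) {
        for (int attempt = 1; attempt <= maxRetries + 1; attempt++) {
            if (test.getAsBoolean()) {
                // Green on the first try is a pass; green only after a retry marks the test as flaky.
                return new RetryResult(attempt == 1 ? RetryOutcome.PASSED : RetryOutcome.FLAKY, attempt);
            }
        }
        // Red on every attempt: most likely a real problem worth investigating.
        return new RetryResult(RetryOutcome.FAILED, maxRetries + 1);
    }
}
```

With maxRetries set to 2, the two tests from the timeline would come out as FLAKY (2 attempts) and FAILED (3 attempts).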

Let’s go back to the test view in Allure TestOps and look at the History section:

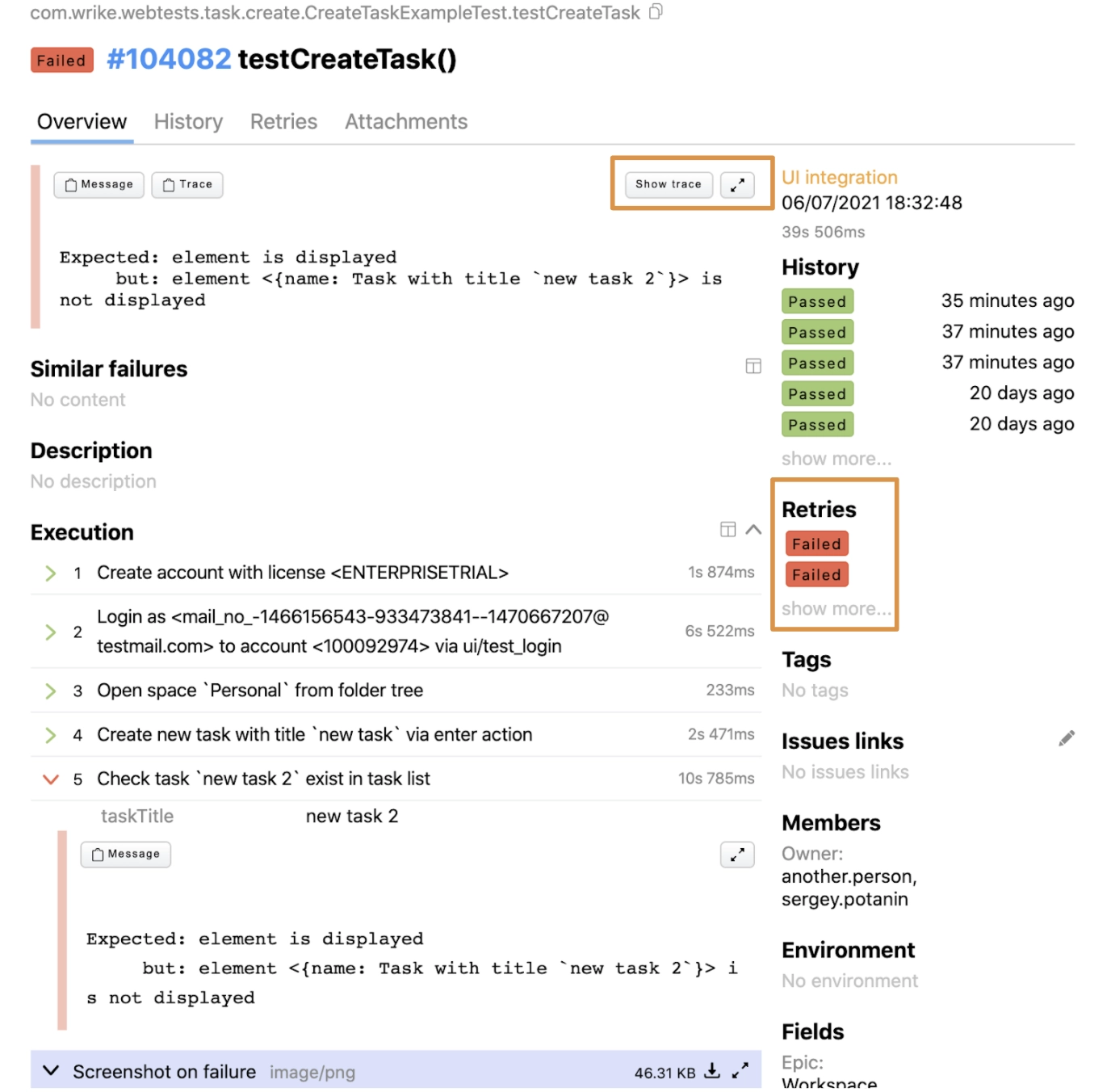

Clicking the latest failed result shows a lot of useful information.

We can see the failed test’s stack trace, and whether the test was retried and how many times:

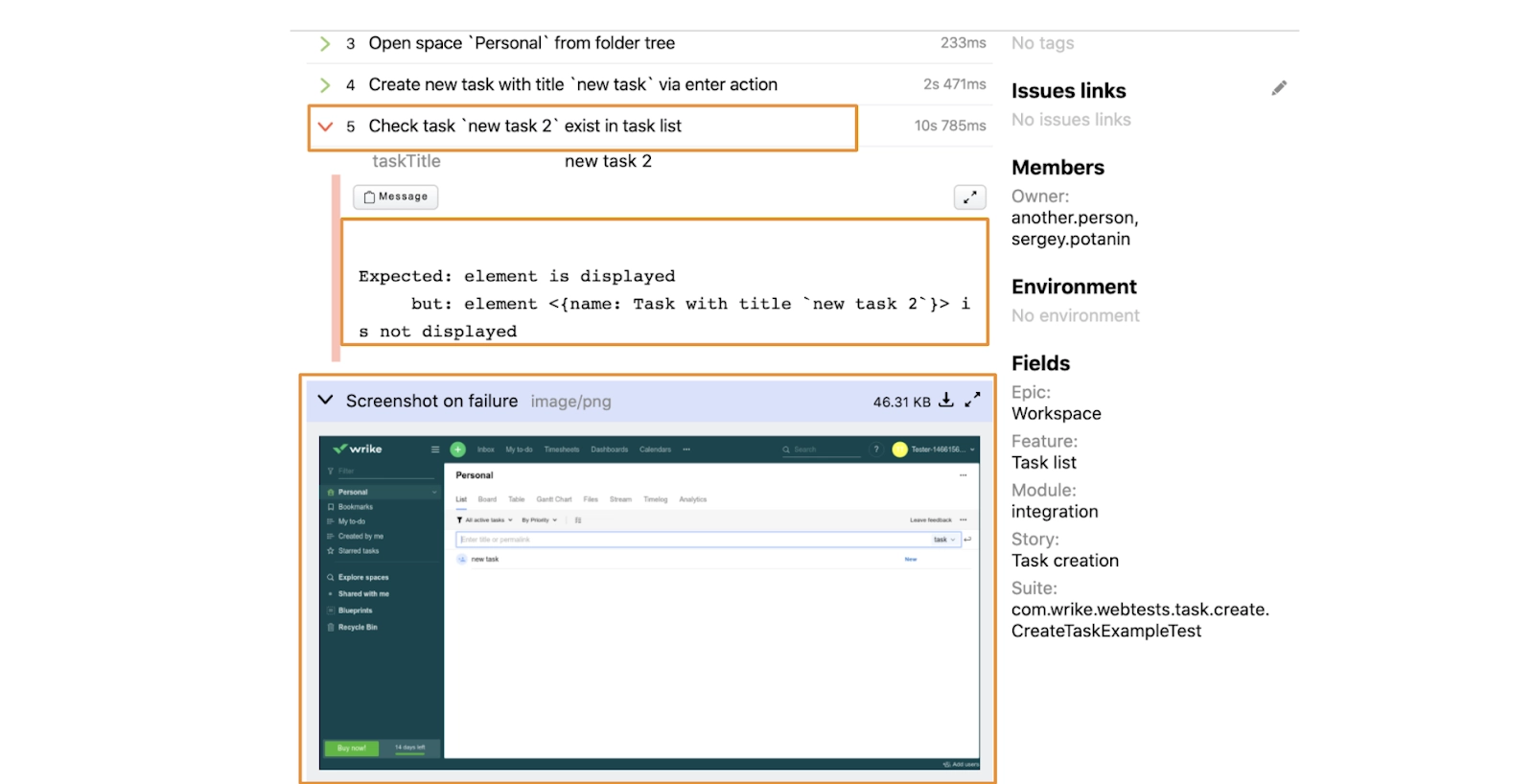

Scrolling further down, there’s also information about:

- At which step the test failed.

- The error message.

- The screenshot at the moment when the test failed.

How to automate the analysis

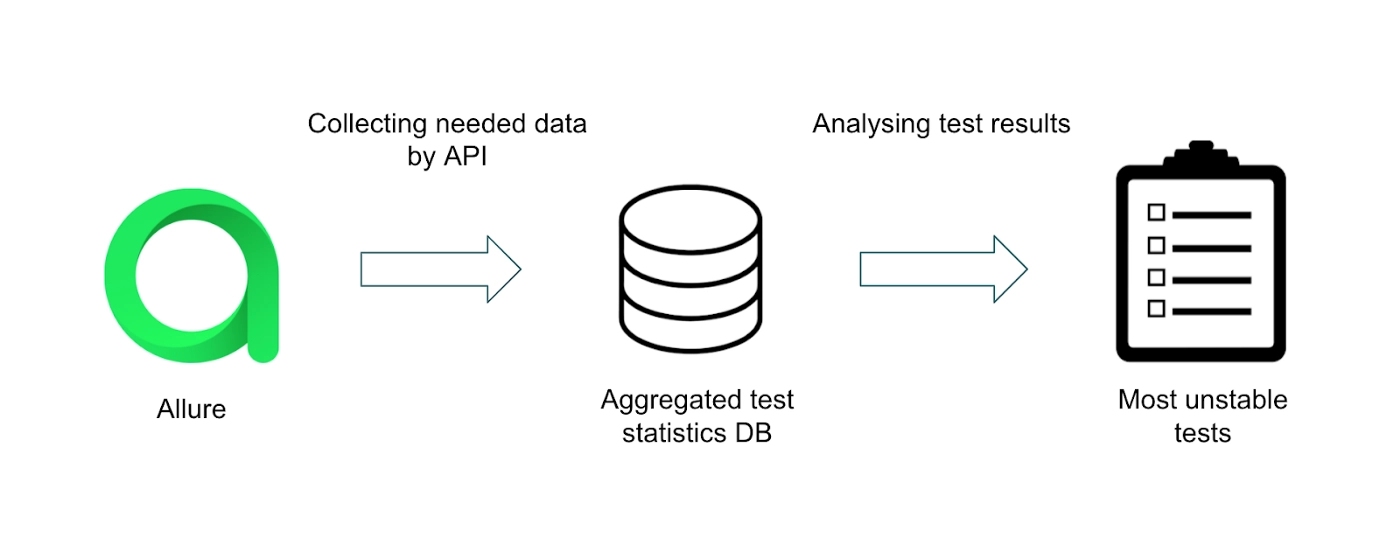

More importantly, all this information is accessible via the API, which means we can automate the analysis. And we did! We have a few services that collect and analyze test statistics.

Allure stores all the data for each test run, so we first copy the data we need into a separate database. This way, we don’t overload Allure with the many analytics requests we run during the analysis. The analysis considers test history, failure stack traces, the number of retries, and many other factors. As a result, we get a list of the most unstable tests.
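Wrike's actual scoring isn't described in detail, so the following is only a toy sketch of the final ranking step, assuming the copied history has one row per run with the final status and the number of retries. The score (share of runs that were not a clean first-try pass) and the names TestRun, Instability and StabilityReport are hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// One historical run of a test, as it might be copied into a separate analytics database.
record TestRun(long testCaseId, boolean passed, int retries) {}

record Instability(long testCaseId, double score, int runs) {}

final class StabilityReport {
    private StabilityReport() {}

    // Toy metric: share of runs that failed or needed at least one retry.
    // A real analysis (stack traces, history trends, ...) would weigh many more signals.
    static List<Instability> mostUnstable(List<TestRun> history, int limit) {
        Map<Long, List<TestRun>> byTest = history.stream()
                .collect(Collectors.groupingBy(TestRun::testCaseId));
        return byTest.entrySet().stream()
                .map(e -> {
                    List<TestRun> runs = e.getValue();
                    long unclean = runs.stream().filter(r -> !r.passed() || r.retries() > 0).count();
                    return new Instability(e.getKey(), (double) unclean / runs.size(), runs.size());
                })
                // Highest score first; ties broken by ID so the order is deterministic.
                .sorted(Comparator.comparingDouble(Instability::score).reversed()
                        .thenComparingLong(Instability::testCaseId))
                .limit(limit)
                .collect(Collectors.toList());
    }
}
```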

How to exclude unstable tests from reports and stop running them

Now we know which tests are unstable. We don’t want to see them in our reports anymore; we want to fix them first. Moreover, we don’t want to run them with other tests at all. How can we do that? First of all, we mute those tests.

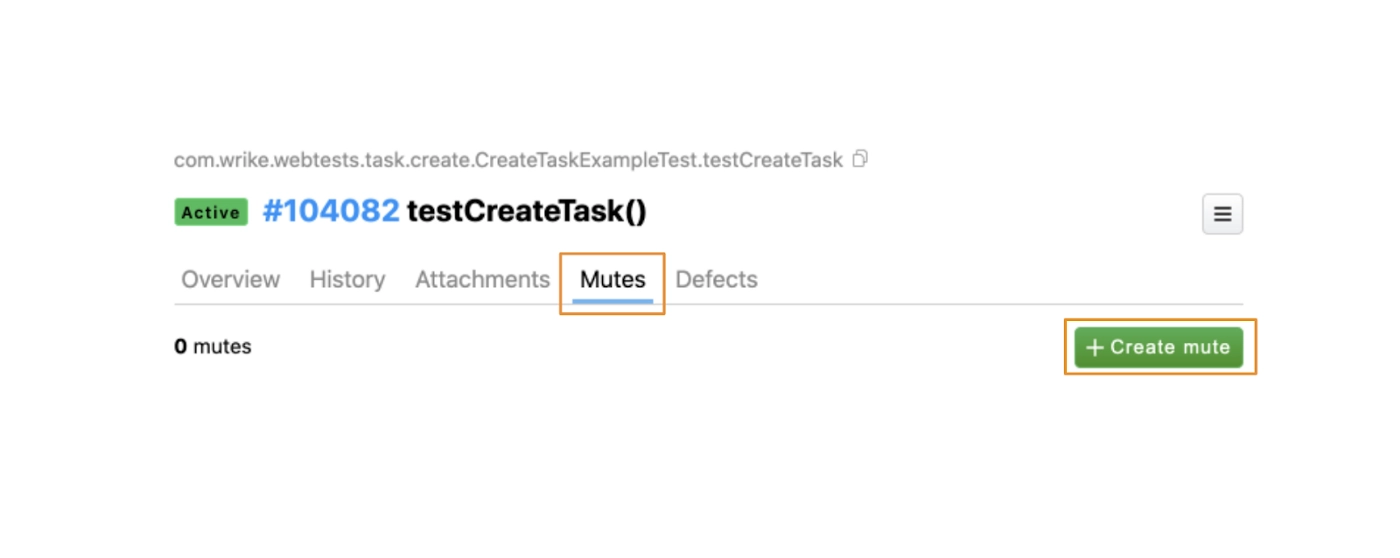

To do so, Allure TestOps has a Mutes tab with a “create mute” button that lets us mute a test:

For each mute, you can set its name and the reason for muting. You can also choose a task tracker and link the mute to the actual task ID in that tracker. At Wrike, we use Wrike as our tracker, so the issue ID is the ID of the corresponding Wrike task.

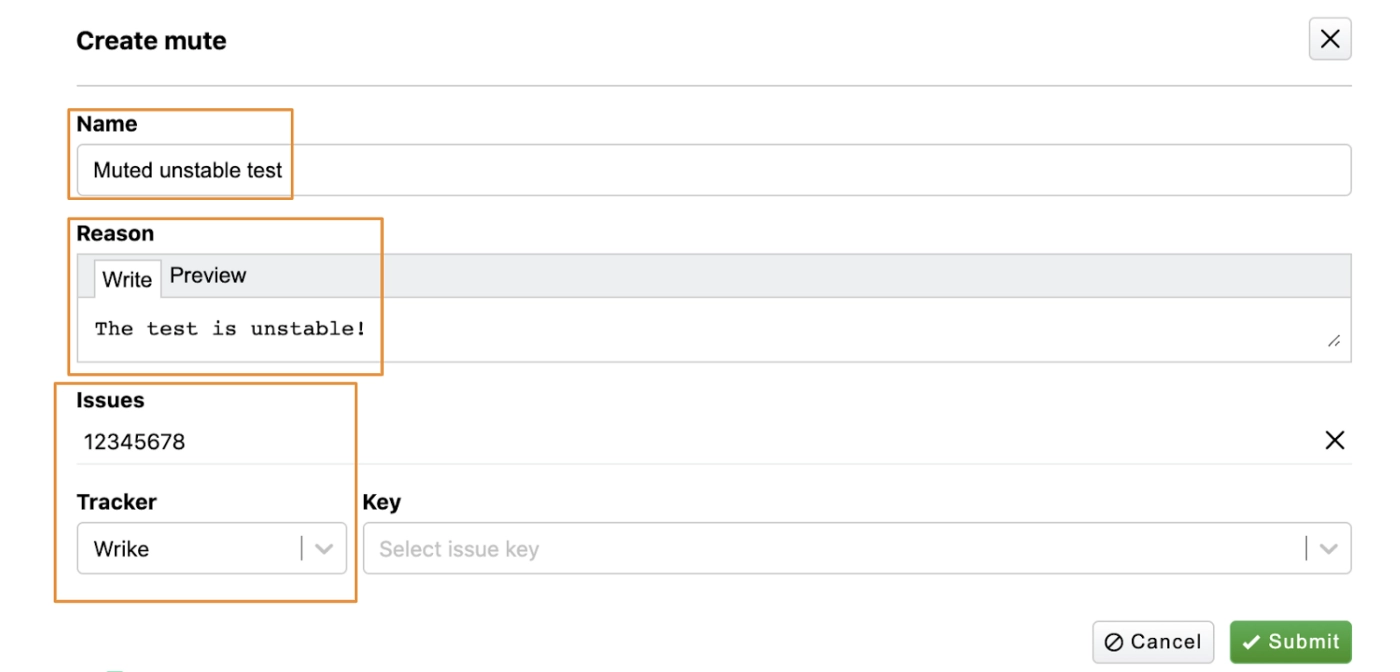

How we modified the test runner

Now all the bad tests are found and muted in Allure. Since the Allure API can return the list of muted tests, we modified our test runner at Wrike: before each test suite starts, it requests all muted tests from Allure and removes them from the tests we want to run. To explain it better, here’s a hypothetical example.

Imagine that we have a test suite we want to run. We pass a list of those tests to the test runner, but some of them are muted and we don’t want to run them. To know exactly which tests are muted right now, the runner requests the list of muted tests from Allure TestOps via its API. It then removes those tests from the original set, and the updated test suite is ready to go.
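The filtering step can be sketched as follows. The exact Allure TestOps endpoint and response format aren't shown in the story, so the API call is hidden behind a hypothetical MuteSource interface; SuitePlanner is also a made-up name.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical client: in practice this would call the Allure TestOps API.
interface MuteSource {
    Set<Long> mutedTestCaseIds();
}

final class SuitePlanner {
    private SuitePlanner() {}

    // Removes currently muted tests from the requested suite, keeping the original order.
    static Set<Long> plan(Set<Long> requested, MuteSource mutes) {
        Set<Long> muted = mutes.mutedTestCaseIds(); // fetched once, right before the suite starts
        Set<Long> toRun = new LinkedHashSet<>(requested); // copy, so the caller's set is untouched
        toRun.removeAll(muted);
        return toRun;
    }
}
```

Fetching the mute list once per suite means a mute created mid-run takes effect on the next run rather than changing the plan halfway through.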

How to understand which team is responsible for each test

So now we don’t have to bother with muted tests. But someone has to fix them. How do we find out who’s responsible for each test? At Wrike, we created a custom annotation for each team and linked these annotations to Allure, so we can see which team is responsible for which tests.

Let’s go back to our test code one more time and look at the class annotations.

```java
...
@Epic("Workspace")
@Feature("Task list")
@Story("Task creation")
@TeamExample
public class CreateTaskExampleTest {
...
```

Here you can see the custom class annotation @TeamExample, which contains information about the responsible testers from this team. Let’s dive into the code of this annotation.

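```java
import io.qameta.allure.LabelAnnotation;
import io.qameta.allure.LabelAnnotations;
import java.lang.annotation.Documented;
import java.lang.annotation.ElementType;
import java.lang.annotation.Inherited;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

@Documented
@Inherited
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.METHOD, ElementType.TYPE})
@LabelAnnotations({
        // "owner" label listing the whole team
        @LabelAnnotation(name = "owner", value = "sergey.potanin, another.person"),
        // One custom "ownerLabel" per person
        @LabelAnnotation(name = "ownerLabel", value = "sergey.potanin"),
        @LabelAnnotation(name = "ownerLabel", value = "another.person")
})
public @interface TeamExample {
}
```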

This is how we mark the responsible people from each team. In this stub example, the team members and test owners are me and another person. But what does it look like in Allure?

In the main view of the test in Allure, there’s a Members section. It contains the owners passed with the annotation shown above.

Therefore, Allure knows who is responsible for every test. And like many other things in Allure, this information is available via the API, which means we can automatically assign tasks for muted tests, or notify owners in Slack that some tests need their attention.
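As a sketch of the notification idea, assume the muted tests have already been fetched along with their ownerLabel values (the MutedTest shape, OwnerDigest and the fallback recipient are hypothetical, and sending to Slack is not shown). Grouping by owner produces one message per person instead of one per test.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical shape of a muted test as fetched from the API: its ID and its ownerLabel values.
record MutedTest(long testCaseId, List<String> owners) {}

final class OwnerDigest {
    private OwnerDigest() {}

    // Builds one message per owner listing their muted tests; tests without owners go to a fallback recipient.
    static Map<String, String> messages(List<MutedTest> muted, String fallbackOwner) {
        Map<String, TreeSet<Long>> byOwner = new TreeMap<>();
        for (MutedTest t : muted) {
            List<String> owners = t.owners().isEmpty() ? List.of(fallbackOwner) : t.owners();
            for (String owner : owners) {
                byOwner.computeIfAbsent(owner, k -> new TreeSet<>()).add(t.testCaseId());
            }
        }
        Map<String, String> result = new TreeMap<>();
        byOwner.forEach((owner, ids) -> result.put(owner, "Muted tests waiting for a fix: " + ids));
        return result;
    }
}
```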

Allure’s benefits

Let’s summarize the benefits of using Allure’s features and integrating them into our own automated system:

- We have structured, automatically maintained test documentation.

- Using test statistics, we built a system that identifies bad tests and mutes them.

- We know everything about test ownership, so we can mute tests and assign tasks to test owners.