“After Zephyr and TestRail there was no room for error. We spent much time exploring other options: digging through forums, discussing with colleagues, and reading books. In the end, at one of the testing conferences, we discovered Allure TestOps.”

Mayflower is a technology company that takes the entertainment industry to a new level of perception and engagement. The company has a passionate in-house team of tech experts who create its global IT solutions. Mayflower has over 300 employees across various countries, and its products serve more than 1 billion clients every month. The company prides itself on being experimental and constantly pushing boundaries, which positions it as an industry pioneer. Its core product is a streaming platform, complemented by ancillary products and expertise such as a payment system, AR/VR technology, machine learning, and computer vision.

Alexander Abramov and Maksim Rabinovich are QA engineers at Mayflower. The integration described here was implemented in one of the company's several projects, which comprises three services. Other projects used a different testing infrastructure.

The team was generally happy with JIRA and Zephyr, but getting answers for some queries (e.g. getting a complete list of manual and automated tests and checking the coverage of testing) was laborious, so they wanted something more efficient. Consequently, the team pursued two goals:

After several unsuccessful experiments to integrate manual and automated reports, there were still two separate test reports. Merging these separated reports into actionable and insightful analytics took lots of time. Moreover, manual testers complained about the UI/UX of test scenario editing in Zephyr.

These two factors forced Mayflower engineers to start the long journey of looking for a better solution.

The first attempt was TestRail. It was a great solution for storing test cases and test plans, running the tests, and showing fancy reports. However, it did not fix the problem of automation and manual testing integration.

TestRail provides API documentation for automation integration, which the Mayflower team used, and they even made an implementation of test run and status updates for each test. However, the development and maintenance of the integration turned out to be extremely time-consuming, so the journey for something more efficient continued.

After Zephyr and TestRail there was no room for error. Much time was spent exploring various other options: digging through posts left by holy wars on internet forums, listening to colleagues' advice, reading books, and having long discussions.

In the end, at one of the testing conferences, Mayflower discovered Allure TestOps, an all-in-one DevOps-ready testing infrastructure solution.

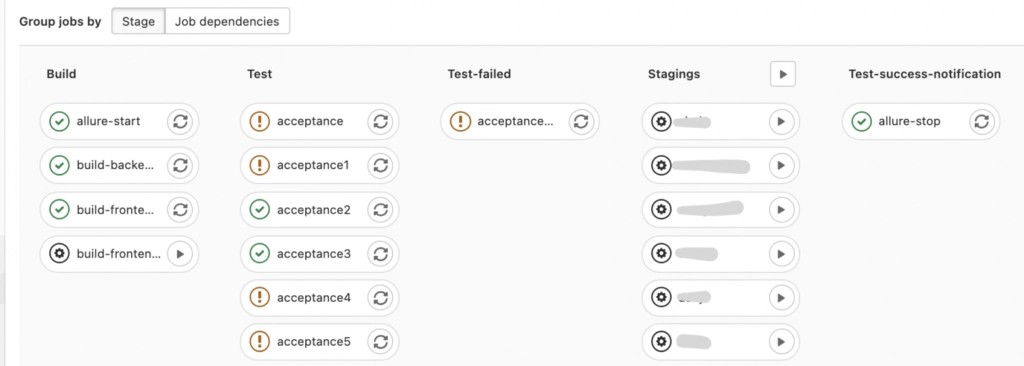

The team deployed a TestOps instance via docker-compose, with a long road still ahead. First of all, report uploading was set up via allurectl. The Mayflower team uses GitLab CI/CD, where some tests run in Docker containers and others run on test benches. Let's follow the steps they took. First, create a Dockerfile that installs allurectl:

    FROM docker/compose:alpine-1.25.5

    RUN apk add \
        bash \
        git \
        gettext

    ADD https://github.com/allure-framework/allurectl/releases/latest/download/allurectl_linux_386 /bin/allurectl

    RUN chmod +x /bin/allurectl

Second, configure allure-start and allure-stop jobs so that the pipeline runs allurectl when tests run on CI and sends the results to Allure TestOps.

See the allure-start example below:

    allure-start:
      stage: build
      image: //your project image
      interruptible: true
      variables:
        GIT_STRATEGY: none
      tags:
        - //if needed
      script:
        - allurectl job-run start --launch-name "${CI_PROJECT_NAME} / ${CI_COMMIT_REF_NAME} / ${CI_COMMIT_SHORT_SHA} / ${CI_PIPELINE_ID}" || true
        - echo "${CI_PROJECT_NAME} / ${CI_COMMIT_REF_NAME} / ${CI_COMMIT_SHORT_SHA} / ${CI_PIPELINE_ID} / ${CI_JOB_NAME}"
      rules:
        - if: //configure rules and conditions below if needed
          when: never
        - when: always
      needs: []

And this is what allure-stop might look like:

    allure-stop:
      stage: test-success-notification
      image: //your project image
      interruptible: true
      variables:
        GIT_STRATEGY: none
      tags:
        - //if needed
      script:
        - ALLURE_JOB_RUN_ID=$(allurectl launch list -p "${ALLURE_PROJECT_ID}" --no-header | grep "${CI_PIPELINE_ID}" | cut -d' ' -f1 || true)
        - echo "ALLURE_JOB_RUN_ID=${ALLURE_JOB_RUN_ID}"
        - allurectl job-run stop --project-id ${ALLURE_PROJECT_ID} ${ALLURE_JOB_RUN_ID} || true
      needs:
        - job: allure-start
          artifacts: false
        - job: acceptance
          artifacts: false
      rules:
        - if: $CI_COMMIT_REF_NAME == "master"
          when: never

It's important to note that Allure, via the testing framework, creates an allure-results folder in {project_name}tests/_output. All that's left to do is upload the artifacts created after the test run via the allurectl console command:

    .after_script: &after_script
      - echo -e "\e[41mShow Artifacts:\e[0m"
      - ls -1 ${CI_PROJECT_DIR}/docker/output/
      - allurectl upload ${CI_PROJECT_DIR}/docker/output/allure-results || true

In addition, the Mayflower team has implemented failed test notifications to Slack. It's easy enough: fetch the launch list and grep it for the pipeline ID to get the launch ID, like this:

    if [[ ${exit_code} -ne 0 ]]; then
      # Get Allure launch id for message link
      ALLURE_JOB_RUN_ID=$(allurectl launch list -p "${ALLURE_PROJECT_ID}" --no-header | grep "${CI_PIPELINE_ID}" | cut -d' ' -f1 || true)
      export ALLURE_JOB_RUN_ID
      # ... the Slack message itself is sent here (not shown in the original)
    fi

Ultimately, the tests run in Docker using a local environment. This triggers the allure-start job, which creates the launch that test results are uploaded to later.
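The launch lookup used in allure-stop and in the Slack notification boils down to filtering the launch list by pipeline ID and taking the first column. The sketch below isolates that step so it can be tried without a TestOps instance, and shows one possible way to turn the result into a Slack message. It assumes the launch ID is the first space-separated column of the `allurectl launch list --no-header` output, as the original `cut -d' ' -f1` implies. The helper names `find_launch_id` and `slack_payload`, the `ALLURE_ENDPOINT` and `SLACK_WEBHOOK_URL` variables, and the `/launch/<id>` link shape are illustrative assumptions, not part of Mayflower's pipeline.

```shell
#!/usr/bin/env bash
# Sketch only: column layout, variable names and URL shape are assumptions.

# Print the id of the first launch whose line mentions the given pipeline id.
# Reads launch-list rows from stdin; prints nothing if there is no match.
find_launch_id() {
  local pipeline_id="$1"
  # -F: match a fixed string, -w: whole word, so 471 does not match 4711.
  { grep -F -w -- "${pipeline_id}" || true; } | head -n 1 | cut -d' ' -f1
}

# Build a Slack incoming-webhook JSON payload that links to the launch.
# Assumes the arguments contain no double quotes or backslashes.
slack_payload() {
  local endpoint="$1" launch_id="$2" job_name="$3"
  printf '{"text":"Tests failed in %s: %s/launch/%s"}' \
    "${job_name}" "${endpoint%/}" "${launch_id}"
}

# Possible use inside a CI job (not executed here):
#   id=$(allurectl launch list -p "${ALLURE_PROJECT_ID}" --no-header | find_launch_id "${CI_PIPELINE_ID}")
#   curl -X POST -H 'Content-type: application/json' \
#        --data "$(slack_payload "${ALLURE_ENDPOINT}" "${id}" "${CI_JOB_NAME}")" \
#        "${SLACK_WEBHOOK_URL}"
```

Matching whole words keeps a pipeline ID from matching a longer number that merely contains it, which a plain `grep` on the ID would do.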

The team has also configured the Parent-child pipeline to deploy a branch to a physical testing instance and run a set of tests on it. To do so, we fetch all the necessary variables (${CI_PIPELINE_ID} and ${PARENT_PIPELINE_ID}) and make sure that all the results are stored in a single Allure TestOps launch:

    .run-integration: &run-integration
      image:
      services:
        - name: //your image
          alias: docker
      stage: test
      variables:
        <<: *allure-variables
      tags:
        - //tags if needed
      script:
        - echo "petya ${CI_PROJECT_NAME} / ${CI_COMMIT_REF_NAME} / ${CI_COMMIT_SHORT_SHA} / ${CI_PIPELINE_ID} / ${PARENT_PIPELINE_ID} / ${CI_JOB_NAME} / ${SUITE}"
        - cd ${CI_PROJECT_DIR}/GitLab
        - ./gitlab.sh run ${SUITE}
      after_script: *after_script
      artifacts:
        paths:
          - docker/output
        when: always
        reports:
          junit: docker/output/${SUITE}.xml
      interruptible: true

“At last, we got the first results. It took us a whole Friday to set up and get tests with steps and a pie chart with stats, but it meant that for each commit or branch creation, the necessary test suite was run and the results were returned to Allure. It was our first win!”
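For the parent-child pipeline setup described above, the key detail is that child jobs must identify the launch by the parent's pipeline ID rather than their own. A minimal sketch of that choice follows. It assumes the parent pipeline passes its ID to the child as `PARENT_PIPELINE_ID` (for example through the trigger job's `variables`), and the helper names `launch_pipeline_id` and `launch_name` are hypothetical. The name format mirrors the one used in allure-start.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical; the variables are the GitLab CI
# ones used in the article, plus PARENT_PIPELINE_ID passed from the parent.

# Pipeline id that should identify the launch:
# the parent's id inside a child pipeline, otherwise the pipeline's own id.
launch_pipeline_id() {
  printf '%s' "${PARENT_PIPELINE_ID:-${CI_PIPELINE_ID}}"
}

# Launch name in the same "project / ref / sha / pipeline" format as allure-start.
launch_name() {
  printf '%s / %s / %s / %s' \
    "${CI_PROJECT_NAME}" "${CI_COMMIT_REF_NAME}" \
    "${CI_COMMIT_SHORT_SHA}" "$(launch_pipeline_id)"
}
```

Because both the parent and the child resolve to the same ID, a lookup by pipeline ID finds one launch regardless of which pipeline ran the tests.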

However, there was a shock when it came to looking at the automated tests tree — we found an unreadable structure that looked like this:

The tree is built on the principle 'Test Method name + Data Set #x'.

Steps had names like "get helper", "wait for element x", or "get web driver", without any details about what exactly happens within the step. If a manager were to see such a test case, there would be more questions than answers, and unfortunately, there was no way to solve this issue in a couple of clicks!

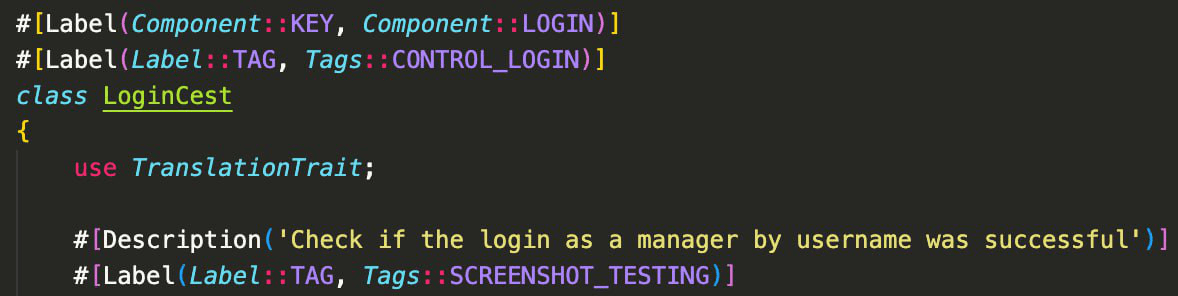

In fact, it took almost two weeks to refactor the automation codebase, annotate each test with metadata, and wrap each step in human-readable names, before the team could benefit from the TestOps flexibility of test case management.

The usual metadata description, written as Javadoc-style annotations adapted for PHP, looks like this:

    /**
     * @Title("Short Test Name")
     * @Description("Detailed Description")
     * @Features({"Which feature is tested?"})
     * @Stories({"Related Story"})
     * @Issue({Requirements})
     * @Labels({
     *     @Label(name = "Jira", values = "SA-xxxx"),
     *     @Label(name = "component", values = "Component Name"),
     *     @Label(name = "layer", values = "the layer of testing architecture"),
     * })
     */

In the end, you'll get something like this:

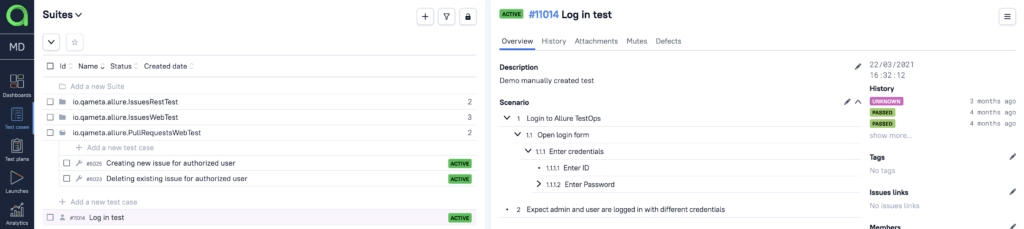

This is the "Group by Features" view. In two clicks, the view can be switched to a different grouping, filter, or sort order.

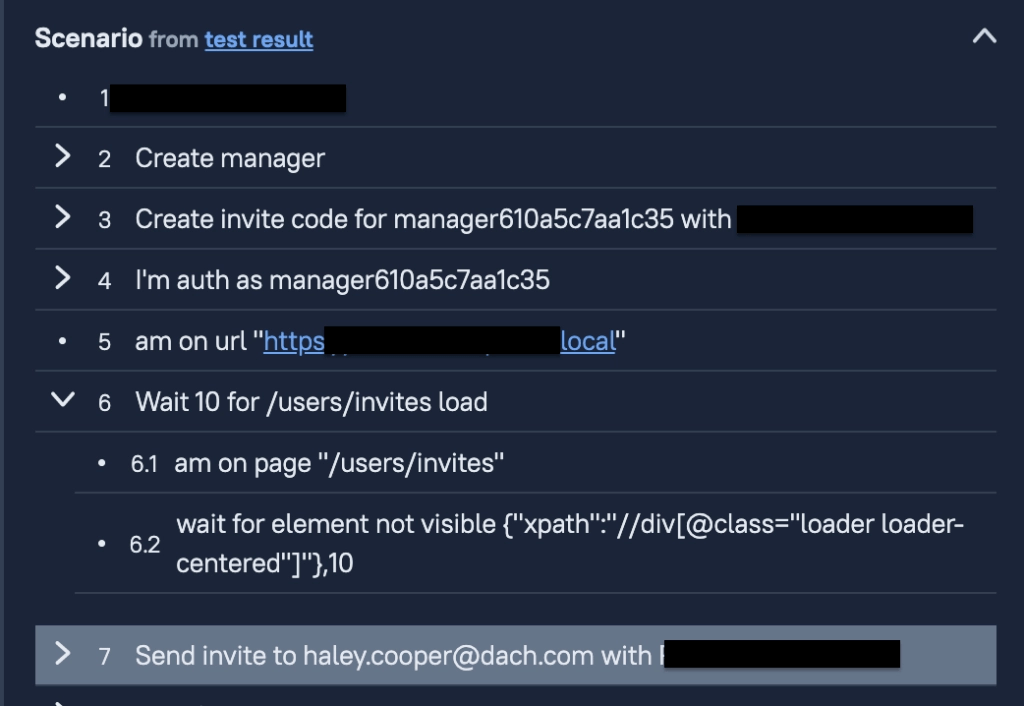

Next, the automated test steps need to be put in order. For this, the Allure executeStep method was used as a wrapper. In this example, the human-readable step text is passed as the first argument to executeStep, and the call to addInvite is wrapped in the closure passed as the second argument:

public function createInviteCode(User $user, string $scheme): Invite

{

return $this->executeStep(

'Create invite code for ' . $user->username . ' with ' . $scheme,

function () use ($user, $scheme) {

return $this->getHelper()->addInvite(['user' => $user, 'schemeType' => $scheme]);

}

);

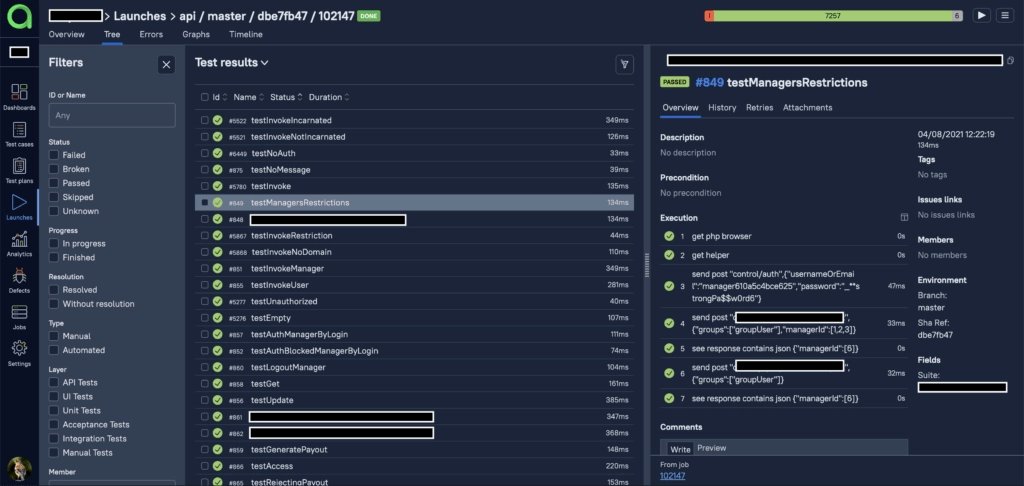

}With this, all the realization details can be hidden under the hood, and the steps are given readable names. In the Allure TestOps UI, the test now looks like this:

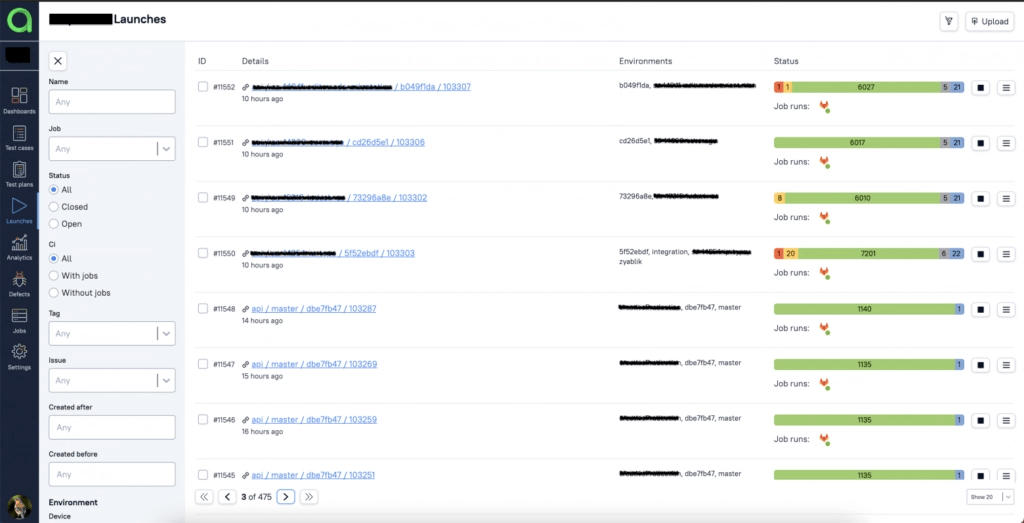

“At this point, we achieved the first journey milestone: a Launches page in Allure TestOps. This allows you to see all the Launches on branches/commits and access the list of tests for each launch. We have also got a flexible view constructor for testing metrics dashboarding: tests run, passed and failed tests statistics, etc.”

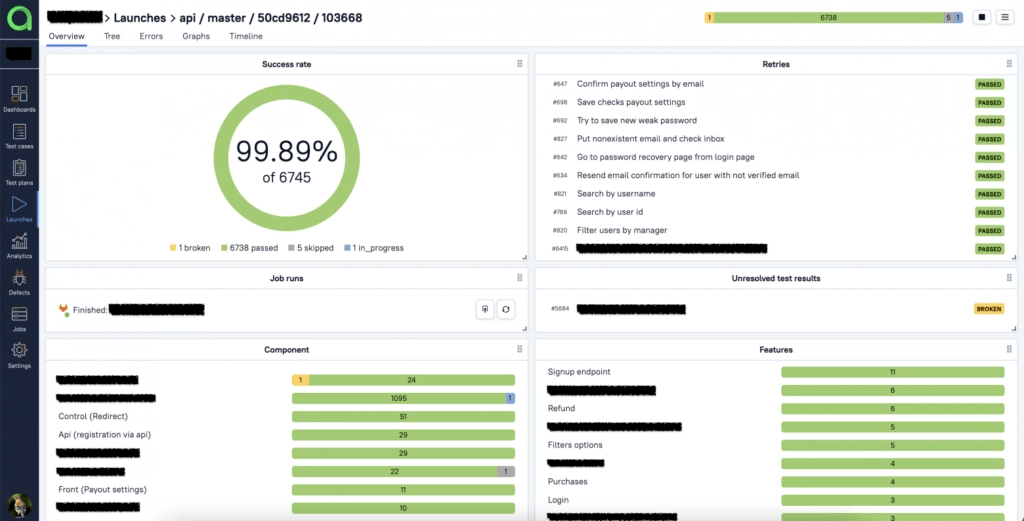

Entering a Launch also provides more details: components or features involved, number of reruns, test case tree data, test duration, and other information.

And there is one more mind-blowing feature: Live Documentation that automatically updates test cases.

The mechanics are elegant. Imagine that someone updates a test in the repository, and the test gets executed within a branch/commit test suite. As soon as the Launch is closed, Allure TestOps updates the test case in UI according to all the changes in the last run. This feature lets you forget about manual test documentation updates.

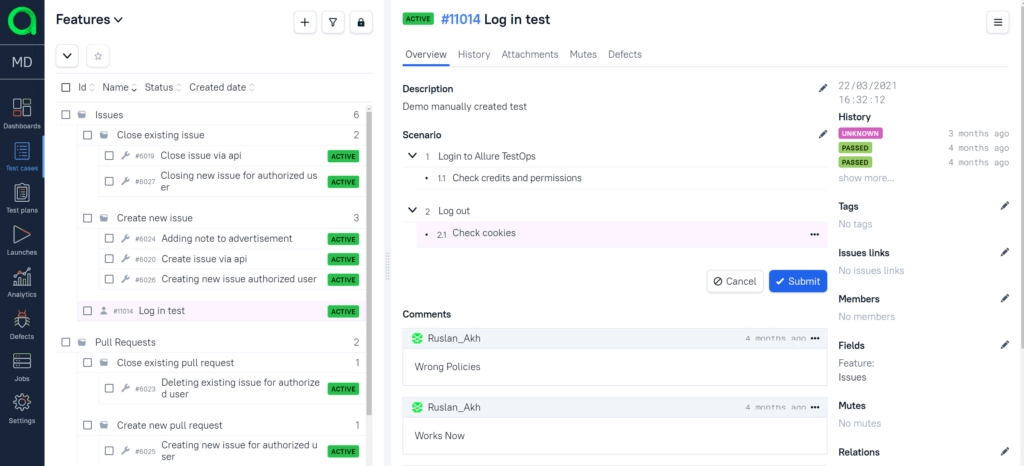

With the automation working well, the next step was to migrate all the manual tests from TestRail to Allure TestOps. A TestRail integration provides a lot of flexibility to import the tests to Allure TestOps, but the challenge was to adapt them all to an Allure-compatible logic.

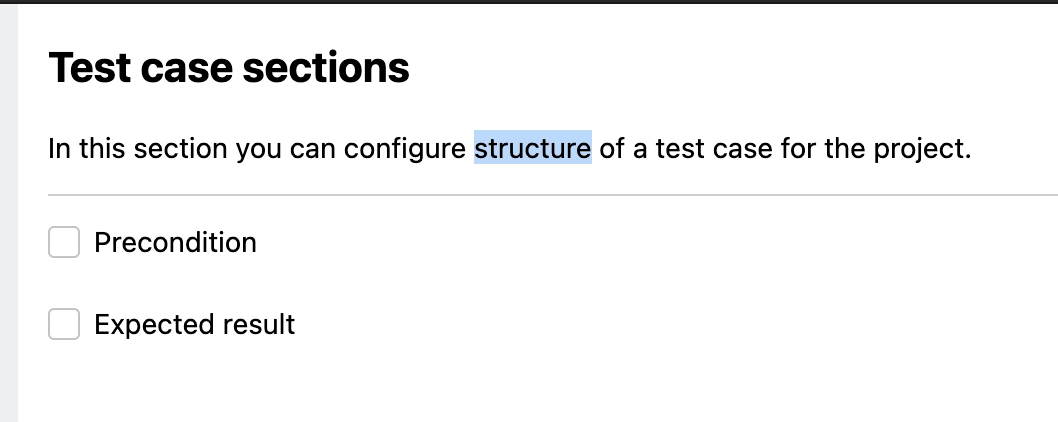

The default logic is built on the principle that a test case contains a finished scenario while the expected result stays optional. In case an expected result is needed, it goes to a nested step:

For those that enjoy a precondition style, there is an option to choose this structure for a project:

In the end, the Mayflower team had to adapt a lot of its manual tests to the new structure. It took some time, but the result was a database that contained both manual and automated tests in one place, with end-to-end reporting. Everyone thought they were ready to present the result to colleagues and management. Or were they?

Nope! On the day before the presentation, the team decided to set up all the reports and filters to demonstrate how it all worked. To everyone's shock, the test case tree structure was completely messed up! The cause was quickly found: the automated tests were structured by code annotations, while the manual ones had been created via the UI without the corresponding annotations. This led to confusion in the test documentation and in test result updates after each run on the main branch.

“Test Cases as Code concept: the main idea is to create, manage, and review manual test cases in the code repository via CI.”

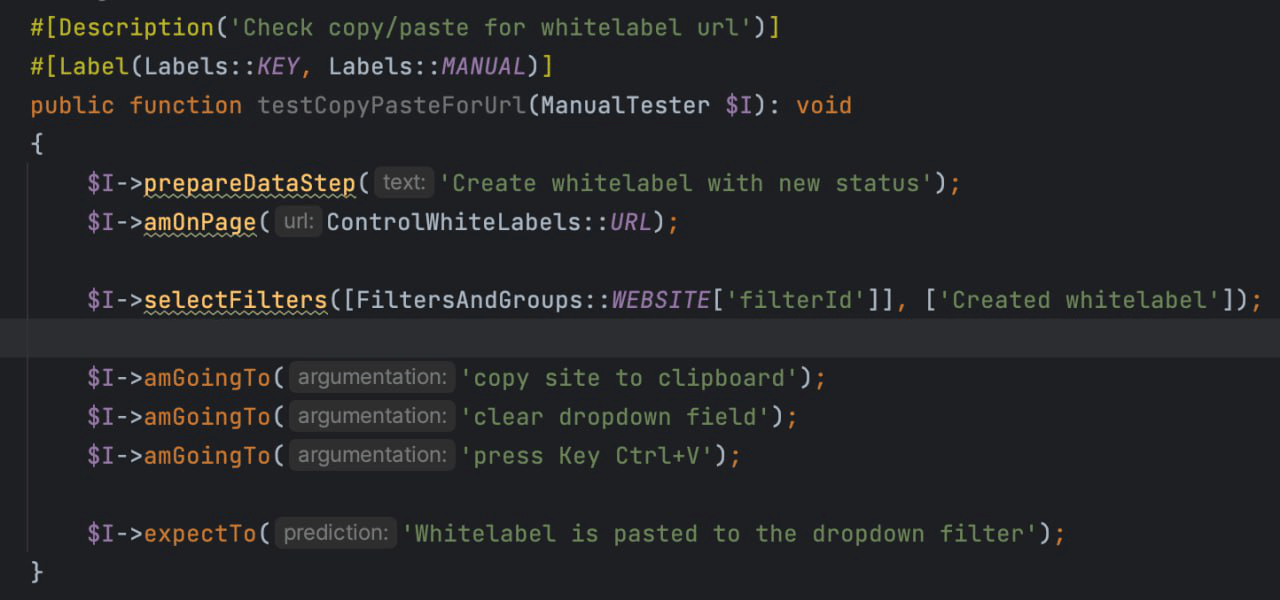

To fix this issue, the test case tree was rebuilt and the manual tests were left out. More time was spent on research, and the team discovered the Test Cases as Code concept. Its main idea is to create, manage, and review manual test cases in the code repository via CI.

This solution is easily supported in Allure TestOps: a manual test is just created in code, marked with all the necessary annotations, and then the MANUAL label is added to it. Like this:

    /**
     * @Title("Short title")
     * @Description("Detailed description")
     * @Features({"The feature tested"})
     * @Stories({"A story"})
     * @Issues({"Link to the issue"})
     * @Labels({
     *     @Label(name = "Jira", values = "JIRA ticket id"),
     *     @Label(name = "component", values = "Component"),
     *     @Label(name = "createdBy", values = "Test Case author"),
     *     @Label(name = "ALLURE_MANUAL", values = "true"),
     * })
     * @param IntegrationTester $I Integration Tester
     * @throws \Exception
     */
    public function testAuth(IntegrationTester $I): void
    {
        $I->step(text: 'Open google.com');
        $I->step(text: 'Accept cookies');
        $I->step(text: 'Click Log In button');
        $I->subStep(text: 'Enter credentials', subStep: 'login: Tester, password:blabla');
        $I->step(text: "Expect name Tester");
    }

The step method is simply a wrapper for the internal Allure TestOps executeStep method. The only difference between a manual and an automated test is the ALLURE_MANUAL label. When a launch starts, manual tests get an "In progress" state, which indicates that the test case must be executed by manual testers. The launch will automatically balance manual tests among the testing team members.

The Test Cases as Code approach also allows you to go further and create manual test actors as well as methods that can be used as shared steps in manual tests. It is up to you to do so.

“At this point, we had happily got all the tests stored and managed in Allure TestOps with reporting, filtering, and dashboards now working as expected, and moreover, we had achieved our two goals!”

Two years passed and we asked Alexander to share some more details of how the project developed. Here is the new story for Mayflower.

In addition to the main service, there are two more services that are closely related to each other. The second service is written in TypeScript and is designed for advertising the streaming website: it contains banners, widgets, landing pages, and other elements. Pairing PHP with Codeception had worked well for the main service, so the team kept to the approach of using one programming language for both development and testing. For the second service, we settled on the combination of CodeceptJS and Playwright (which CodeceptJS officially supports). In PHP, it is now possible to work with attributes, and the Allure adapter supports them as well.

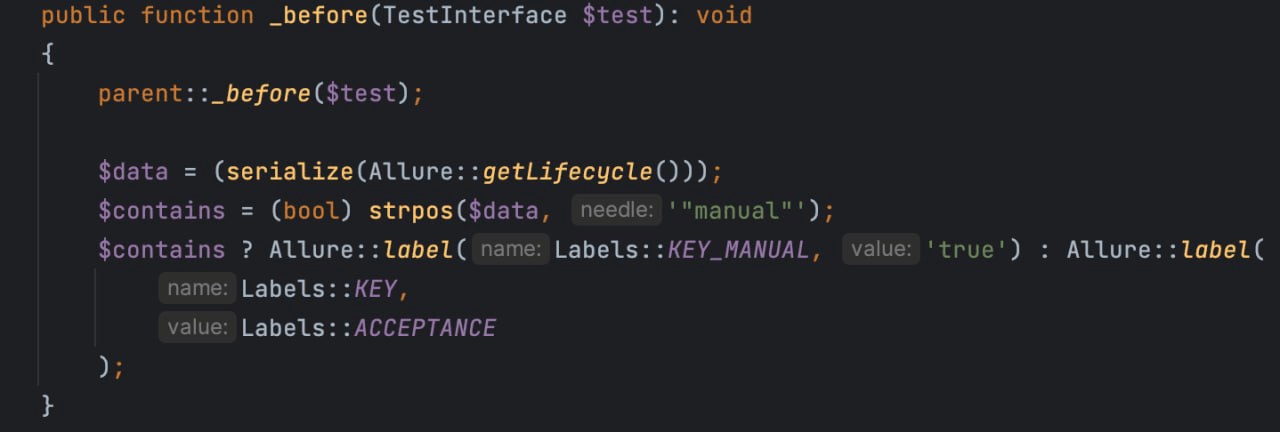

Additionally, over time, we have started adding labels to all tests in the __before method.

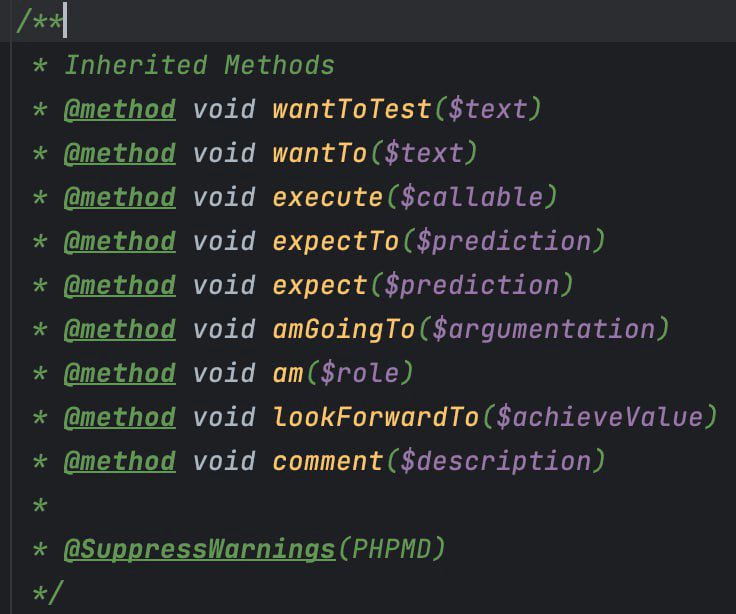

And for manual tests, we also use Inherited Methods, which are already implemented in Codeception, as well as the executeStep method from Allure.

Therefore, we have to use the Allure adapter methods and call the callable function, passing in a clear description. However, it should be noted that integrating Allure with the project is quite deep. There may be difficulties in transferring the project if necessary.

Several jobs have been configured to allow filtering by job, SHA-ref, or branch. Integration with JIRA has also been set up. After this, it is convenient to filter in Allure.

A third service, written in Go, acts as an intermediary for the advertising service and collects statistics for the web streaming site. The statistics are available in a service that collects, processes, and displays the data. Event processing in Go is done through multiple endpoints, and the statistics can be obtained through the API.

Go was used for writing the tests here as well. We found the “cute” framework from Ozon, which was wrapped in Docker and placed in the test folder. To make the reporting more visual, we used an adapter for Allure. Initially, we did not plan to use Allure, but decided to add it when the features we needed appeared. The Go tests have been marked and labeled, but manual tests have not been implemented yet.

All three services upload their results to Allure on each commit, which lets Allure build a single, tidy tree of all services in different languages, provided the "update from master branch" parameter is set. This made it possible to create a test plan that includes both manual and automated tests from all three projects!

Manual tests are run only once a month. They usually take a lot of time, and the report is often ready only the next day. Automating them would cut the testing time and deliver the report on time, but that is a plan for the future.

Speaking of dashboards, Allure TestOps provides a special "Dashboards" section containing a set of small customizable widgets and graphs. All the tests, numbers, launches, usage, and result statistics data are available on a single screen.

As you see, the Test Cases pie chart shows the total number of test cases, and the Launches graph provides some details about the number of test executions by day. Allure TestOps provides more views and a flexible JQL-like language for custom dashboards, graphs, and widgets configuration. The Mayflower team really loved the Test Case Tree Map Chart - the coverage matrix that allows estimating automation and manual coverage sorted by feature, story, or any other attribute you define in your test cases.

It would be great to have a dashboard that shows the number of failures of the same test on the master branch for a week. This would be very useful for us. Another nice-to-have feature would be statistics on flaky tests. It would be easy to gather this data. Providing such statistics would be greatly appreciated.

At the moment, the "Bulk Action" feature in launches is not implemented, but it would be a great feature. Sometimes the number of pages in a launch reaches 1000 and it's necessary to filter jobs and delete them with one click.

The coverage chart may be inconvenient because it's represented only by squares, not a list. The main thing is to make the Treemap clickable. When we delve into a test case, it's unclear what's inside - there's just a red square. It's not an obvious thing. If you delve into it, you can understand, but initially it's not obvious.

In the end, those are the benefits that Mayflower derived from automating with Allure TestOps:

Mayflower's ambitious goal is to become the leading fun-tech company in terms of both user base and revenue.

Their primary focus is on generating fun, and they are constantly exploring new ideas and technologies to enhance their users' experience. While VR and AR are currently popular and highly anticipated, the company recognizes that there may be unforeseen technologies emerging in the future, and they are prepared to meet them.