“It might look like advertising, but honestly, Allure TestOps is a significant tool that has boosted our process to a qualitatively new level.”

Alex Shurov, Head of QAA at Wrike

Wrike is a versatile collaborative work management platform of choice for 20k+ companies in more than 140 countries. It boosts productivity by 50% and helps cut down routine by 90%.

The intensity of the testing process at Wrike is amazing. They run approximately 130k-180k tests every day and they do 3k+ runs of their E2E builds every month. Their Main Project in Allure has 55k stored launches over the past 6 months. Now, this is test automation taken to a whole new level!

We've talked to Alex Shurov, head of QAA at Wrike, and Ivan Varivoda, QA Automation Tech Lead there. You can watch our conversation with Alex and Ivan on our YouTube channel:

Alex Shurov - head of QAA at Wrike. He joined the Wrike team in 2010 and was the first QA Automation Engineer.

Ivan Varivoda - QA Automation Tech Lead at Wrike. He has been at Wrike since 2017 and is responsible for QAA tooling, part of the test infrastructure, and improving the quality of tests.

When Wrike adopted TestOps, they took a significant risk going for a tool that had not seen much usage at that point. So what made them take the risk and what setup were they using before TestOps? Their test management system had several iterations:

In the end, Wrike adopted Allure TestOps as their test reporting solution. The Allure plugin UI was already familiar, so this made the transition somewhat easier.

At Wrike, the most important features of TestOps turned out to be having manual and automated tests in the same space, automatically updating all tests based on their code, generating reports in real time, creating custom annotations and muting flaky tests.

Real-time report generation cut down the waiting time considerably. Recently, the Wrike team ran an experiment with the TeamCity plugin for Allure Report: it was used to run all of the 52k+ tests that the team maintains. The Allure results ended up being 3 GB in size, and generating the report took about 15 minutes. During that time, other activities are halted; with TestOps, you don't need to wait the full 15 minutes if the data you need has already been generated.

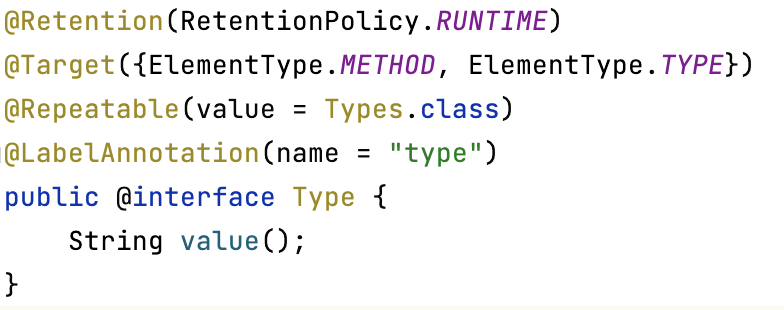

In addition to the epic/feature/story annotations already present in Allure Report, TestOps allows you to create custom annotations:

These can then be used in the same way as epics and features. You can also use them to mark technical attributes of tests (e.g. a smoke test, a test that can be run on Safari, a screenshot test). At Wrike, a specific annotation is created for each team so that it's easier to determine test ownership.

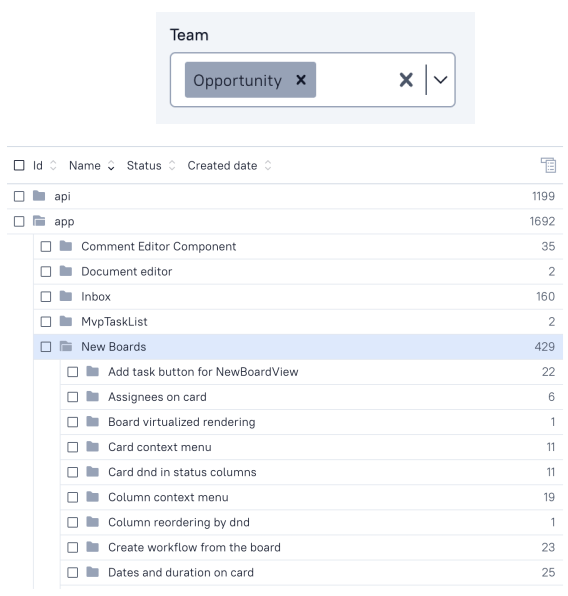

What's even better is that these custom annotations can be used as filters to select all tests with a specific annotation. This can be done in the tree view:

Here, the custom annotation for the Opportunity team is used to select tests made by that team.

Selenium tests are not stable and are easily broken. With TestOps, it is possible to mute these flaky tests. There is a problem, however: even though a muted test is not counted in the result, it is still being run. This lengthens the duration of test runs considerably.

To solve this issue, the Wrike team used the '@TestCaseID' annotation provided by TestOps. The ID is the same for the automated test and the test case, so once it is known which test cases are muted, the respective tests can be excluded from the run.
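The exclusion step can be illustrated with a minimal Python sketch. It is not Wrike's implementation: the `AutomatedTest` class, the `select_runnable` helper, and the hard-coded set of muted IDs are all hypothetical, and a real setup would fetch the muted IDs from TestOps rather than list them by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomatedTest:
    name: str
    # Hypothetical field: the ID shared by the automated test and its test case.
    test_case_id: int


def select_runnable(tests, muted_ids):
    """Return only the tests whose test case ID is not in the muted set."""
    muted = set(muted_ids)
    return [t for t in tests if t.test_case_id not in muted]


tests = [
    AutomatedTest("login_smoke", 101),
    AutomatedTest("drag_and_drop_task", 102),
    AutomatedTest("export_report", 103),
]

# Assumption: in practice this set would be loaded from TestOps.
muted_ids = {102}

runnable = select_runnable(tests, muted_ids)
print([t.name for t in runnable])
```

Because the muted test never enters the run list, it no longer adds to the duration of the test run.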

Allure TestOps provides a rich API for creating custom solutions. This was used at Wrike for automating flaky test handling and gathering metrics.

Once the muting / unmuting system was up and running, the next step was to automate it. Several services were written that mute tests automatically based on their success rate. Once a test is muted, a special task is created to fix it. The system then uses the custom team annotations to find whoever wrote the test and assigns them the new task.
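As a rough illustration of muting by success rate, here is a small Python sketch. The threshold of 0.8, the minimum of 5 runs, the team names, and the task dictionary are assumptions made for the example; the article does not state the values or data structures Wrike actually uses.

```python
def success_rate(results):
    """Fraction of passed runs in a list of booleans (True = passed)."""
    if not results:
        return 1.0  # no history yet: treat the test as healthy
    return sum(results) / len(results)


def should_mute(results, threshold=0.8, min_runs=5):
    """Mute only when there is enough history and the rate is below the threshold.

    Both `threshold` and `min_runs` are illustrative assumptions.
    """
    return len(results) >= min_runs and success_rate(results) < threshold


def create_fix_task(test_name, team_annotation, team_assignees):
    """Build a hypothetical fix task assigned via the test's team annotation."""
    assignee = team_assignees.get(team_annotation, "unassigned")
    return {"title": f"Fix flaky test: {test_name}", "assignee": assignee}


history = [True, False, True, True, False]  # 3 of 5 runs passed
team_assignees = {"Opportunity": "opportunity-team-lead"}  # hypothetical mapping

if should_mute(history):
    task = create_fix_task("drag_and_drop_task", "Opportunity", team_assignees)
    print(task)
```

Requiring a minimum number of runs is a design choice in this sketch: it keeps a brand-new test from being muted after a single unlucky failure.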

Suppose the test gets fixed - what then? Once per day, a special launch is performed for all muted tests, to find those that can be unmuted. Every muted test stores the id of its task. The system checks that both

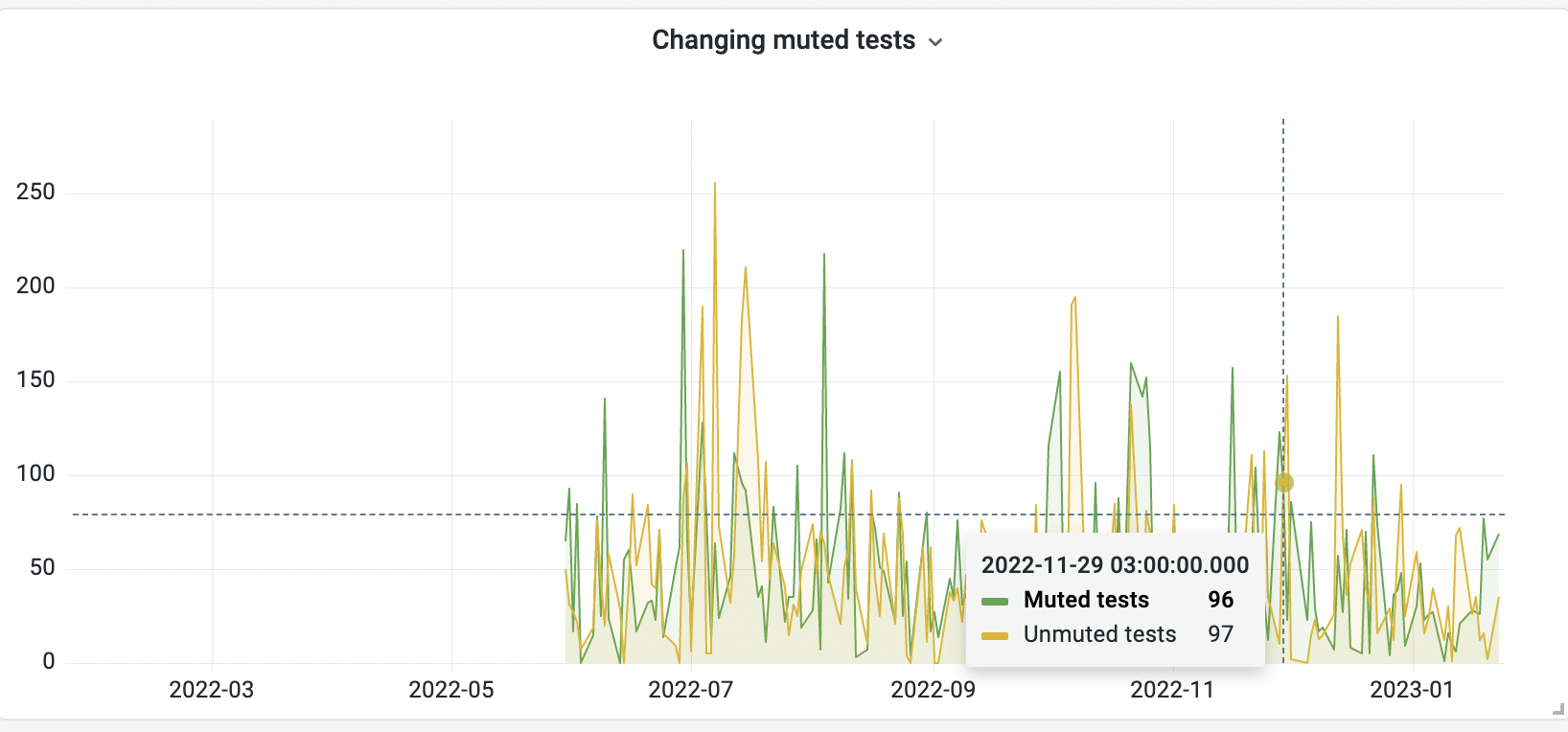

If that is the case, or if the test passes 10 times in a row without any info on the task, then the test is unmuted by the system. Thus, other than dealing with the code of the test, the whole process is automated. Here's a chart of tests being muted and unmuted:

As you can see, an average day might see 96 tests newly muted and 97 unmuted (with the total number of muted tests hovering around 1.4k right now). If you'd like to know more about how Wrike manages flaky tests, take a look at this presentation by Ivan Varivoda.
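The unmuting rule described above can be sketched in Python as follows. The two task checks are passed in as a single opaque flag, `task_checks_passed`, because this sketch makes no assumption about what they are; `None` stands for "no info on the task". Only the figure of 10 consecutive passes comes from the text, and all function names are hypothetical.

```python
REQUIRED_STREAK = 10  # from the text: 10 passes in a row


def trailing_passes(results):
    """Count consecutive passes at the end of the history (True = passed)."""
    count = 0
    for passed in reversed(results):
        if not passed:
            break
        count += 1
    return count


def should_unmute(results, task_checks_passed):
    """Decide whether a muted test can be unmuted.

    task_checks_passed: True or False when task info is available,
    None when there is no info on the task.
    """
    if task_checks_passed is True:
        return True
    if task_checks_passed is None:
        return trailing_passes(results) >= REQUIRED_STREAK
    return False


print(should_unmute([False] + [True] * 10, None))  # streak of 10, no task info
print(should_unmute([True] * 9, None))             # streak too short
```

In this reading, a passing streak only counts when there is no task info; if the task checks are available and fail, the test stays muted.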

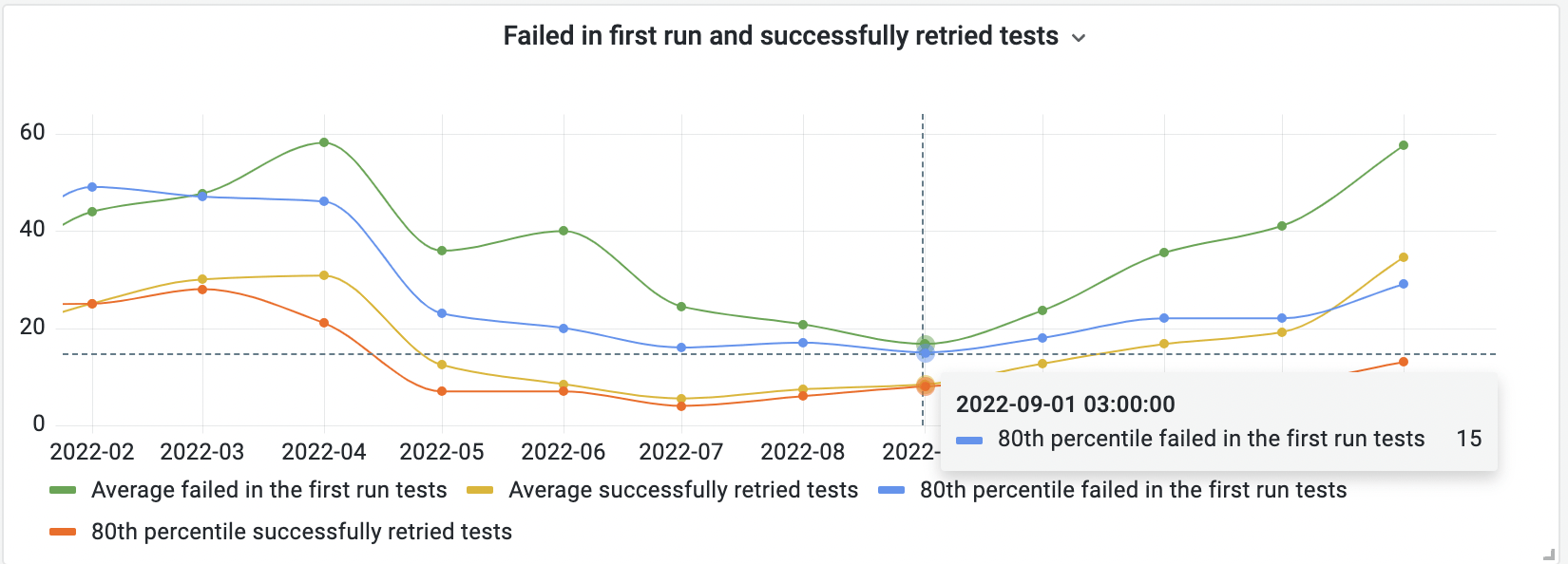

Allure TestOps does have an Analytics section for presenting metrics, but our friends from Wrike honestly told us they're not using it, preferring an in-house solution. They use TestOps as a test data source (along with TeamCity). The data is saved by the Allure client into internal databases, and charts are then generated in Grafana. As an example, here is a chart that compares the number of tests that failed on the first run with the number of those that were then successfully retried:

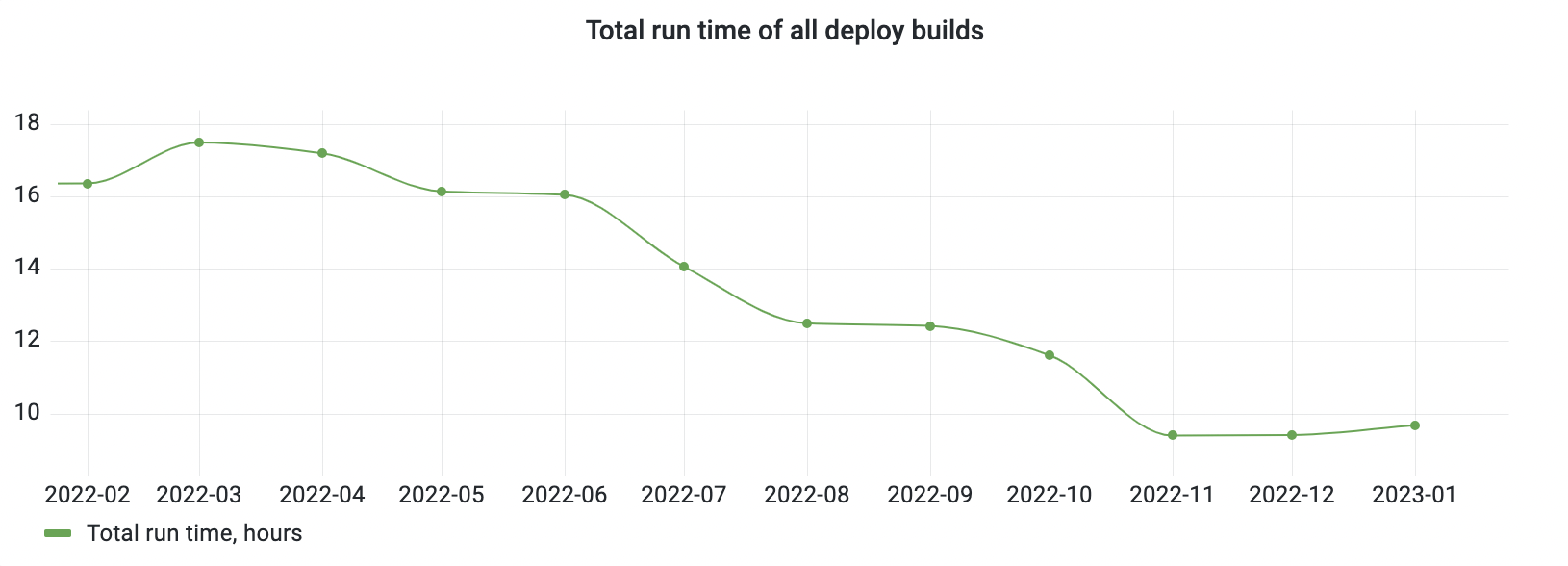

This shows how well the retry mechanism is working. Another metric being tracked at Wrike is the run time of deploy builds:

As you can see, in the 3rd and 4th quarters the company set a goal to reduce the run time; they used this metric to measure the results of their efforts.

Long-term, QA at Wrike has three main goals.

Wrike is currently looking to expand its team, and they need great QA Engineers. This might be an actual opportunity for you! At Wrike, you would develop internal tools and services in Java. You'd be able to work in a full-stack QA team and develop your automation skills at the Wrike QA automation school. If you enjoy working in a fast-paced environment, have web testing experience and a proactive attitude, follow the link and give it a go!