Behind the pretty HTML cover of Allure Report is the idea that QA should be the entire team's responsibility, not just the testing department. That means test results should be accessible to and readable by people without the QA or dev skill set.

Report allows you to move past the details that don’t help you, staying at your preferred level of abstraction - and yet, if you do need to drill into the code, it’s just a few mouse clicks away.

Report achieves this basic goal by being language-, framework-, and tool-agnostic. It can hide the peculiarities of your tech stack because it doesn’t depend upon it.

How does one become agnostic? It takes an initial structural decision - and then you have to write tons of integrations, which means hundreds of thousands of lines of code. Allure Report is designed to make that work easier.

Let’s imagine we’re writing a new integration for Report and look at what resources we can leverage. We will see how much effort we need with Report compared to other tools. We will start with the most straightforward advantages of the existing code base. Then, we'll talk about more fundamental stuff like architecture and knowledge.

Selenide native reporting vs Selenide in Allure Report

To begin with, let us compare native reporting for Selenide with Allure-Selenide and then estimate the work needed to write the Allure integration.

While creating simple reporting for Selenide is relatively easy, it’s a completely different story if you want to make quality test reports. JUnit has only one extension point - the exception thrown on test failure. You can jam the meta-information for the report into that exception, but working with that data will be difficult.

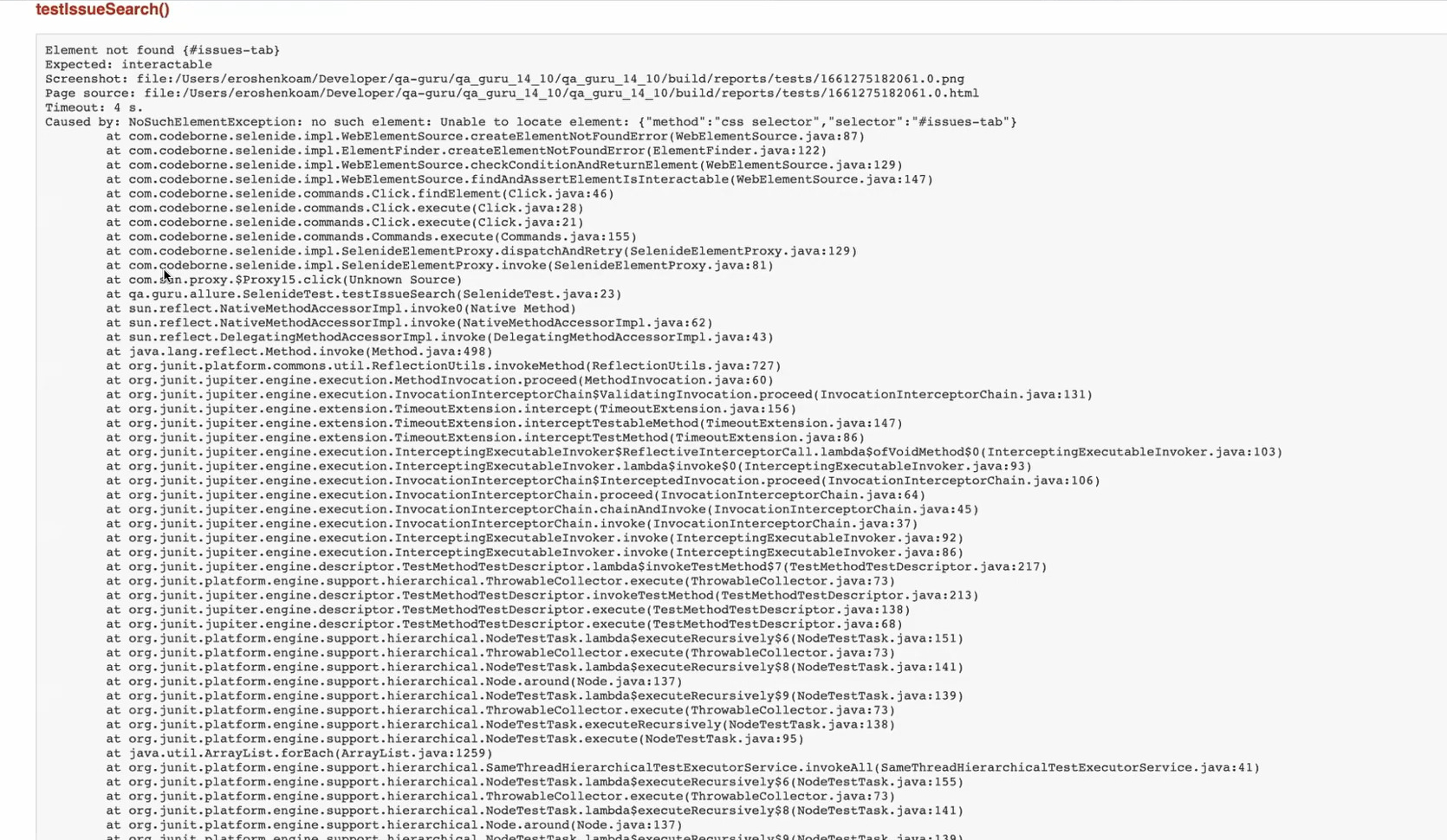

By default, Selenide and most other tools take the easy road. When Selenide reports on a failed test, what you get is just the text of the exception, a screenshot, and the HTML of the page at the time of failure:

If you’re the only tester on the project and all the tests are fresh in your memory, this might be more than enough - which is what the developers of Selenide are telling us.

Now, let’s compare this to Allure Report. If you run Report on a Selenide test with nothing plugged in, you’ll get only the text of the exception, the same as with Selenide’s own report.

But, as I’ve said before, the power of Allure Report is in its integrations. Things will change if we turn on allure-selenide and an integration for the framework you’re using (in this case - allure-junit). First (this is specific to the Selenide integration), we’re going to have to add the following line at the beginning of our test (or as a separate function with a @BeforeAll annotation):

SelenideLogger.addListener("AllureSelenide", new AllureSelenide());

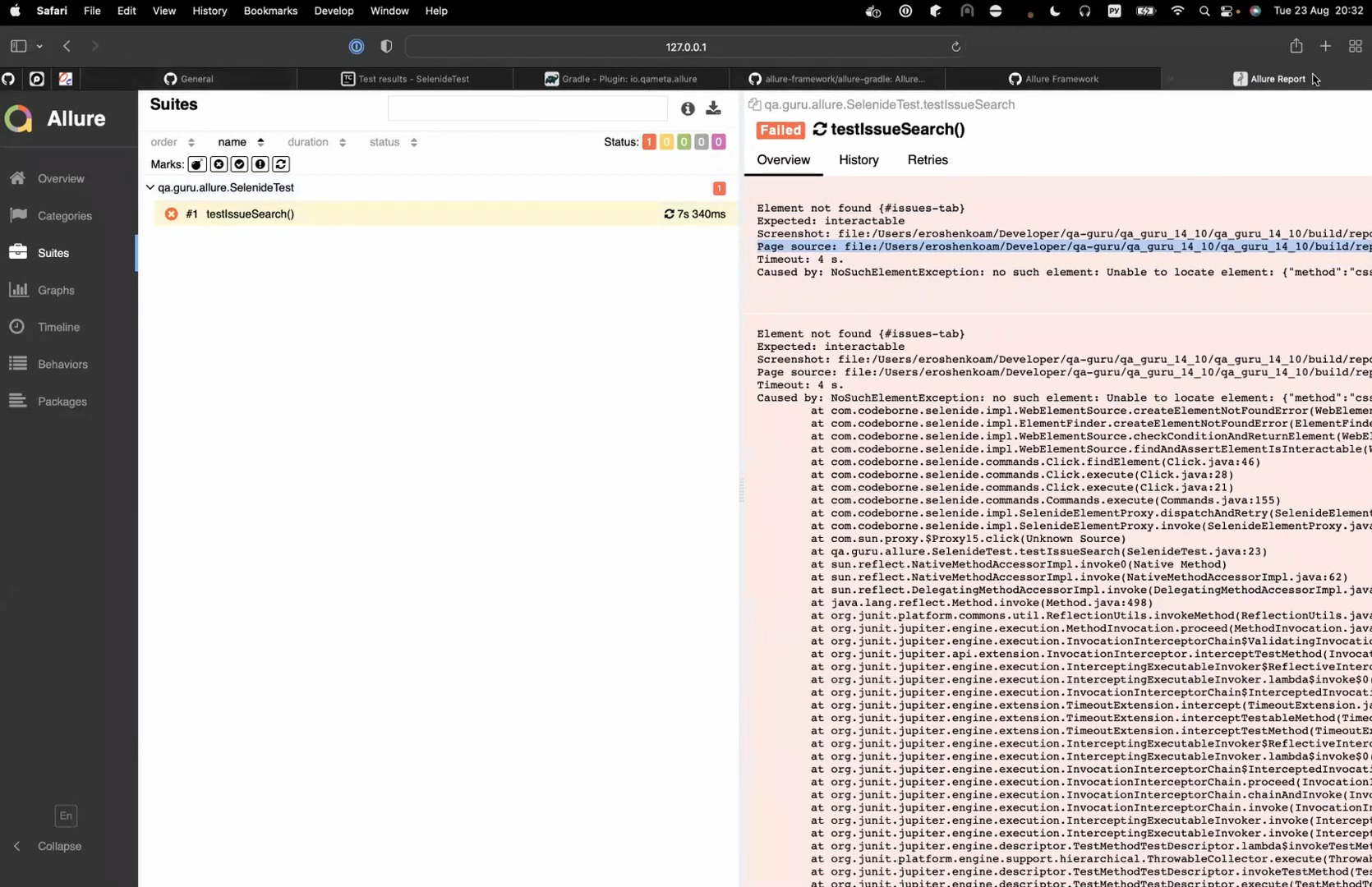

Now, our test results have steps in them, and you can see precisely where the test has failed:

Seeing the location can help you figure out why the test failed. You also get screenshots and the page source.
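To make the listener idea more concrete, here is a minimal, self-contained sketch of how a tool-side log with named listeners can turn each action into a report step. It uses only the Java standard library; `ActionLog`, `ActionListener`, and `StepCollector` are hypothetical names invented for this illustration and are not the Selenide or Allure classes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical listener interface: called once for every finished action. */
interface ActionListener {
    void afterAction(String description, Throwable error);
}

/** Hypothetical stand-in for a tool-side log that listeners subscribe to by name. */
final class ActionLog {
    private static final Map<String, ActionListener> LISTENERS = new LinkedHashMap<>();

    static void addListener(String name, ActionListener listener) {
        LISTENERS.put(name, listener);
    }

    static void removeListener(String name) {
        LISTENERS.remove(name);
    }

    /** Runs an action, reports its outcome to every listener, and rethrows any failure. */
    static void run(String description, Runnable action) {
        Throwable error = null;
        try {
            action.run();
        } catch (RuntimeException | AssertionError e) {
            error = e;
            throw e;
        } finally {
            for (ActionListener listener : LISTENERS.values()) {
                listener.afterAction(description, error);
            }
        }
    }
}

/** Reporting-side listener: turns every action into a step with a status. */
final class StepCollector implements ActionListener {
    final List<String> steps = new ArrayList<>();

    @Override
    public void afterAction(String description, Throwable error) {
        steps.add((error == null ? "passed" : "failed") + ": " + description);
    }
}

final class StepListenerDemo {
    static List<String> runSampleTest() {
        StepCollector collector = new StepCollector();
        ActionLog.addListener("collector", collector);
        try {
            ActionLog.run("open /login", () -> {});
            ActionLog.run("click #submit", () -> {
                throw new IllegalStateException("element not found");
            });
            ActionLog.run("check greeting", () -> {}); // never reached
        } catch (IllegalStateException expected) {
            // The test stops at the first failing action.
        } finally {
            ActionLog.removeListener("collector");
        }
        return collector.steps;
    }

    public static void main(String[] args) {
        runSampleTest().forEach(System.out::println);
    }
}
```

The last recorded step is the one that failed, which is the information a bare exception message does not give you.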

Finally, with these integrations, you can wrap the function calls of your test inside the step() function or use the @Step annotation for functions you write yourself. This way, the steps displayed in test results will have custom names you’ve written in human language, not lines of code. That makes the test results readable by people who don’t write Java (other testers, managers, etc.).
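The sketch below shows the general idea of wrapping test code in named steps, so that the report carries sentences instead of method calls. It is a standard-library-only illustration under stated assumptions: `StepReport` and its `step(name, body)` method are hypothetical and do not reproduce how Allure's own `step()` or `@Step` are implemented.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical recorder that stores named, nested steps together with their status. */
final class StepReport {
    private final List<String> lines = new ArrayList<>();
    private int depth = 0;

    /** Runs the body as a named step; nested calls are indented under their parent. */
    void step(String name, Runnable body) {
        int index = lines.size();
        lines.add(null); // placeholder keeps parent steps listed before their children
        depth++;
        String status = "passed";
        try {
            body.run();
        } catch (RuntimeException | AssertionError e) {
            status = "failed";
            throw e;
        } finally {
            depth--;
            lines.set(index, "  ".repeat(depth) + "[" + status + "] " + name);
        }
    }

    List<String> lines() {
        return new ArrayList<>(lines);
    }
}

final class HumanReadableStepsDemo {
    static List<String> run() {
        StepReport report = new StepReport();
        try {
            report.step("Log in as a registered user", () -> {
                report.step("Open the login page", () -> {});
                report.step("Submit valid credentials", () -> {});
            });
            report.step("Check that the dashboard greets the user", () -> {
                throw new AssertionError("greeting not found");
            });
        } catch (AssertionError expected) {
            // The failure is already recorded in the report.
        }
        return report.lines();
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```

Someone who has never seen the test code can still read the resulting outline and tell that logging in worked and the greeting check did not.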

Adding all the code for steps might seem like a lot of extra work, but in the long run, it actually saves time. Instead of answering a bunch of questions from other people in your company, you can just direct them to test results written in plain English.

That is powerful stuff compared to what Selenide (and most other tools) offer as default reports. How much effort did it take to achieve this?

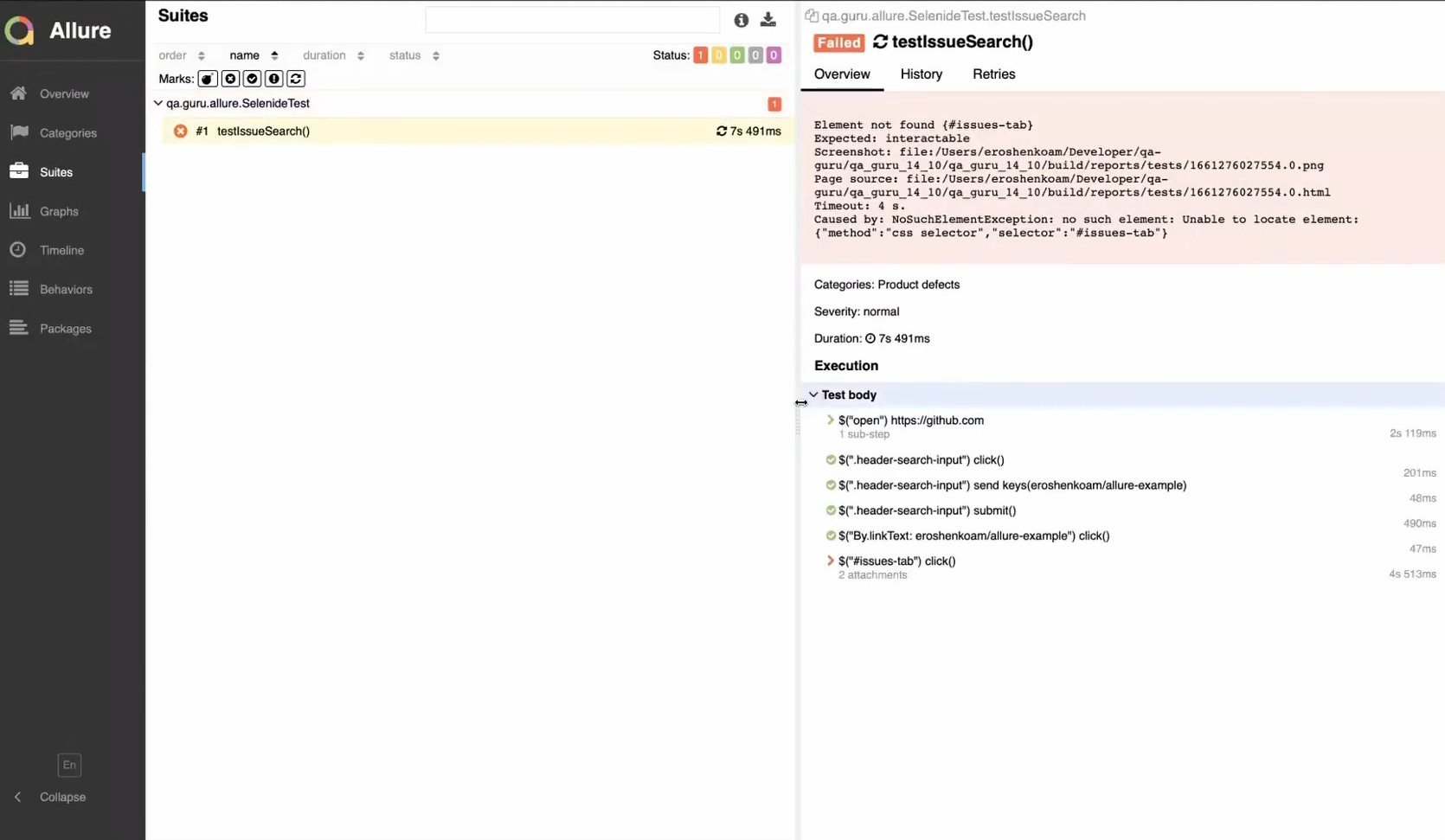

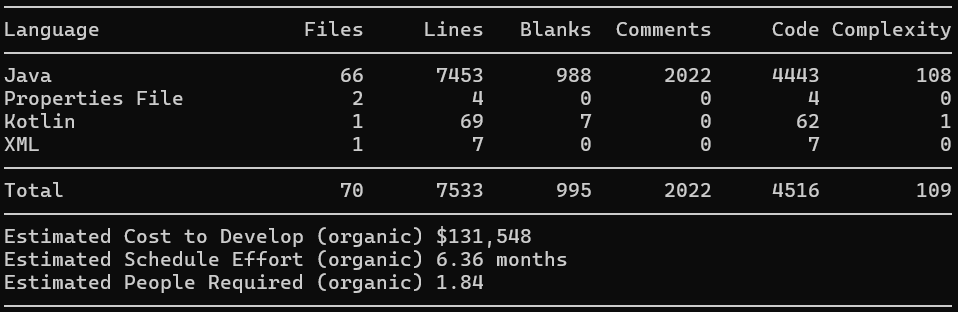

Here's how much work it took to write the allure-selenide integration, with tests and everything:

Considering the functionality this provides, that is a very reasonable amount of work. Writing such an integration would probably be about as easy as providing the bare exception that we get if we use Selenide’s native reporting. In part, this is because allure-selenide leverages work done elsewhere in the Allure ecosystem. Let's take a closer look at Allure's common libraries.

Common libraries

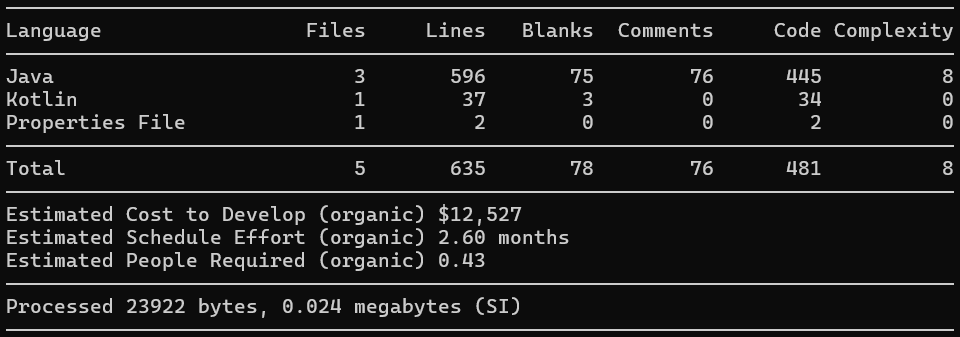

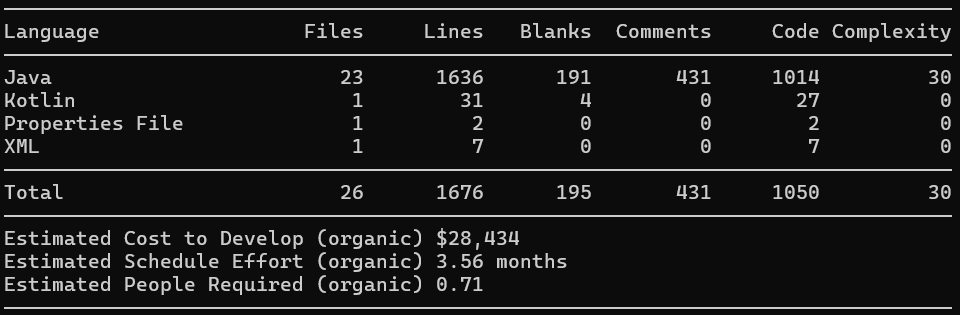

The 250 lines of code (excluding tests) in allure-selenide call roughly 500 lines of code from allure-model and about 1300 lines from allure-java-commons, both parts of allure-java. Here's the work investment for java-commons:

Initially, most framework adapters were written independently by the Allure community and existed as separate entities. Then they were collected in a single repository, and the shared parts of the code were merged into common libraries.

This centralization made writing new integrations much easier. Today, there are about three dozen integrations for different Java frameworks and tools - you can take a look at them all here. A lot of them are housed in allure-java and utilize the common libraries.

Writing the common libraries is not a straightforward task. There are problems of execution here that can be extremely difficult to solve. For instance, when working on the allure-go integration, Anton Sinyaev spent several months solving the issue of parallel test execution. By that time, that issue had existed for eight years in testify, the framework from which allure-go was forked. Such problems can be unique to a particular framework, which makes writing common libraries difficult.

To sum up, the allure-junit4 integration gives an idea of how much time you need once the process is smoothed out and the common libraries are present:

Without common libraries, you could be looking at half a year.

The JSON with the results

What helps with writing an integration, apart from the common libraries?

Say we're writing an integration from scratch for a new language, like Go. Go is very different from Java or Python, both in basic things like the lack of classes and in the way it works with threads. When allure-go was written, not only was it impossible to reuse existing code - even the general solutions couldn’t be translated from one language to another. So what was reused?

Probably the most important part of Allure Report is its data format, the JSON file that stores the results of test runs. That format is the meeting point for all languages, and it is the thing that makes Allure Report language-agnostic.

Designing the data format took about a year, and it has incorporated some major architectural decisions - which means if you’re writing a new integration, you no longer have to think about this stuff.
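To give a feel for what a shared results format means in practice, here is a small sketch that serializes one test result with two steps to JSON using only the Java standard library. The field names (`uuid`, `name`, `status`, `start`, `stop`, `steps`) are a simplified subset chosen for illustration and should be treated as assumptions; the real result schema has more fields, and the `ResultJson` helper is hypothetical.

```java
import java.util.List;

/** Hypothetical helper that renders a simplified test result as JSON text. */
final class ResultJson {
    /** Minimal string escaping: handles quotes, backslashes and newlines only. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                default:   sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    static String step(String name, String status) {
        return "{\"name\":" + quote(name) + ",\"status\":" + quote(status) + "}";
    }

    /** Start and stop are timestamps in milliseconds; steps are pre-rendered JSON objects. */
    static String testResult(String uuid, String name, String status,
                             long start, long stop, List<String> steps) {
        return "{\"uuid\":" + quote(uuid)
                + ",\"name\":" + quote(name)
                + ",\"status\":" + quote(status)
                + ",\"start\":" + start
                + ",\"stop\":" + stop
                + ",\"steps\":[" + String.join(",", steps) + "]}";
    }
}

final class ResultJsonDemo {
    static String sample() {
        return ResultJson.testResult(
                "example-uuid", "User can log in", "failed", 1000L, 2500L,
                List.of(
                        ResultJson.step("Open the login page", "passed"),
                        ResultJson.step("Click \"Submit\"", "failed")));
    }

    public static void main(String[] args) {
        System.out.println(sample());
    }
}
```

Nothing in the output is specific to Java. An adapter written in Go or Python that emits the same shape can be consumed by the same reporter, which is why the format acts as the meeting point for all languages.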

Because this work has already been done, it took just one weekend to write the first, raw version of allure-go - though several months after that were spent solving problems of execution and working out the kinks.

Experience

The least tangible asset of all is experience. Writing integrations is a peculiar programming field, and a person skilled in it will be much more productive than someone who is just talented and generally experienced.

To put this into numbers, it would take ten people about 2–3 years to re-do the work that’s been done on Allure Report, with one developer for each of the major languages and its common libraries, 2 or 3 devs for the reporter itself, an architect, and someone to work with the community.

Community

And this brings us to the final point. Our community has been a major driver for the development of our tool in the following ways:

Demand:

As we’ve already said, implementing test reporting can take months if done properly. If you’re doing this purely for your own comfort, you’ll probably do things quick-and-dirty. But knowing that millions of people are using the tool is enough motivation to sit around for an extra month or two and provide, say, proper parallel execution of tests.

Experienced developers:

Because Allure is open-source, there have always been plenty of talented developers writing integrations, and we hired many of them.

The integrations themselves:

Back in the days of Allure-1, our team wrote just one adapter (for JUnit), and then focused on the reporter. But the decision to separate the adapter and the reporter made it easier for other people to write their own adapters - and they did, covering all important languages and frameworks.

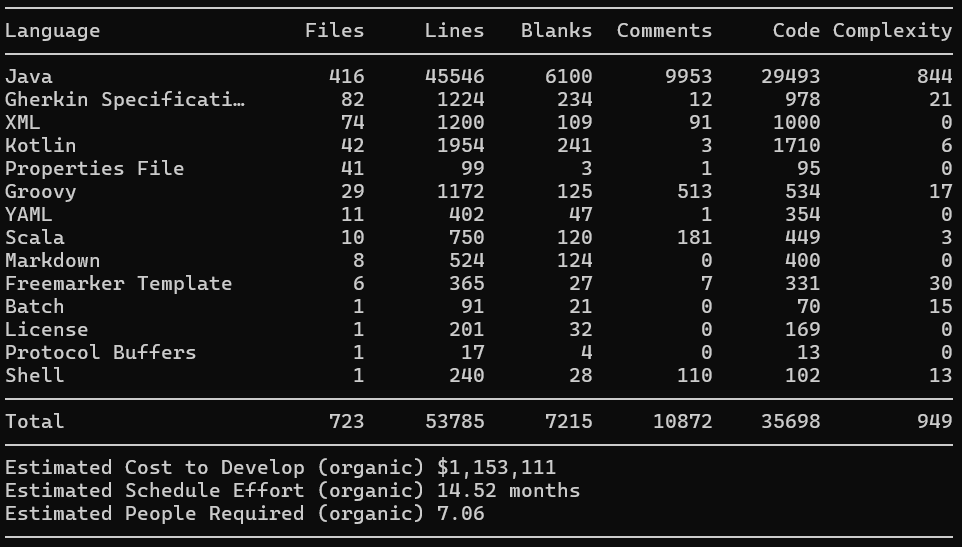

These integrations were then verified and internalized by our company. Here is an estimate of this combined effort for just one language (allure-java):

All of this has been possible because many developers out there use Allure Report as a default tool - they are the bedrock of our community.

Conclusion

The structure of Allure Report didn’t appear all at once, as Athena did from the head of Zeus. It took many years of thinking, planning, and iterating on community feedback. What emerged was a tool purpose-built to be extensible and to smooth out the creation of new integrations. Today, expanding upon this labor means leveraging the code, experience, and architectural decisions accumulated over the years.