Today we will talk about automation as a service. We at Qameta Software have been promoting this idea for quite a while, ever since we came up with it almost ten years ago. At that time, we were solving many problems with test automation. We had neither testing tools nor reports, so we had to build all of this ourselves. We calculated the cost and efficiency of automation and weighed whether it was needed at all. As a result, we arrived at the concept of automation as a service.

Allure TestOps is a somewhat unusual system: it combines a test case management system, a reporting tool for automated tests, and an analytics engine. At the same time, it integrates natively with various programming languages and other tools (CI systems, issue trackers, etc.).

We concluded that if we sell a tool, we shouldn't force our customers to integrate it themselves through the API. Everything has to work out of the box, and that is our main goal.

In this article, we will pose and answer three main questions related to the use of our system.

- Why do we need a TMS?

- How does a TMS usually work with automated tests?

- How do we set everything up, using Allure TestOps as an example?

Why would you want to use a TMS at all?

TMS stands for Test Case Management System or Test Management System. Let's consider a situation in which we have one tester on the project. What tasks do they perform?

- First, they write test cases, usually as a simple list stored in a small, unstructured document.

- Second, they conduct a testing session - they go through the list test by test and check that everything is working correctly.

- Finally, they record which functionality works and which bugs they found. That is the whole job.

With a single tester, this setup generally works fine: it is obvious who is responsible, and there is no need for any additional tools. But as soon as more testers join the project, each of these steps becomes more complicated. For example, when several testers write tests at the same time, keeping them all in a single doc file quickly becomes inconvenient.

At this point, we usually start grouping tests by functionality. The Word document gets replaced by an Excel sheet, test descriptions become more formal, and names, specific steps, the environment, and other details get documented.

Next, we need to plan test runs and split them by functionality and among QA engineers, so we start looking for a dedicated tool. Such a tool should let us create test sets, distribute them among engineers, filter and schedule them, and so on. It should also track who executed which test set. That helps us understand how a bug was missed: whether it was due to insufficient test coverage, a design or environment error, or a mistake by the person who did the testing.

In other words, as more testers work on the same project, the number of possible error sources grows too. A team of several testers also faces the problem of maintaining test documentation: the product keeps changing, and updates to the documentation have to be synchronized.

TMSs were created for exactly these purposes. Their main goal is control.

This doesn't mean a TMS should control the people on our team; we are talking about control over the workflow. The system keeps testers from overlapping: each one is assigned their own tasks, and whoever planned a run is responsible for it. This kind of control shows us that our work process is streamlined and organized.

Any TMS gives us an organized system of test cases. Each case can have a scenario description, as well as its own tags and other meta information. We can select the tests we need and launch a run with the click of a button. The tests are automatically assigned to users, who mark each one as passed or failed and add a detailed explanation of the result. In short, testers add test scenarios, and the scenarios are then executed. Anyone can log in and see which scenarios passed in a given run, who did the testing, and which bugs were found. Such systems have been developed for manual testing for more than fifteen years, and they already have all the necessary functionality: analytics, test distribution among users, reusable steps, test order management, and so on.
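To make this workflow concrete, here is a minimal sketch of the data a TMS keeps for a manual run: test cases with tags, a run built by selecting cases by tag, assignment to testers, and a recorded status with a comment. The `Case` and `RunEntry` classes, the `plan_run` and `record` functions, and the round-robin assignment policy are hypothetical illustrations, not how any particular TMS (Allure TestOps included) is implemented.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

@dataclass
class Case:
    """A manual test case with its meta information (hypothetical model)."""
    case_id: int
    name: str
    tags: Set[str] = field(default_factory=set)

@dataclass
class RunEntry:
    """One case inside a test run, assigned to a single tester."""
    case: Case
    assignee: str
    status: Optional[str] = None  # "passed" or "failed" once executed
    comment: str = ""             # the tester's explanation of the result

def plan_run(cases: List[Case], tag: str, testers: List[str]) -> List[RunEntry]:
    """Select the cases carrying `tag` and assign them to testers round-robin."""
    if not testers:
        raise ValueError("a run needs at least one tester")
    selected = [c for c in cases if tag in c.tags]
    return [RunEntry(c, testers[i % len(testers)]) for i, c in enumerate(selected)]

def record(entry: RunEntry, status: str, comment: str = "") -> None:
    """Store the outcome so anyone can later see who tested what and why it failed."""
    if status not in ("passed", "failed"):
        raise ValueError(f"unknown status: {status}")
    entry.status = status
    entry.comment = comment

cases = [
    Case(1, "Log in with a valid password", {"auth"}),
    Case(2, "Log out", {"auth"}),
    Case(3, "Search by keyword", {"search"}),
]
run = plan_run(cases, "auth", ["alice", "bob"])
record(run[1], "failed", "Log out button is missing on the profile page")
for entry in run:
    print(entry.case.name, entry.assignee, entry.status)
```

Round-robin is just the simplest way to show that every case in a run has exactly one owner; a real team would distribute cases by expertise or workload.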

Over time, every company comes to need automation. Of course, not everything can be automated, and automated tests are not always as flexible as human testers. But today, introducing automation no longer requires justification: it is a standard evolutionary step for any team. Compared to twelve years ago, there are now many tools that make a test automation engineer's work easier, so creating stable and reliable automated tests takes far less effort than it used to.

It is important to understand how the payback period for test automation is calculated. Say a round of manual testing takes ten hours, and we want to automate it. On top of those ten hours, we spend another ten hours, for example, writing automated tests. Once automation is in place, we start saving time: instead of ten hours of manual testing, a round now takes four hours of testing plus one hour of automated test support. At this point, you might think the automation has already paid for itself. In fact, we save only five hours per round, so we need to keep using the automated tests for at least two more rounds before the extra ten hours spent on automation are recovered.
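The payback arithmetic fits in a small function. It assumes that writing the automated tests is a one-time cost and that every later round costs the remaining manual hours plus support hours; real projects also pay for new tests and ongoing maintenance, so this is an optimistic estimate. The name `payback_cycles` is hypothetical.

```python
import math
from typing import Optional

def payback_cycles(manual_hours: float, automation_hours: float,
                   remaining_manual_hours: float, support_hours: float) -> Optional[int]:
    """Rounds of automated testing needed to recover the one-time automation effort.

    Assumes `automation_hours` is spent once, and that every later round
    costs `remaining_manual_hours + support_hours` instead of `manual_hours`.
    """
    saved_per_cycle = manual_hours - (remaining_manual_hours + support_hours)
    if saved_per_cycle <= 0:
        return None  # under these numbers, automation never pays off
    return math.ceil(automation_hours / saved_per_cycle)

# The article's numbers: 10 h manual, 10 h to automate, then 4 h testing + 1 h support.
print(payback_cycles(10, 10, 4, 1))  # 10 / (10 - 5) -> 2
```

Rounding up with `math.ceil` reflects that a partially completed round does not yet recover the investment.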

How does a TMS usually work with test automation?

The next logical question is how to use a test management system to introduce test automation. We need the same level of control over automated tests as over manual ones: we want to see how many new automated tests were written, make them easy to run, and generally work with them as a service. Some existing TMSs claim full support for test automation, but in practice it almost never works as promised. They offer integration via the API but do not warn users about the problems that may arise during integration.

Automation is somewhat more complicated than manual testing: there are parameterized tests, different environments, and many other nuances. Most test automation projects are set up in a very primitive way, which inflates the cost of automation. Besides developing the automated tests themselves and hiring or training employees, you also have to establish control over your workflow and keep the tests stable. On top of that, you need to avoid drowning in a sea of errors. As a result, many teams never implement automation at all.

The results of a manual tester's work are easy to see and evaluate, but the results of an automation engineer's work are harder to see and understand, because the control system does not track them. In my view, this is one of the main problems of legacy systems. The world has moved forward, and automation is now being seriously implemented in almost every team and project, yet the control systems we use have not responded to this challenge at all. With Allure TestOps, we are trying to solve this problem for our customers.

How to set up test automation with Allure TestOps?

How to automate manual test cases?

Let's imagine a manual tester who has written several test cases. They are very well documented and include a lot of useful information, test data, and detailed scenario descriptions. The cases are then handed over for automation. Working from ready-made test cases, an automation engineer who has just joined the team does not have to dive deep into the system's context at first. However, once automation is finished, we often know nothing about the state of the newly written tests: whether they were automated correctly and whether they perform the right steps.

In Allure TestOps, this problem is solved through native integrations with programming languages. From their IDE, the automation engineer can import any manual test case from Allure. A code template is generated from the information in the manual test, and the engineer then adds all the necessary code to it. This way, the detailed scenario with all its steps, test data, tags, and other important meta information, diligently filled in by a manual tester, is not lost during automation. With the Allure plugin, the automation engineer transfers a manual test into code with its entire structure preserved, and the manual tester can follow the resulting automated test in the Allure TestOps interface.

This approach establishes communication between a manual tester and an automation engineer. Here, manual testers act as customers: they write full-fledged test cases packed with useful information. Automation engineers carry all of this information over into automated tests, so the automated tests look almost the same as the manual ones. They are just as detailed and use the language manual testers are used to, which helps build confidence in test automation. This point deserves attention: since the manual tester is the automation engineer's customer, we need to make the results of automation as clear as possible to manual testers.

After the automated test is written, a manual tester can review it: compare the manual and automated scenarios in the system and validate the test data and the steps performed. At this stage, the automation engineer and the reviewer can discuss and resolve any disagreements. Once the review is finished, a single click turns the manual test case into an automated one.
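The review step amounts to comparing two lists of steps. As a rough sketch, assuming both scenarios can be exported as plain lists of step names (an assumption; in Allure TestOps the comparison happens in its interface), Python's standard `difflib` is enough to highlight where the automated test deviates from the documented scenario. `review_diff` is a hypothetical helper.

```python
import difflib
from typing import List

def review_diff(manual_steps: List[str], automated_steps: List[str]) -> List[str]:
    """Unified diff between the manual scenario and the automated one.

    An empty result means the automated test performs exactly the documented steps.
    """
    return list(difflib.unified_diff(
        manual_steps, automated_steps,
        fromfile="manual", tofile="automated", lineterm="",
    ))

manual = ["Open the login page", "Enter valid credentials", "Click Log in"]
automated = ["Open the login page", "Enter valid credentials", "Click Sign in"]
print("\n".join(review_diff(manual, automated)))
```

Lines prefixed with `-` exist only in the manual scenario and lines prefixed with `+` only in the automated one, which gives the reviewer and the automation engineer a concrete list of disagreements to resolve.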

How to run automated tests?

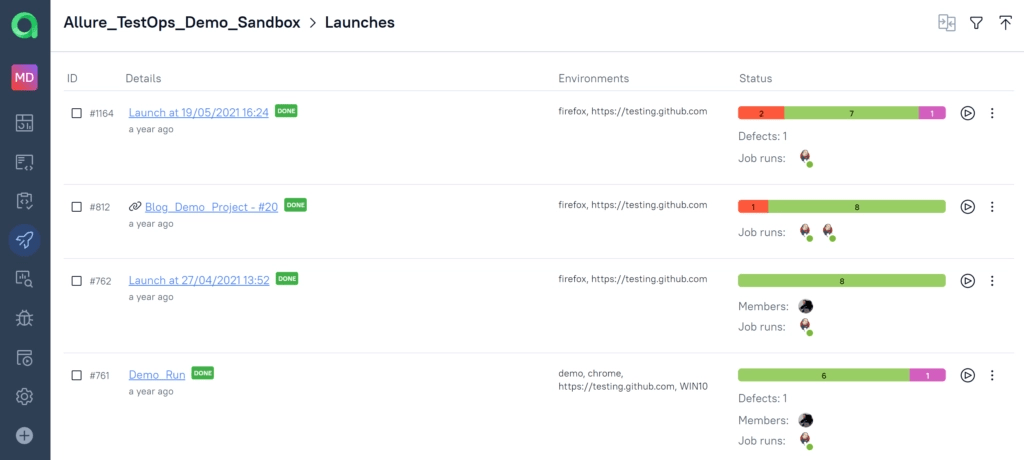

Apart from native integrations with programming languages, Allure also provides native integrations with CI systems. These integrations make test results available in real time, so the user can immediately see which tests have failed and why.

Allure lets users manually create the test suites they need to run. After the user selects tests and specifies the environment, the system automatically creates and runs the job in the CI system. Projects with many tests often need this, because it lets developers, managers, or analysts run small groups of tests during the day, for example, the tests covering one specific feature. It also makes it possible to restart only the failed tests without wasting time and resources on running the rest.

This behavior saves a lot of time on managing test automation. Automated tests sometimes turn out to be unstable, and what we often lack is a way to quickly restart a specific test. Allure lets you run automated tests from the system just like manual ones: select the cases you need, combine them into test plans, assign executors for the manual tests, and run the resulting test plans in one click.
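Restarting only the failed tests boils down to filtering the latest results before launching a new CI job. The sketch below assumes the results are available as a mapping from test name to status and covered feature; the `failed` and `broken` statuses mirror the ones Allure reports use, but the data shape and the `select_for_rerun` function are hypothetical. How the selected names reach the CI job depends on your runner; for a single local run, pytest offers its own `--last-failed` option.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical latest results: test name -> (status, feature it covers).
Results = Dict[str, Tuple[str, str]]

def select_for_rerun(results: Results, feature: Optional[str] = None) -> List[str]:
    """Return failed or broken tests, optionally limited to one feature, in a stable order."""
    return sorted(
        name
        for name, (status, covered_feature) in results.items()
        if status in ("failed", "broken")
        and (feature is None or covered_feature == feature)
    )

latest: Results = {
    "test_login": ("passed", "auth"),
    "test_logout": ("failed", "auth"),
    "test_search": ("broken", "search"),
    "test_checkout": ("passed", "cart"),
}
print(select_for_rerun(latest))          # every failed or broken test
print(select_for_rerun(latest, "auth"))  # only the failures for one feature
```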

Working with reports

The advantage, and also the problem, of automated tests compared to manual ones is that they are reproducible: if properly organized, they behave the same way on every run, including failing the same way. Let's say we run 100 tests and 5% of them fail. Analyzing each failed test takes five minutes, so going through all five failures takes twenty-five minutes. On its own, that is not much. But if different people run these hundred tests ten times a day, each of them spends twenty-five minutes going through the same failures, and the team as a whole spends 250 minutes, or more than four hours, every day.
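The arithmetic in this example is easy to parametrize. The function below is a back-of-the-envelope sketch that assumes every failure takes the same time to analyze and that each run is triaged separately; the optional `known_failures_per_run` parameter is a hypothetical addition showing how linking failures to already documented defects shrinks the total.

```python
def triage_minutes_per_day(tests: int, failure_percent: float, minutes_per_failure: float,
                           runs_per_day: int, known_failures_per_run: float = 0) -> float:
    """Minutes per day spent analyzing failures that nobody has documented yet."""
    failures_per_run = tests * failure_percent / 100
    new_failures = max(failures_per_run - known_failures_per_run, 0)
    return new_failures * minutes_per_failure * runs_per_day

minutes = triage_minutes_per_day(100, 5, 5, 10)
print(minutes, round(minutes / 60, 1))  # 250.0 4.2

# Hypothetical: if 4 of the 5 failures were already linked to documented defects.
print(triage_minutes_per_day(100, 5, 5, 10, known_failures_per_run=4))  # 50.0
```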

There are many reasons for automated tests to fail!

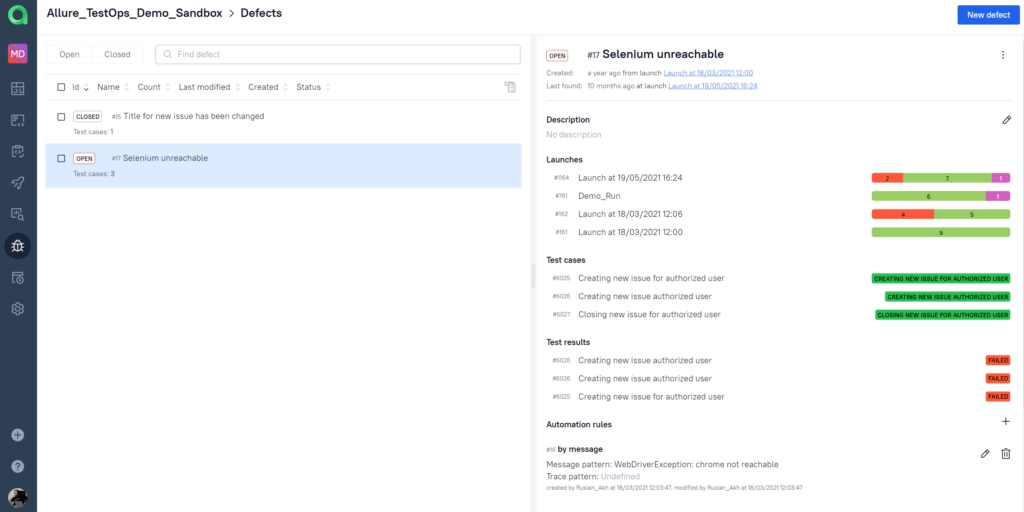

With so many possible causes, analyzing the same failures again and again quickly adds up and can damage the credibility of your automated tests. To solve this problem in Allure, we can open the failed test, find the exact reason for its failure (a stack trace or an error message), create a defect with a description, and link that defect to the specific failure (this does require some regex magic). The next time we run the suite and any test fails with the same error, instead of a red test we will see an already documented defect, and there will be no need to analyze the failure again.
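Linking a failure to a known defect is essentially pattern matching on the error message or stack trace. The sketch below is a simplified, hypothetical model of that idea, not Allure TestOps' actual matching logic: each documented defect carries a regular expression, and only failures that match none of them are reported as new. The defect ids, patterns, and function names are invented for illustration.

```python
import re
from typing import List, Optional

# Hypothetical documented defects, each linked to a regex over the failure message.
KNOWN_DEFECTS = {
    "DEF-101": re.compile(r"TimeoutException: .*login page"),
    "DEF-102": re.compile(r"Connection refused.*:5432"),
}

def classify(message: str) -> Optional[str]:
    """Return the id of the matching documented defect, or None for a new failure."""
    for defect_id, pattern in KNOWN_DEFECTS.items():
        if pattern.search(message):
            return defect_id
    return None

def new_failures(messages: List[str]) -> List[str]:
    """Keep only the failures that still need a human to analyze them."""
    return [m for m in messages if classify(m) is None]

failures = [
    "TimeoutException: waited 30s for login page",
    "OperationalError: Connection refused (db.local:5432)",
    "AssertionError: expected 3 items in cart, got 2",
]
print(new_failures(failures))
```

Allure Report applies a similar regex-based idea through its `categories.json` file (with fields such as `messageRegex` and `traceRegex`); check the Allure documentation for the exact matching rules.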

We can also view all defects on a separate page, change their status and integrate them with the bug tracker.

As a result, developers spend a minimum of time analyzing automated test results. They can ignore problems that are already documented and focus only on new failures caused by changes in the codebase. This greatly increases the credibility of automated tests and makes running them painless. Tests like these actually get used and bring real benefits to the project.

Test automation analytics

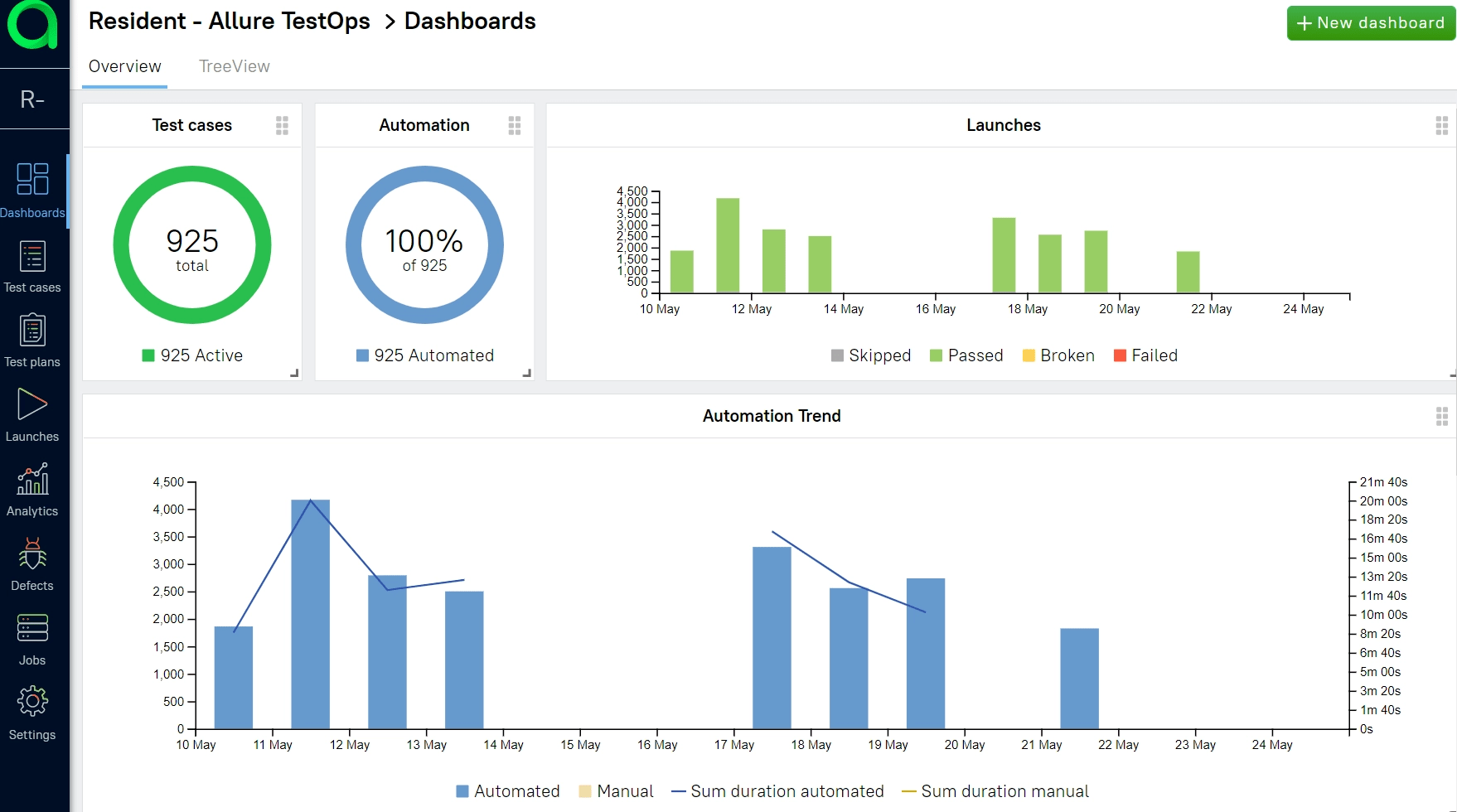

Analytics for automated tests should take many aspects into account: stability, execution speed, how quickly new tests are written, and the issues and errors that automated testing detects. Allure lets you keep track of all of this. There are many interesting metrics that can be visualized and organized into a dashboard.

Such dashboards are quite easy to set up and allow you to follow trends in the speed of development and execution of automated tests, the frequency of their failure, the workload of team members, etc.
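As an illustration of the kind of numbers such a dashboard aggregates, the sketch below computes a pass rate, a mean duration, and a naive flakiness flag per test from a run history. The record format, the `summarize` function, and the flakiness rule are assumptions made for this example and are not how Allure computes its own metrics.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

def summarize(history: Iterable[Tuple[str, str, float]]) -> Dict[str, dict]:
    """Per-test pass rate, mean duration and a naive flakiness flag.

    `history` holds (test_name, status, duration_seconds) records from past runs.
    """
    runs: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for name, status, duration in history:
        runs[name].append((status, duration))
    summary = {}
    for name, results in runs.items():
        statuses = [status for status, _ in results]
        summary[name] = {
            "pass_rate": statuses.count("passed") / len(statuses),
            "mean_duration": mean(duration for _, duration in results),
            # Naive heuristic: a test that both passed and failed is a flakiness
            # candidate (the code under test may also have changed in between).
            "flaky": "passed" in statuses and "failed" in statuses,
        }
    return summary

history = [
    ("test_login", "passed", 2.0),
    ("test_login", "failed", 4.0),
    ("test_search", "passed", 1.0),
    ("test_search", "passed", 3.0),
]
for name, metrics in summarize(history).items():
    print(name, metrics)
```

Tracking these values per run over time gives the trend lines mentioned above: a falling pass rate or a rising mean duration is visible long before anyone complains about it.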

Conclusion

In the end, automated testing becomes an actual service. Writing the automated tests is only a small part of the job, and you can hire a good automation engineer for that. But to implement automation genuinely and effectively, you will need to take care of all the aspects we have discussed in this article:

- Control of the workflow of automation.

- Execution of test runs.

- Organization and management of test reports.

- Analytics of test results.

Hiring a good automation engineer is not enough to organize all these processes.

Getting started with Allure is easy: Allure Report, our open-source reporting tool, has everything you need. It lets you set up analytics, sort errors into categories, describe automated tests as detailed scenarios, and more.

Then, if this approach proves itself in your team, take a look at Allure TestOps.

Learn more about Allure tools

Qameta Software focuses on developing amazing tools that help software testers. Learn more about Allure Report, a lightweight automation reporting tool, and Allure TestOps, the all-in-one DevOps-ready testing platform.